95% of organisations implementing AI technologies report no measurable return on investment, whilst AI-generated “workslop” costs a typical 10,000-person organisation over £9 million annually in lost productivity.

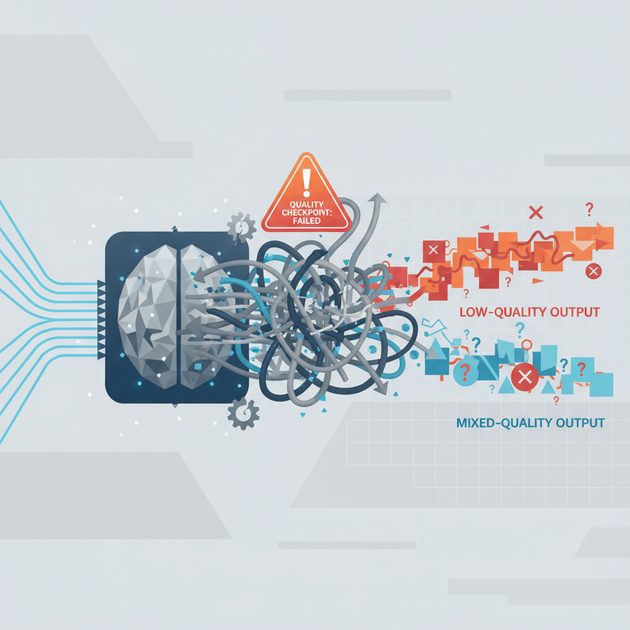

The paradox is stark: whilst AI promises unprecedented efficiency gains, the reality for most organisations is a productivity decline driven by poor-quality outputs that shift cognitive labour downstream to human colleagues.

This represents the largest gap between AI promise and AI delivery in the technology’s brief commercial history. Understanding why requires examining not just the technology, but the organisational and human factors that determine AI success or failure.

Strategic Context

The business problem AI was meant to solve (labour-intensive knowledge work requiring speed and scale) has instead created a new category of organisational waste. “Workslop,” defined as AI-generated output requiring significant human remediation, has become a common experience for employees encountering AI at work.

The Real Story Behind the Headlines

Beyond the technical capabilities marketed by AI vendors lies a more complex implementation reality. When employees receive AI-generated content requiring interpretation, fact-checking, or complete rework, the promised time savings evaporate. Instead, organisations experience what researchers term “cognitive labour displacement”: work isn’t eliminated but moved to different people with different skills.

Critical Numbers

| Metric | Impact | Estimated Cost |
|---|---|---|
| Zero ROI projects | 95% of AI implementations | £9.3M per year in lost productivity (10,000 employees) |
| Monthly workslop exposure | 41% of employees affected | £186 per incident |
| Trust degradation | 50% see AI users as less creative | Immeasurable cultural damage |
| Effectiveness loss | 42% reduced trust in AI colleagues | £1,860 per affected employee |
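The headline cost can be roughly reconstructed from the figures above. The sketch below is illustrative only: it assumes each affected employee absorbs one £186 incident per month, a frequency the table does not state, and the function name `annual_workslop_cost` is hypothetical.

```python
# Rough reconstruction of the headline cost figure.
# Assumption (not stated in the source): each affected employee
# absorbs one £186 incident per month.

EMPLOYEES = 10_000
PERCENT_AFFECTED = 41          # 41% of employees affected
COST_PER_INCIDENT_GBP = 186    # £186 per incident
INCIDENTS_PER_YEAR = 12        # assumed: one incident per month


def annual_workslop_cost(employees, percent_affected, cost_per_incident, incidents_per_year):
    """Return the estimated yearly cost in whole pounds."""
    # Integer arithmetic keeps the result exact.
    affected = employees * percent_affected // 100
    return affected * cost_per_incident * incidents_per_year


estimate = annual_workslop_cost(
    EMPLOYEES, PERCENT_AFFECTED, COST_PER_INCIDENT_GBP, INCIDENTS_PER_YEAR
)
print(f"£{estimate:,}")  # £9,151,200
```

Under that assumption the estimate is about £9.15M, consistent with the "over £9 million" headline and slightly below the £9.3M shown in the table, whose exact derivation is not given here.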

Deep Dive Analysis

What’s Really Happening

The operational transformation promised by AI vendors assumes perfect prompt engineering, consistent context provision, and seamless human-AI collaboration. In practice, most organisations deploy AI tools without strategic frameworks, leaving employees to discover effective usage patterns through trial and error.

The critical insight: AI tools deployed without strategic implementation frameworks don’t reduce work—they create new categories of quality assurance work that didn’t previously exist.

Success Factors Often Overlooked

- Prompt engineering expertise: Creating consistent, high-quality outputs requires specialised skills most organisations lack

- Context architecture: AI systems need structured information environments to produce relevant outputs

- Human-in-the-loop governance: Quality controls must be designed into workflows, not added retrospectively

- Cultural change management: Teams require training on when AI adds value versus when traditional approaches remain superior

The Implementation Reality

Most AI implementations follow a pattern: initial enthusiasm, rapid deployment across multiple use cases, declining output quality as novelty wears off, and eventual abandonment or severe restriction of AI tools. This cycle occurs because organisations focus on technology adoption rather than capability development.

⚠️ Critical Risk: Without proper implementation frameworks, AI tools become productivity debt—creating more work than they eliminate whilst degrading team trust and collaboration.

Strategic Analysis

Beyond the Technology: The Human Factor

The most significant barrier to AI success isn’t technical—it’s organisational. When employees perceive AI-generated content as requiring more oversight than traditional outputs, they naturally develop risk-averse behaviours that negate potential efficiency gains.

Stakeholder Impact Analysis

| Stakeholder | Primary Impact | Support Required | Success Metrics |
|---|---|---|---|
| Managing Directors | ROI disappointment, strategic uncertainty | Clear implementation frameworks | Measurable productivity gains |
| Department Heads | Team resistance, quality concerns | Change management support | Team adoption rates, output quality |
| Individual Contributors | Increased workload, tool frustration | Skills training, clear guidelines | Time savings, reduced rework |
| IT Teams | Integration complexity, security concerns | Governance frameworks | System reliability, compliance |

What Actually Drives Success

Traditional metrics focus on adoption rates and feature usage rather than business outcomes. Successful AI implementations require redefining success around:

- Quality consistency: AI outputs meeting standards without human intervention

- Cognitive load reduction: Actual time savings for knowledge workers

- Trust maintenance: Preserving team collaboration and professional relationships

🎯 Success Redefinition: Measure AI value by work eliminated, not work generated. If human review and rework take longer than the time the AI saved, the implementation has failed.
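One way to make "work eliminated" measurable is to compare the time a task took before AI with the total human time it takes with AI. The sketch below is a minimal illustration, not a prescribed method: the `TaskTiming` fields and the `implementation_succeeds` helper are hypothetical names, and the sample minutes are invented purely to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class TaskTiming:
    """Minutes of human effort for one task (illustrative field names)."""
    baseline_minutes: float    # time to do the task without AI
    prompting_minutes: float   # time spent prompting and supplying context
    review_minutes: float      # time spent checking the AI output
    rework_minutes: float      # time spent fixing the AI output

    def ai_assisted_minutes(self) -> float:
        """Total human time when the task is done with AI."""
        return self.prompting_minutes + self.review_minutes + self.rework_minutes

    def net_minutes_saved(self) -> float:
        """Positive when AI reduced human effort, negative when it added to it."""
        return self.baseline_minutes - self.ai_assisted_minutes()


def implementation_succeeds(tasks: list[TaskTiming]) -> bool:
    """True only if total human effort went down across the sampled tasks."""
    return sum(t.net_minutes_saved() for t in tasks) > 0


# Invented sample data: one task that saves time, one that costs time.
sample = [
    TaskTiming(baseline_minutes=60, prompting_minutes=5, review_minutes=10, rework_minutes=5),
    TaskTiming(baseline_minutes=30, prompting_minutes=5, review_minutes=20, rework_minutes=25),
]
for task in sample:
    print(task.net_minutes_saved())
print(implementation_succeeds(sample))
```

Tracking the figure per task rather than only in aggregate shows which use cases are carrying the savings and which are quietly adding work.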

Strategic Recommendations

💡 Implementation Framework: Success requires three phases: strategic assessment (identify high-value use cases), guided pilot implementation (develop organisational capabilities), and scaled deployment (maintain quality whilst expanding usage).

Priority Actions for Different Contexts

For Organisations Just Starting

- Conduct use case assessment: Identify 3-5 specific workflows where AI adds clear value

- Develop prompt engineering capabilities: Train teams on effective AI interaction patterns

- Establish quality frameworks: Define acceptable output standards before deployment

For Organisations Already Underway

- Audit current AI usage: Measure actual time savings versus promised benefits

- Implement governance frameworks: Create clear guidelines for AI tool usage

- Redesign workflows: Integrate AI tools as collaborative aids rather than replacement systems

For Advanced Implementations

- Optimise prompt engineering: Develop organisation-specific prompt libraries

- Scale successful patterns: Replicate high-performing use cases across teams

- Build internal expertise: Develop dedicated AI implementation capabilities

Hidden Challenges

Challenge 1: The Prompt Engineering Skills Gap

Most organisations lack employees with systematic prompt engineering expertise, leading to inconsistent outputs and user frustration.

Mitigation Strategy: Develop internal prompt engineering capabilities through structured training programmes and create organisation-specific prompt libraries for common use cases.
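As a rough illustration of what an organisation-specific prompt library could look like, the sketch below stores approved templates by use case and fills them with Python's standard `string.Template`. The use case names, the template wording, and the `build_prompt` helper are all hypothetical; a real library would reflect the organisation's own workflows and review process.

```python
from string import Template

# Hypothetical prompt library: one approved template per use case.
PROMPT_LIBRARY = {
    "meeting_summary": Template(
        "Summarise the meeting notes below for $audience in at most "
        "$max_bullets bullet points. Flag any decision that lacks an owner.\n\n"
        "$notes"
    ),
    "customer_reply": Template(
        "Draft a reply to the customer message below in a $tone tone. "
        "Do not promise dates or refunds.\n\n$message"
    ),
}


def build_prompt(use_case: str, **fields: str) -> str:
    """Fill an approved template; fail loudly if anything is missing."""
    if use_case not in PROMPT_LIBRARY:
        raise KeyError(f"No approved prompt for use case: {use_case}")
    # Template.substitute raises KeyError when a placeholder has no value,
    # so incomplete prompts are caught before they reach an AI tool.
    return PROMPT_LIBRARY[use_case].substitute(**fields)


prompt = build_prompt(
    "meeting_summary",
    audience="the finance team",
    max_bullets="5",
    notes="Budget review moved to Thursday. Owner for vendor renewal not agreed.",
)
print(prompt)
```

Keeping templates in one shared place means improvements made by one team reach every user, which addresses the inconsistency that ad hoc prompting produces.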

Challenge 2: Context Fragmentation

AI tools perform poorly when operating without sufficient context about organisational priorities, brand voice, and specific project requirements.

Mitigation Strategy: Implement context architecture frameworks that provide AI systems with relevant background information and maintain consistency across different users and projects.
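A context architecture can start very simply: shared background is maintained once and attached to every request, so different users give the AI the same picture. The sketch below assumes a plain-text preamble is enough; the class names, fields, and `render_context` function are hypothetical, and the example values are invented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OrganisationContext:
    """Background maintained centrally (illustrative fields)."""
    priorities: tuple[str, ...]
    brand_voice: str


@dataclass(frozen=True)
class ProjectContext:
    """Background specific to one project (illustrative fields)."""
    name: str
    requirements: tuple[str, ...]


def render_context(org: OrganisationContext, project: ProjectContext) -> str:
    """Build a preamble to place before any prompt for this project."""
    lines = ["Organisational priorities:"]
    lines += [f"- {p}" for p in org.priorities]
    lines.append(f"Brand voice: {org.brand_voice}")
    lines.append(f"Project: {project.name}")
    lines.append("Project requirements:")
    lines += [f"- {r}" for r in project.requirements]
    return "\n".join(lines)


org = OrganisationContext(
    priorities=("Reduce rework", "Protect client trust"),
    brand_voice="plain, direct, British English",
)
project = ProjectContext(
    name="Quarterly client report",
    requirements=("Cite every figure", "Two pages maximum"),
)
print(render_context(org, project))
```

Because the preamble is generated from one source of truth, two people working on the same project send identical background, which is the consistency the mitigation strategy calls for.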

Challenge 3: Quality Assurance Overhead

Reviewing AI outputs often requires more time than creating original content, particularly for complex or creative tasks.

Mitigation Strategy: Define clear use case boundaries where AI adds value and develop rapid quality assessment techniques to minimise review overhead.

Challenge 4: Cultural Resistance and Trust Erosion

When AI outputs consistently require significant rework, teams develop negative associations with AI tools and colleagues who use them.

Mitigation Strategy: Focus on use cases where AI clearly adds value, provide extensive training on effective usage patterns, and maintain transparent communication about AI capabilities and limitations.

Strategic Takeaway

The current AI productivity crisis stems from treating AI deployment as a technology implementation rather than an organisational capability development challenge. Success requires systematic approaches to prompt engineering, context provision, and human-AI collaboration.

Three Critical Success Factors

- Strategic Use Case Selection: Focus AI deployment on specific workflows where clear value exists rather than broad, general usage

- Capability Development: Invest in prompt engineering skills and context architecture before scaling AI usage

- Quality-First Implementation: Establish output standards and governance frameworks from day one rather than addressing quality issues retrospectively

Reframing Success

Traditional AI metrics measure activity (prompts generated, tools adopted) rather than outcomes (work eliminated, quality maintained). Successful organisations measure AI value through those outcomes.

The ultimate test: Does AI usage reduce total human effort required to achieve specific business outcomes, whilst maintaining or improving output quality?

Your Next Steps

Immediate Actions (This Week)

- Audit current AI usage patterns and measure actual time impacts

- Identify 3-5 specific use cases where AI clearly adds value

- Establish quality standards for AI-generated outputs

Strategic Priorities (This Quarter)

- Develop prompt engineering capabilities within teams

- Create governance frameworks for AI tool usage

- Implement measurement systems for AI business impact

Long-term Considerations (This Year)

- Build internal AI implementation expertise

- Scale successful use cases across the organisation

- Develop competitive advantage through effective AI integration

Source: AI-Generated Workslop Is Destroying Productivity

This strategic analysis was developed by Resultsense, providing AI expertise by real people. We help organisations navigate the complexity of AI implementation with practical, human-centred strategies that deliver real business value.