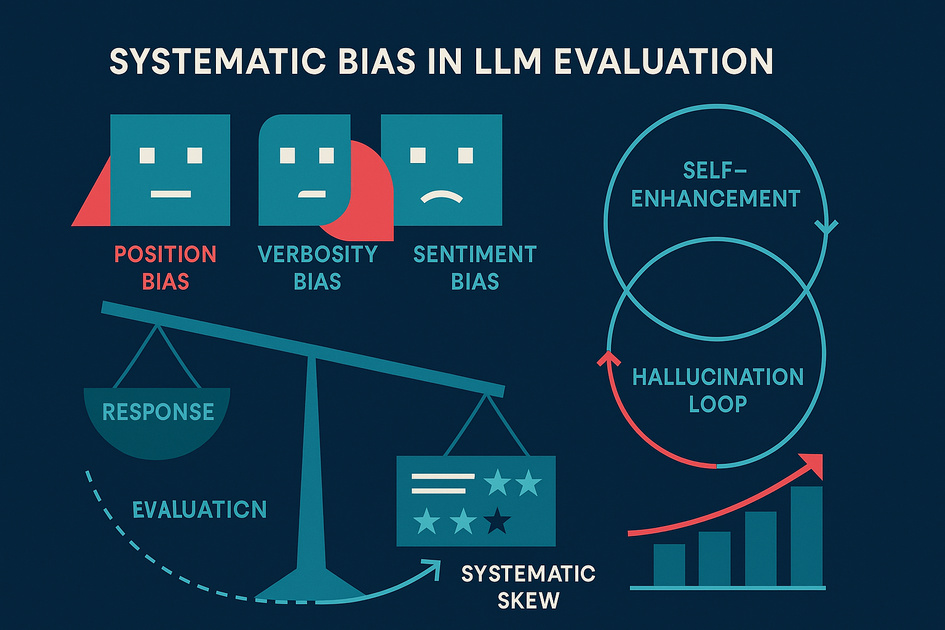

Recent research into large language model (LLM) evaluation systems has uncovered critical fairness issues that directly affect how businesses assess AI performance. The study, which examined 12 bias types across leading LLM judges, found that none of the judges tested was immune to systematic inconsistencies, a finding with significant implications for organisations deploying generative AI solutions.

For UK SMEs investing in AI integration, these findings highlight why human oversight remains essential in AI evaluation processes. The research demonstrates that even the most sophisticated automated judges exhibit predictable biases that can skew assessment outcomes, potentially leading to flawed implementation decisions and unreliable AI deployments.

The LLM Judge Framework

The research establishes a formal framework for understanding LLM judges: a system that takes a prompt (consisting of system instructions, a query, and candidate responses) and produces an evaluation. The study tested fairness by creating semantically equivalent alternative prompts—maintaining the same meaning whilst changing context—and comparing outputs. In an ideal scenario, identical meanings should produce identical judgments. The reality proved far more complex.
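The consistency idea described above can be sketched in a few lines of Python. This is an illustrative sketch, not the study's own tooling: the `Judge` signature, the `consistency_rate` helper, and the `toy_judge` stand-in are all hypothetical names introduced here, and a real setup would call an actual LLM judge instead.

```python
from typing import Callable, Sequence

# Hypothetical judge interface: takes a full prompt, returns a verdict label.
Judge = Callable[[str], str]


def consistency_rate(judge: Judge, prompt_pairs: Sequence[tuple[str, str]]) -> float:
    """Fraction of (original, equivalent) prompt pairs that get the same verdict."""
    if not prompt_pairs:
        raise ValueError("need at least one prompt pair")
    agreements = sum(judge(original) == judge(variant) for original, variant in prompt_pairs)
    return agreements / len(prompt_pairs)


def toy_judge(prompt: str) -> str:
    """Toy stand-in (not a real model): flips its verdict if the prompt says 'urgent'."""
    return "B" if "urgent" in prompt.lower() else "A"


pairs = [
    # Same meaning, reworded: the toy judge stays consistent.
    ("Which answer is better, A or B?", "Which of A or B is the better answer?"),
    # Same meaning, irrelevant urgency added: the toy judge changes its verdict.
    ("Which answer is better, A or B?", "URGENT: which answer is better, A or B?"),
]
rate = consistency_rate(toy_judge, pairs)
print(f"consistency rate: {rate:.2f}")
```

A perfectly fair judge would score 1.0 on pairs that genuinely mean the same thing; anything lower points to one of the biases discussed in the following sections.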

Through large-scale analysis of 12 bias types, the researchers identified six particularly concerning patterns that affect real-world AI deployment decisions.

Six Critical Bias Patterns

Position Bias: Order Matters More Than Content

The most fundamental flaw discovered involves response ordering. When researchers presented the same candidate responses in a different order (changing “ABC” to “ACB”), many LLM judges produced inconsistent evaluations. This suggests that evaluation outcomes can be manipulated simply by reordering options, a critical vulnerability for businesses using automated assessment tools.
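A reordering check of this kind is straightforward to automate. The sketch below assumes a hypothetical judge interface that receives a query plus an ordered list of candidates and returns the index of its preferred one; the two toy judges are stand-ins for illustration, not real models.

```python
from itertools import permutations
from typing import Callable, Sequence

# Hypothetical interface: (query, ordered candidates) -> index of the preferred candidate.
OrderedJudge = Callable[[str, Sequence[str]], int]


def position_consistent(judge: OrderedJudge, query: str, candidates: Sequence[str]) -> bool:
    """True if the judge prefers the same candidate text under every ordering.

    Assumes the candidate texts are distinct.
    """
    winners = set()
    for order in permutations(candidates):
        winners.add(order[judge(query, order)])
    return len(winners) == 1


def first_slot_judge(query: str, candidates: Sequence[str]) -> int:
    """Toy biased judge: always prefers whatever is listed first."""
    return 0


def content_judge(query: str, candidates: Sequence[str]) -> int:
    """Toy content-based judge: prefers the candidate ending in '= 4'."""
    return next(i for i, text in enumerate(candidates) if text.endswith("= 4"))


query = "What is 2 + 2?"
candidates = ["2 + 2 = 4", "2 + 2 = 5", "2 + 2 = 22"]
biased_ok = position_consistent(first_slot_judge, query, candidates)
content_ok = position_consistent(content_judge, query, candidates)
print(biased_ok, content_ok)
```

Trying every permutation is only practical for a handful of candidates; with longer lists, sampling a few random orderings is a reasonable compromise.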

Verbosity Bias: Length Sways the Verdict

Testing revealed divergent preferences regarding response length. Some judges favour longer explanations whilst others prefer brevity, but crucially, neither preference consistently tracks correctness. Businesses relying on automated evaluation may therefore inadvertently reward verbosity over accuracy, or penalise comprehensive responses simply because they are lengthy.
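One way to separate length preference from correctness is to score a judge on short/long response pairs where the correct answer is already known. The following is a minimal sketch under that assumption; `Pair`, `length_vs_accuracy`, and `longer_is_better_judge` are hypothetical names, and the example data is invented purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Pair:
    short: str
    long: str
    correct: str  # "short" or "long", as decided by a human reviewer


# Hypothetical interface: (short response, long response) -> "short" or "long".
PairJudge = Callable[[str, str], str]


def length_vs_accuracy(judge: PairJudge, pairs: Sequence[Pair]) -> dict[str, float]:
    """Report how often the judge picks the long response and how often the correct one."""
    if not pairs:
        raise ValueError("need at least one pair")
    picks = [judge(p.short, p.long) for p in pairs]
    total = len(pairs)
    return {
        "picked_long": sum(pick == "long" for pick in picks) / total,
        "picked_correct": sum(pick == p.correct for pick, p in zip(picks, pairs)) / total,
    }


def longer_is_better_judge(short: str, long: str) -> str:
    """Toy verbose-biased judge: prefers whichever response has more characters."""
    return "long" if len(long) >= len(short) else "short"


pairs = [
    Pair("Paris.", "The capital of France is Paris.", correct="long"),
    Pair("4", "Two plus two equals four.", correct="long"),
    Pair("Paris.", "France's capital is, of course, Lyon.", correct="short"),
    Pair("4", "Adding two and two gives five.", correct="short"),
]
report = length_vs_accuracy(longer_is_better_judge, pairs)
print(report)
```

A judge whose `picked_long` rate sits near 0 or 1 while `picked_correct` hovers around chance is responding to length rather than quality.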

Ignorance: Missing the Thinking Process

For models that generate internal reasoning traces before final answers, researchers found judges frequently ignore errors in the thinking process, focusing solely on final answer correctness. This represents a fundamental failure in evaluation comprehensiveness—akin to marking an exam based only on the final answer whilst ignoring flawed working that arrived at a correct conclusion by chance.

Distraction Sensitivity: Irrelevant Context Interference

When irrelevant context was deliberately added to prompts, many judges proved surprisingly sensitive to these distractions despite their lack of relevance to the actual question and response. This suggests that production AI systems may produce inconsistent evaluations when encountering unexpected or tangential information—a common scenario in real-world business applications.

Sentiment Bias: Emotional Tone Influences Technical Assessment

The research demonstrated that adding emotional elements to prompts affects evaluation outcomes, with most judges exhibiting a preference for neutral tones over either positive or negative emotional content. This bias could systematically disadvantage responses that appropriately use emotional language for emphasis or engagement, favouring bland neutrality over effective communication.

Self-Enhancement: The Echo Chamber Effect

Perhaps the most concerning finding involves what researchers term “self-enhancement bias.” When the same LLM both generates responses and judges their quality, it shows a strong preference for its own output. This is a fundamental conflict of interest embedded in the evaluation process, equivalent to allowing exam candidates to mark their own papers.
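A simple first check for this pattern is to compare the average score a judge gives to its own outputs against the average it gives to everyone else's. The sketch below assumes scores are logged as `(judge, author, score)` tuples; the model names and numbers are invented for illustration, and `self_preference_gap` is a hypothetical helper.

```python
from statistics import mean
from typing import Sequence

# Assumed log format: (judge model, author model, score awarded).
ScoreRecord = tuple[str, str, float]


def self_preference_gap(records: Sequence[ScoreRecord], judge: str) -> float:
    """Mean score the judge gives its own outputs minus the mean it gives to others."""
    own = [score for j, author, score in records if j == judge and author == judge]
    others = [score for j, author, score in records if j == judge and author != judge]
    if not own or not others:
        raise ValueError("judge needs scores for both its own and other models' outputs")
    return mean(own) - mean(others)


# Invented example data: model_x judging its own and model_y's responses.
records = [
    ("model_x", "model_x", 9),
    ("model_x", "model_x", 8),
    ("model_x", "model_y", 6),
    ("model_x", "model_y", 7),
]
gap = self_preference_gap(records, "model_x")
print(f"self-preference gap: {gap:+.1f}")
```

A positive gap is a warning sign rather than proof, since the judging model's answers may simply be better. Comparing the gap against scores from human reviewers or an independent judge helps distinguish genuine quality from self-preference.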

Business Implications for AI Implementation

These systematic biases constitute a form of evaluation hallucination—judges producing inconsistent outputs for semantically equivalent inputs. For businesses, this translates to several critical considerations:

Evaluation Reliability: Automated AI assessment tools cannot be trusted as sole arbiters of quality. The research demonstrates that seemingly objective technical evaluations may reflect position preferences, length biases, or self-serving patterns rather than genuine quality differences.

Implementation Risks: Organisations deploying generative AI based purely on automated evaluation metrics face the risk of selecting inferior solutions that happen to align with judge biases rather than genuine business requirements.

Quality Assurance Necessity: The findings reinforce the critical importance of human oversight in AI deployment. Technical evaluation must be supplemented with domain expertise and business context that automated judges cannot provide.

Strategic Approaches for UK SMEs

For businesses navigating AI implementation, this research suggests several practical safeguards:

Diverse Evaluation Frameworks: Avoid reliance on single evaluation systems. Cross-reference multiple judges and prioritise human validation of critical decisions.

Bias Awareness in Procurement: When selecting AI solutions, recognise that vendor demonstrations may exploit these known biases to present inflated performance metrics.

Structured Testing Protocols: Implement evaluation processes that specifically test for position bias, verbosity preferences, and self-enhancement patterns before production deployment.
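As one illustration of cross-referencing multiple judges, the sketch below combines verdicts from a panel and routes any case without clear agreement to a human reviewer. The `panel_verdict` helper, the `"needs_human_review"` label, and the 75% agreement threshold are assumptions chosen for the example, not recommendations from the research.

```python
from collections import Counter
from typing import Sequence


def panel_verdict(verdicts: Sequence[str], min_agreement: float = 0.75) -> str:
    """Return the majority verdict, or flag the case for human review.

    `min_agreement` is an assumed threshold: the share of judges that must
    agree before the automated verdict is accepted.
    """
    if not verdicts:
        raise ValueError("need at least one verdict")
    label, count = Counter(verdicts).most_common(1)[0]
    if count / len(verdicts) >= min_agreement:
        return label
    return "needs_human_review"


# Three of four judges agree: the automated verdict stands.
clear_case = panel_verdict(["pass", "pass", "pass", "fail"])
# Judges split evenly: escalate to a person.
split_case = panel_verdict(["pass", "fail", "pass", "fail"])
print(clear_case, split_case)
```

Using judges built on different underlying models makes it less likely that they share the same blind spots, and the escalation path keeps a human in the loop for exactly the cases where automated judgement is least reliable.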

Our AI implementation service addresses these challenges through structured evaluation frameworks that combine automated assessment with expert human oversight, ensuring deployment decisions reflect genuine business value rather than systematic evaluation biases.

The Path Forward

The research makes clear that current LLM judges suffer from consistency failures that undermine their reliability for critical business decisions. Because LLM judges are widely used both to assess AI systems and to improve them, these fairness issues represent a systemic risk to effective AI deployment.

For UK businesses, the message is unambiguous: automated evaluation represents a useful tool, but one that requires careful calibration, diverse validation approaches, and sustained human expertise. The sophistication of evaluation systems should not be mistaken for infallibility—particularly when business outcomes depend on the reliability of those assessments.

Organisations that recognise these limitations and implement robust, multi-faceted evaluation approaches will be better positioned to extract genuine value from AI investments whilst avoiding the pitfalls of biased automated judgment.