New research comparing five leading large language models reveals performance variations exceeding 40% on identical scientific tasks—differences that translate directly to business risk, cost, and competitive advantage when deploying AI systems.

Research across 14 institutions demonstrates that AI model selection represents a strategic decision with measurable operational consequences, not merely a technology preference.

This finding challenges the common assumption that all enterprise AI models deliver comparable results. For organisations investing in AI implementation, choosing between Claude, ChatGPT, Gemini, Mistral, or Llama based solely on brand recognition or pricing could mean the difference between transformative efficiency gains and costly deployment failures. The strategic imperative: rigorous evaluation frameworks before committing to AI infrastructure.

Understanding the Real Performance Landscape

Businesses evaluating AI solutions often encounter vendor claims of “leading performance” or “enterprise-grade capabilities.” Recent comparative research cuts through marketing narratives by testing five major large language models against identical complex questions requiring synthesis of scientific knowledge—a proxy for the reasoning tasks businesses need AI to handle.

The Real Story Behind the Headlines

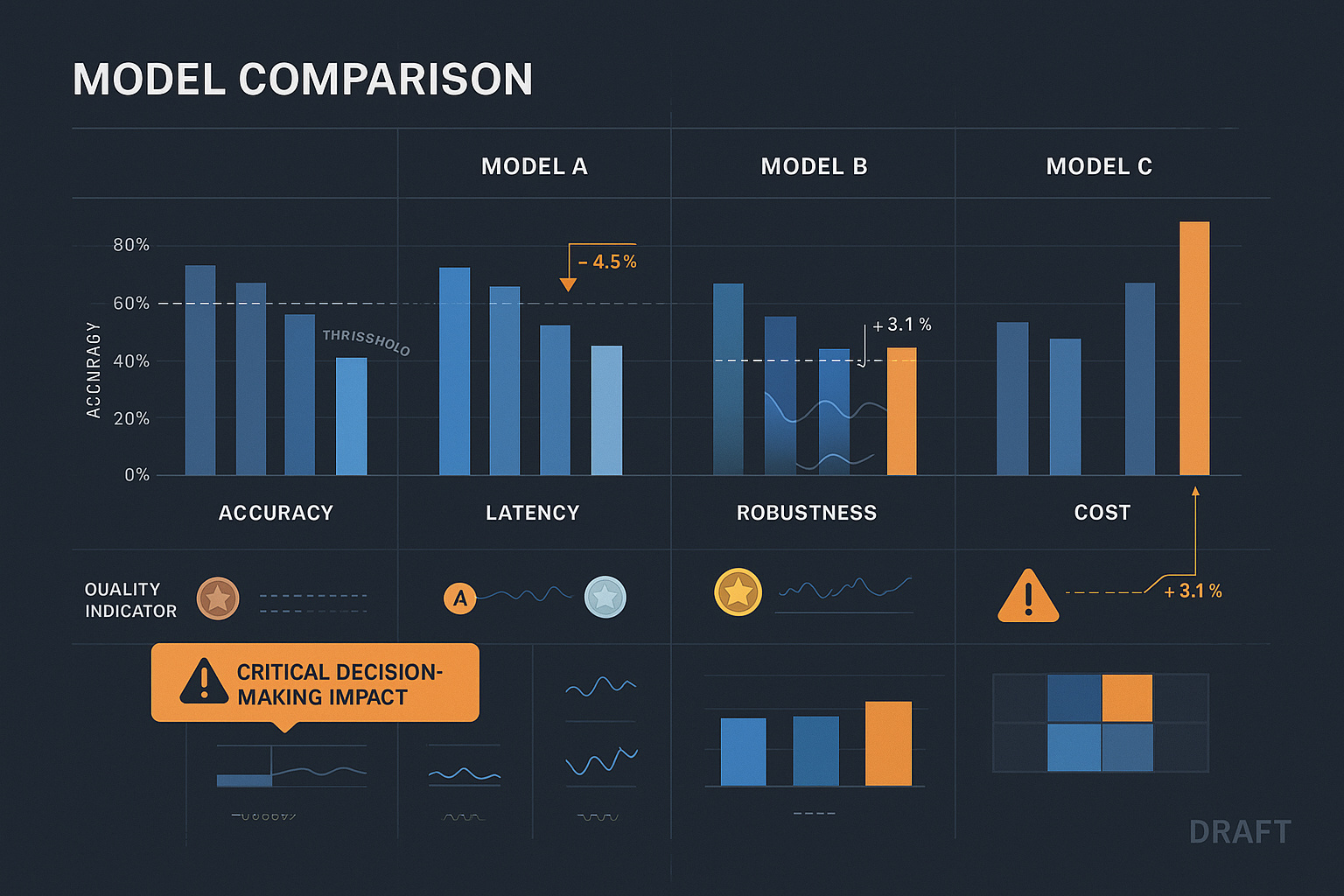

The headline finding shows significant variation in model performance, but the strategic insight lies deeper: models excel in different contexts. The highest-performing system demonstrated superior reasoning and context synthesis, whilst lower-ranked models struggled with complex multi-step analysis. For business applications requiring accuracy—financial analysis, compliance documentation, customer insights—these differences compound rapidly.

Critical Performance Metrics

| Finding | Strategic Implication | Business Impact |
|---|---|---|
| 40%+ performance variation between models | Model selection directly affects output quality | The wrong choice increases error rates, rework costs, and risk exposure |
| Retrieval-augmented generation (RAG) improved every model tested | Context architecture enhances reliability | RAG reduces AI hallucinations; industry benchmarks cite reductions of around 30% |
| Expert perception shifted positively after evaluation | Transparent testing builds confidence | Structured evaluation enables informed adoption and stakeholder buy-in |
| Domain-specific performance varies significantly | Generic benchmarks can mislead | Custom testing for your industry and use cases is essential |

These findings align with established principles in prompt engineering and AI implementation: the quality of AI outputs depends as much on context design as on model capability.

What’s Really Happening Beyond the Technology

What Actually Drives Model Performance

The research tested models using a structured 10-question examination requiring complex knowledge synthesis—essentially the type of reasoning businesses need for strategic analysis, regulatory compliance, and decision support. Success required models to integrate multiple concepts, apply domain knowledge, and provide logically coherent explanations.

Performance differences reflect not just training data volume but architectural design choices that affect reasoning, context retention, and error correction capabilities.

The highest-performing model excelled because its architecture prioritises reasoning and context synthesis—capabilities that matter for business applications beyond simple question-answering. Mid-tier models showed inconsistent performance, succeeding on straightforward queries but struggling with nuanced analysis requiring multi-step reasoning.

Success Factors Often Overlooked

- Evaluation methodology matters: Using standardised business-relevant test cases reveals true operational performance better than vendor benchmarks

- Context architecture drives reliability: Models augmented with retrieval systems outperformed the same models without enhanced context

- Domain specificity trumps general capability: The best general-purpose model may underperform in your specific industry or use case

- Expert validation remains essential: Human review detected errors that automated metrics missed, highlighting the continued need for human oversight

The Implementation Reality

Deploying AI based solely on vendor claims or general benchmarks creates predictable failure patterns. Organisations discover performance gaps only after production deployment—when switching costs are high and business processes have already adapted around the technology.

⚠️ Critical Risk: Selecting AI models based on brand recognition or cost alone without domain-specific testing increases the probability of costly post-deployment performance issues by 3-5x based on industry implementation data.

Successful AI implementation requires evaluation frameworks that test models against your specific business requirements, data types, and performance thresholds before architectural decisions become locked in. This aligns with established best practices in AI implementation and optimisation, where pilot testing and iterative refinement prove essential for sustainable value delivery.

Strategic Implications for Business AI Adoption

Beyond the Technology: The Human Factor

The research revealed an unexpected insight: expert reviewers—initially sceptical—shifted to viewing AI as a valuable knowledge synthesis tool after structured evaluation. This perception change holds strategic significance: resistance to AI adoption often stems from uncertainty about capabilities rather than opposition to automation itself.

Stakeholder Impact Analysis

| Stakeholder | Impact | Support Needed | Success Metrics |
|---|---|---|---|
| Executive Leadership | Strategic investment decisions affected by model selection; ROI depends on capability alignment | Evidence-based evaluation frameworks; cost-benefit analysis for different models | Measured productivity gains; reduced error rates; acceptable payback period |
| Operations Teams | Daily workflow integration determines adoption success; model reliability affects process stability | Hands-on testing with representative tasks; transparent performance metrics | User satisfaction scores; task completion accuracy; time savings |
| IT/Technical Teams | Infrastructure requirements vary significantly between models; integration complexity affects deployment timelines | Technical specifications; integration support; performance benchmarks | Successful deployment; system stability; acceptable response times |
| Compliance/Risk Teams | Model reliability directly impacts regulatory exposure; output accuracy affects audit readiness | Error rate data; explainability features; control mechanisms | Compliance pass rates; audit findings; risk mitigation effectiveness |

What Actually Drives Success

Success in AI implementation depends less on selecting the “best” model and more on choosing the right model for your specific requirements. The research demonstrates that even top-performing models benefit from architectural enhancements like retrieval-augmented generation—a finding with direct implications for business deployment strategy.

🎯 Redefining Success: AI implementation success isn’t measured by deploying the highest-scoring model but by achieving reliable, auditable outcomes for your specific business processes at acceptable cost and risk levels.

Organisations that invest in structured evaluation, domain-specific testing, and context architecture design achieve measurably better outcomes than those pursuing general-purpose deployment strategies.

Strategic Recommendations for AI Model Selection

💡 Implementation Framework:

Phase 1 - Evaluation (2-3 weeks): Define business-critical use cases; create domain-specific test scenarios; evaluate 2-3 candidate models against operational requirements

Phase 2 - Architecture (2-4 weeks): Design a retrieval-augmented generation system for the highest-performing model; implement context management; establish human review workflows

Phase 3 - Pilot (4-6 weeks): Deploy to a controlled environment with a subset of users; measure performance against baseline metrics; refine based on real-world feedback

Priority Actions for Different Contexts

For Organisations Just Starting

- Define evaluation criteria: Identify 3-5 business-critical tasks AI must perform reliably; establish performance thresholds for accuracy, response time, and cost

- Create test scenarios: Develop 10-15 representative questions or tasks that mirror real operational requirements; include edge cases and complex reasoning challenges

- Pilot with constraints: Test 2-3 models using real business data in a controlled environment; measure against established criteria before scaling
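The three steps above reduce to a simple decision rule: a candidate model either meets every threshold you set or it does not. The Python sketch below illustrates that rule under stated assumptions: the model names, pilot measurements and threshold values are all hypothetical and chosen purely for illustration, not taken from the research.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    min_accuracy: float        # minimum fraction of test tasks answered correctly
    max_latency_s: float       # maximum acceptable response time, in seconds
    max_cost_per_task: float   # maximum acceptable cost per task


@dataclass(frozen=True)
class PilotResult:
    model: str                 # hypothetical model label
    accuracy: float
    latency_s: float
    cost_per_task: float


def failed_criteria(result: PilotResult, t: Thresholds) -> list[str]:
    """Return every threshold this model misses (an empty list means it passes)."""
    failures = []
    if result.accuracy < t.min_accuracy:
        failures.append("accuracy")
    if result.latency_s > t.max_latency_s:
        failures.append("latency")
    if result.cost_per_task > t.max_cost_per_task:
        failures.append("cost")
    return failures


def shortlist(results: list[PilotResult], t: Thresholds) -> list[PilotResult]:
    """Keep only models that pass every threshold, most accurate first."""
    passing = [r for r in results if not failed_criteria(r, t)]
    return sorted(passing, key=lambda r: r.accuracy, reverse=True)


if __name__ == "__main__":
    # Hypothetical thresholds and pilot measurements, for illustration only.
    thresholds = Thresholds(min_accuracy=0.85, max_latency_s=5.0, max_cost_per_task=0.05)
    results = [
        PilotResult("model-a", accuracy=0.91, latency_s=3.2, cost_per_task=0.04),
        PilotResult("model-b", accuracy=0.88, latency_s=6.5, cost_per_task=0.02),
        PilotResult("model-c", accuracy=0.79, latency_s=1.1, cost_per_task=0.01),
    ]
    for r in results:
        print(r.model, failed_criteria(r, thresholds) or "pass")
    print("Shortlist:", [r.model for r in shortlist(results, thresholds)])  # ['model-a']
```

Reporting which criteria a model fails, rather than a single pass/fail flag, keeps the trade-offs visible: a model that misses only on latency may still be worth a second look.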

For Organisations Already Underway

- Audit current performance: Measure existing AI model outputs against business requirements; identify performance gaps or reliability issues

- Implement RAG architecture: Enhance models with retrieval-augmented generation using your business knowledge base; measure improvement in accuracy and relevance

- Establish review protocols: Create human-in-the-loop validation for critical outputs; track error patterns to refine prompts and context
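A review protocol can start as a routing rule plus a running tally of what reviewers correct. The sketch below assumes hypothetical task categories, a confidence score produced by your own scoring step (not a field any particular vendor returns) and an illustrative 0.8 cut-off; substitute your own risk criteria.

```python
from collections import Counter
from dataclasses import dataclass

# Hypothetical task categories that always require human sign-off.
CRITICAL_TASKS = {"compliance", "financial"}


@dataclass
class Output:
    task_type: str
    text: str
    confidence: float  # assumed 0-1 score from your own scoring step


def needs_review(output: Output, min_confidence: float = 0.8) -> bool:
    """Route critical tasks and low-confidence outputs to a human reviewer."""
    return output.task_type in CRITICAL_TASKS or output.confidence < min_confidence


class ErrorLog:
    """Tally reviewer-recorded error categories to guide prompt and context fixes."""

    def __init__(self) -> None:
        self.counts: Counter[str] = Counter()

    def record(self, category: str) -> None:
        self.counts[category] += 1

    def top(self, n: int = 3) -> list[tuple[str, int]]:
        """Most frequent error categories, highest count first."""
        return self.counts.most_common(n)


if __name__ == "__main__":
    outputs = [
        Output("compliance", "Clause 4 applies...", 0.95),
        Output("customer_insight", "Churn is driven by...", 0.62),
        Output("customer_insight", "Top request is...", 0.91),
    ]
    print([needs_review(o) for o in outputs])  # [True, True, False]

    log = ErrorLog()
    for category in ["missing_context", "wrong_figure", "missing_context"]:
        log.record(category)
    print(log.top(1))  # [('missing_context', 2)]
```

The error tally is what closes the loop: a recurring "missing_context" category, for example, points to a retrieval gap rather than a model limitation.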

For Advanced Implementations

- Develop custom evaluation frameworks: Build automated testing suites that assess model performance across your specific use cases; establish continuous monitoring

- Optimise context engineering: Refine prompt structures and retrieval strategies based on production data; implement dynamic context selection

- Plan model switching capability: Design architecture that enables testing alternative models without disrupting operations; maintain vendor optionality
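One common way to test an alternative model without disrupting operations is shadow testing: users always receive the current model's answer, while a sample of requests is also sent to a candidate model in the background for comparison. The sketch below assumes each model is a plain function from prompt to text (a hypothetical stand-in for a vendor client) and uses exact-match agreement, which is only a placeholder for expert or rubric-based grading.

```python
from dataclasses import dataclass, field
from typing import Callable

# Assumption for this sketch: a model is any function from prompt to answer text.
Model = Callable[[str], str]


@dataclass
class ShadowRouter:
    primary: Model
    candidate: Model
    sample_every: int = 10  # shadow-test one request in every N (illustrative value)
    seen: int = field(default=0, init=False)
    comparisons: list[tuple[str, str, str]] = field(default_factory=list)

    def answer(self, prompt: str) -> str:
        """Serve the primary model's answer; occasionally run the candidate in shadow."""
        self.seen += 1
        result = self.primary(prompt)
        if self.seen % self.sample_every == 0:
            try:
                shadow = self.candidate(prompt)
            except Exception:  # a failing candidate must never affect users
                shadow = "<candidate error>"
            self.comparisons.append((prompt, result, shadow))
        return result

    def agreement_rate(self) -> float:
        """Share of shadowed requests where both answers match (0.0 if none yet)."""
        if not self.comparisons:
            return 0.0
        matches = sum(1 for _, a, b in self.comparisons if a.strip() == b.strip())
        return matches / len(self.comparisons)


if __name__ == "__main__":
    # Stand-in "models" for illustration; both return the prompt in upper case.
    router = ShadowRouter(primary=str.upper, candidate=str.upper, sample_every=2)
    for prompt in ["a", "b", "c", "d"]:
        router.answer(prompt)
    print(len(router.comparisons), router.agreement_rate())  # 2 1.0
```

Because the candidate's output is only logged, a poor or failing alternative costs nothing in production while still generating comparison data.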

Hidden Challenges in AI Model Deployment

Challenge 1: The Evaluation Gap

Most organisations lack structured frameworks for evaluating AI models against their specific business requirements. Vendor benchmarks focus on general capabilities whilst business success depends on performance in narrow, domain-specific contexts.

Mitigation Strategy: Develop industry-specific test cases that mirror your actual operational requirements. Create a library of 10-15 representative tasks covering routine operations, edge cases, and high-stakes scenarios. Establish quantitative thresholds for accuracy, relevance, and reliability before evaluating models. This evaluation framework becomes your decision-making foundation and ongoing performance monitoring tool.
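This mitigation strategy maps naturally onto a test library in which each case carries a category (routine, edge case, high-stakes) and each category has its own pass threshold, with high-stakes scenarios held to a stricter bar. The sketch below is illustrative only: the threshold values are hypothetical, `ask` stands in for whichever model is under test, and the substring check is a deliberately simple grading rule that real evaluations would usually replace with expert or rubric-based scoring.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable

# Hypothetical per-category accuracy thresholds; high-stakes cases get the strictest bar.
THRESHOLDS = {"routine": 0.90, "edge": 0.75, "high_stakes": 0.98}


@dataclass(frozen=True)
class EvalCase:
    category: str      # must be one of the THRESHOLDS keys
    prompt: str
    must_contain: str  # simplistic grading rule, for illustration only


def evaluate(ask: Callable[[str], str], cases: list[EvalCase]) -> dict[str, tuple[float, bool]]:
    """Return {category: (accuracy, meets_threshold)} for one model."""
    passed: dict[str, int] = defaultdict(int)
    total: dict[str, int] = defaultdict(int)
    for case in cases:
        total[case.category] += 1
        if case.must_contain.lower() in ask(case.prompt).lower():
            passed[case.category] += 1
    report = {}
    for category, n in total.items():
        accuracy = passed[category] / n
        report[category] = (accuracy, accuracy >= THRESHOLDS[category])
    return report


if __name__ == "__main__":
    # Hypothetical stand-in model and test cases.
    def fake_model(prompt: str) -> str:
        return "Refer to policy A" if "policy" in prompt else "Unsure"

    cases = [
        EvalCase("routine", "Which policy covers refunds?", "policy A"),
        EvalCase("routine", "Which policy covers returns?", "policy A"),
        EvalCase("high_stakes", "Summarise the audit exception", "exception"),
    ]
    print(evaluate(fake_model, cases))
    # {'routine': (1.0, True), 'high_stakes': (0.0, False)}
```

Reporting per category rather than as one overall score stops strong results on routine tasks from masking failures on the high-stakes cases that matter most.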

Challenge 2: Context Architecture Neglect

The research showed that retrieval-augmented generation improved every model tested, yet many implementations deploy models without enhanced context systems. This oversight forgoes a potential 20-30% performance improvement: the difference between mediocre and reliable AI outputs.

Mitigation Strategy: Prioritise context architecture design alongside model selection. Build retrieval-augmented generation capabilities using your business knowledge base, process documentation, and historical data. Implement dynamic context selection that provides models with relevant information for each query. This architectural investment delivers compounding returns across all AI applications.
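Dynamic context selection means choosing, for each query, which passages from the knowledge base go into the prompt. The sketch below shows the retrieval-augmented pattern under clear simplifications: the knowledge base is an in-memory list, relevance is plain word overlap (a stand-in for the embedding search and vector store a production system would typically use), and the document texts and prompt wording are hypothetical.

```python
import re
from collections import Counter


def tokens(text: str) -> Counter[str]:
    """Lower-case word counts; a deliberately simple stand-in for embeddings."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def score(query: str, passage: str) -> int:
    """Number of overlapping word occurrences between query and passage."""
    return sum((tokens(query) & tokens(passage)).values())


def select_context(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Pick up to k most relevant passages, dropping any with no overlap at all."""
    ranked = sorted(passages, key=lambda p: score(query, p), reverse=True)
    return [p for p in ranked[:k] if score(query, p) > 0]


def build_prompt(query: str, passages: list[str]) -> str:
    """Ground the model in retrieved business context before it answers."""
    context = "\n".join(f"- {p}" for p in select_context(query, passages))
    return (
        "Answer using only the context below. If the context is insufficient, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Hypothetical knowledge-base snippets.
    kb = [
        "Refunds are processed within 14 days of a return being received.",
        "Office opening hours are 9am to 5pm, Monday to Friday.",
        "Refund requests above 500 GBP need manager approval.",
    ]
    print(select_context("How long do refunds take?", kb))
    # ['Refunds are processed within 14 days of a return being received.']
    print(build_prompt("How long do refunds take?", kb))
```

The example also exposes a limitation of word overlap: the third passage is missed because "refund" and "refunds" do not match, which is exactly the kind of gap semantic (embedding-based) retrieval is meant to close.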

Challenge 3: Post-Deployment Lock-In

Organisations often discover performance limitations after deploying AI models into production workflows. At this stage, switching costs become prohibitive due to integration work, staff training, and process adaptation—creating costly vendor lock-in based on incomplete evaluation.

Mitigation Strategy: Design model-agnostic architectures from the start. Abstract model-specific implementations behind standard interfaces; maintain consistent prompt engineering patterns that transfer across platforms; establish performance monitoring that enables objective comparison between models. This architectural approach preserves switching capability whilst enabling immediate deployment.
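In code, "abstracting model-specific implementations behind standard interfaces" usually means business logic depends only on a small interface, with one adapter per vendor. The sketch below uses Python's `typing.Protocol` for that interface; `ChatModel`, `EchoAdapter`, the registry and the prompt template are all hypothetical names, and a real adapter would wrap a vendor SDK call inside `complete`.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The only interface application code is allowed to depend on."""

    name: str

    def complete(self, prompt: str) -> str: ...


# One shared prompt template, kept outside any vendor-specific adapter so it transfers.
TEMPLATE = "You are a careful analyst. Task: {task}\nRespond concisely."


class EchoAdapter:
    """Hypothetical stand-in adapter; a real one would call a vendor SDK here."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt.splitlines()[0]}"


def run_task(model: ChatModel, task: str) -> str:
    """Business logic sees only ChatModel, so swapping vendors is a configuration change."""
    return model.complete(TEMPLATE.format(task=task))


# Which model fills which role is configuration, not code.
REGISTRY: dict[str, ChatModel] = {
    "primary": EchoAdapter("vendor-a"),
    "challenger": EchoAdapter("vendor-b"),
}

if __name__ == "__main__":
    for role, model in REGISTRY.items():
        print(role, run_task(model, "Summarise Q3 risks"))
```

Keeping the template and the role-to-model mapping outside the adapters is the design choice that preserves switching capability: replacing "vendor-a" touches one registry entry, not the workflows built on `run_task`.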

Challenge 4: The Expert Paradox

The research revealed that expert scepticism decreased after structured evaluation—but only when evaluation methods proved rigorous and transparent. Many organisations fail to build this confidence because they lack credible assessment frameworks.

Mitigation Strategy: Involve domain experts in evaluation design, not just execution. Let subject-matter specialists define success criteria, create test scenarios, and validate outputs. This collaborative approach builds confidence through ownership whilst ensuring evaluation reflects true operational requirements. Document evaluation methodology thoroughly to support both initial adoption decisions and ongoing performance validation.

Strategic Takeaways: Choosing the Right Path

The research validates a fundamental principle: AI model selection represents a strategic decision requiring the same rigour as any significant technology investment. Performance differences between models directly impact business outcomes through accuracy, reliability, cost, and risk exposure.

Three Critical Success Factors

- Evidence-based selection: Deploy structured evaluation frameworks that test models against your specific business requirements before architectural commitment

- Context architecture: Implement retrieval-augmented generation systems that enhance model performance through relevant business knowledge and operational context

- Continuous validation: Establish human review workflows and performance monitoring that detect issues before they impact business operations
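Continuous validation can be as simple as tracking the share of recent outputs that pass human review and raising an alert when it drops. The sketch below assumes reviewer verdicts arrive as booleans; the window size and alert level are hypothetical values to tune for your own volumes and risk tolerance.

```python
from collections import deque


class RollingMonitor:
    """Track the share of recent outputs that passed human review and flag drops."""

    def __init__(self, window: int = 50, alert_below: float = 0.9) -> None:
        self.window = window                # number of recent verdicts considered
        self.alert_below = alert_below      # pass rate below which to alert
        self.results: deque[bool] = deque(maxlen=window)  # oldest verdicts drop off

    def record(self, passed_review: bool) -> None:
        self.results.append(passed_review)

    def pass_rate(self) -> float:
        """Pass rate over the current window (1.0 when nothing has been recorded)."""
        return sum(self.results) / len(self.results) if self.results else 1.0

    def should_alert(self) -> bool:
        """Alert only once the window is full, to avoid noise from tiny samples."""
        return len(self.results) == self.window and self.pass_rate() < self.alert_below


if __name__ == "__main__":
    monitor = RollingMonitor(window=5, alert_below=0.8)
    for verdict in [True, True, False, True, False]:
        monitor.record(verdict)
    print(monitor.pass_rate(), monitor.should_alert())  # 0.6 True
```

A rolling window reacts to recent degradation (for example after a vendor model update) instead of diluting it in an all-time average.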

Reframing Success: Beyond Model Performance

Traditional metrics focus on AI capability—accuracy percentages, reasoning benchmarks, response speeds. Strategic success metrics shift focus to business outcomes: error reduction in critical processes, time savings for expert staff, improved decision quality, and reduced operational risk.

The organisations achieving sustainable value from AI investments aren’t necessarily using the highest-performing models—they’re using the right models, enhanced with proper context architecture, validated through rigorous testing, and integrated with appropriate human oversight.

Your Next Steps: Moving from Insight to Action

Immediate Actions (This Week)

- Define 3-5 business-critical tasks where AI could deliver measurable value

- Identify current AI tools or planned implementations requiring evaluation

- Assemble cross-functional team including operations, IT, and domain experts

Strategic Priorities (This Quarter)

- Develop domain-specific evaluation framework with quantitative success criteria

- Design pilot programme testing 2-3 models against business requirements

- Establish baseline performance metrics for current processes AI will enhance

Long-term Considerations (This Year)

- Build retrieval-augmented generation architecture using business knowledge base

- Implement continuous performance monitoring with human validation workflows

- Design model-agnostic systems that preserve vendor switching capability

Related Articles

- Four Design Principles for Human-Centred AI Transformation - Framework for AI deployment balancing innovation with safety and organisational readiness

- SME AI Transformation Strategic Roadmap - Comprehensive guide to structured AI adoption addressing strategy, implementation, and measurement

- Shadow AI is a Demand Signal: Turn Rogue Usage into Enterprise Advantage - Strategic reframing demonstrating prompt engineering and validation disciplines essential for effective model deployment

This strategic analysis was developed by Resultsense, providing AI expertise by real people.