The councils that modernise their approach to data will be the ones that modernise their services fastest. That’s the central finding from research by the Open Data Institute (ODI) and Nortal, which assessed AI readiness across ten UK local authorities. The results carry a lesson that every organisation considering AI investment needs to understand: AI readiness is not binary, and data that works for one application may fail completely for another.

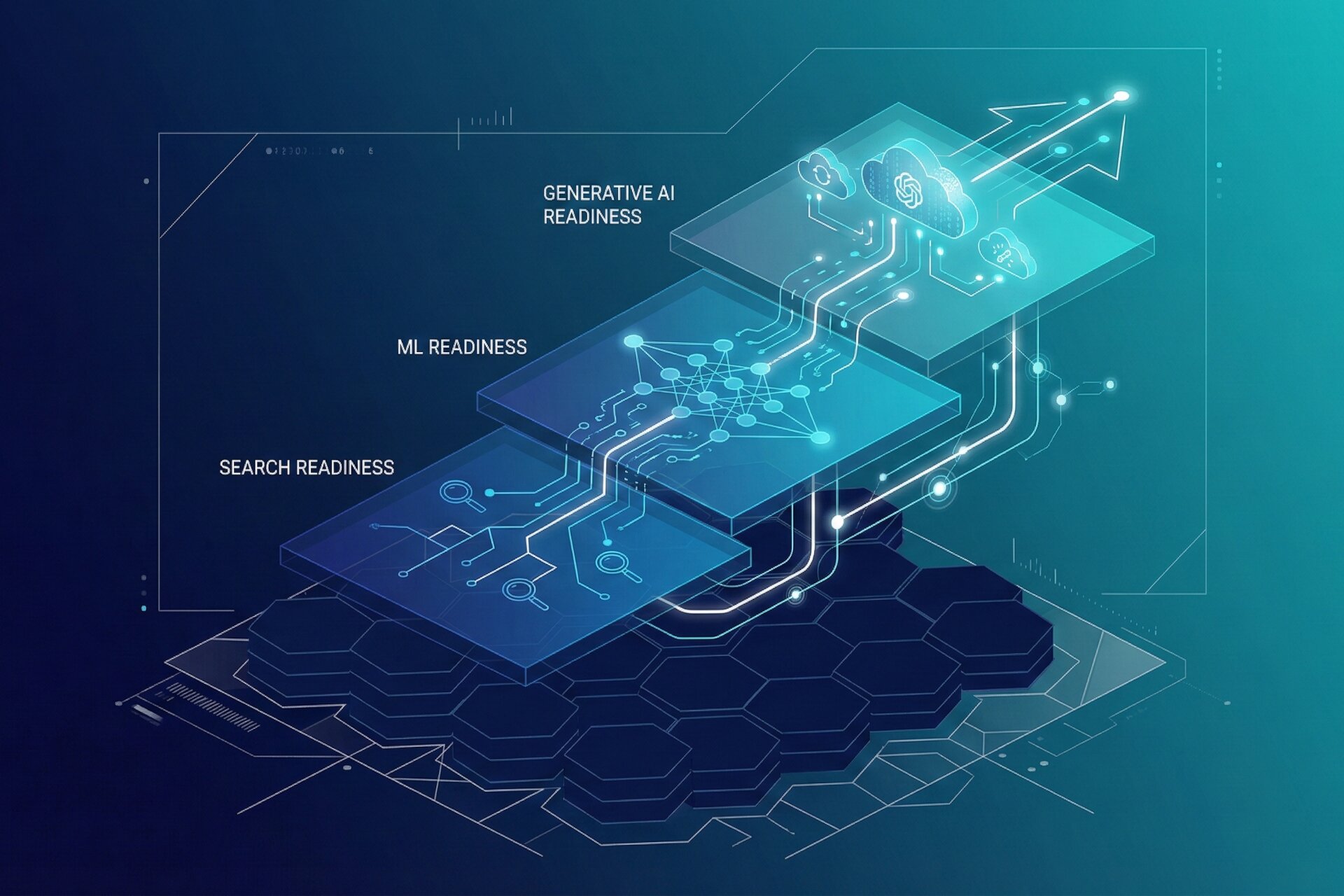

The Real Story: Three Dimensions of AI Readiness

The research challenges the common misconception that data is either “AI-ready” or it isn’t. In practice, readiness is conditional on the intended use case. A dataset optimised for financial reporting may be entirely unsuitable for predictive analytics. A search-ready database may fail completely when applied to conversational AI. This distinction has profound implications for how organisations should prioritise their AI investments.

Strategic Reality: AI is not monolithic. Search, predictive modelling, and large language models each place different demands on data—even when they share common ground in metadata, formats, and governance.

The ODI-Nortal framework identifies three distinct dimensions of AI readiness that councils must navigate:

| Readiness Dimension | Primary Purpose | Core Requirements | Business Value |
|---|---|---|---|
| Search Readiness | Enable AI-powered pattern discovery within datasets | Clean metadata, canonical identifiers, machine-readable schemas | Faster analysis, better audits, transparent access |
| ML Readiness | Support reproducible predictive modelling | Structured columnar data, bias documentation, automated pipelines | Foresight, planning, resource allocation |
| Generative AI Readiness | Enable conversational interfaces and autonomous data navigation | Rich contextual metadata, chunked text, retrieval APIs | Citizen engagement, staff efficiency, explainability |

What’s Really Happening: The Implementation Reality

The research examined ten high-impact use cases where councils are exploring or piloting AI—from predicting rent arrears in Leeds to forecasting budgets in Ealing, from vulnerability data linking in Tameside to fire risk modelling in London. The findings reveal a mixed picture with important lessons for any organisation pursuing AI adoption.

The Good News: Search and ML Readiness Are Achievable

Several councils demonstrated genuine progress. Dorset Council, with over a decade of experience using the Formulate AI tool for social care budget estimation, shows how structured datasets aligned to standards like ISO 8601 and SNOMED CT can enable meaningful predictive capabilities. The council’s responsible AI framework and attention to ethics provide a model others can follow.

Success Factor: Dorset’s alignment to healthcare terminology standards (SNOMED CT) makes its datasets applicable across health and social care contexts, a critical advantage for joined-up service delivery.

Bristol City Council’s Think Family Database demonstrates how moderately prepared datasets can deliver major improvements when combined with predictive modelling. Its NEET (not in education, employment or training) risk-scoring model, trained on multi-agency data from education, social care, police, and welfare agencies, enabled earlier interventions and reduced workload for safeguarding staff.

The Greater London Authority’s fire incident dataset stands out nationally, with over 70 coded variables, ISO-compatible time formats, and a dedicated data dictionary for each dataset. It represents best practice among public sector data portals and shows that ML readiness which translates into tangible operational outcomes is achievable.

The Challenge: Persistent Infrastructure Gaps

However, the research reveals that many councils still publish data in formats designed for reporting rather than advanced technical use. Key barriers include:

- Format Limitations: Critical indicators published in PDFs and XLSX files requiring manual extraction before analysis

- Metadata Scarcity: Machine-readable metadata is virtually non-existent across the cases studied

- Infrastructure Gaps: Missing APIs, absent version control, and limited query capabilities

- Governance Challenges: Skills gaps and unclear data ownership compound technical issues

Critical Context: Essex County Council’s energy data demonstrates how third-party data services can limit AI readiness. Although the consumption records themselves are structured, proprietary formats, limited metadata, and a lack of open infrastructure constrain potential applications.

The Emerging Frontier: Generative AI Readiness Remains Distant

The most significant finding is that generative AI readiness remains the furthest away for most councils. Without contextual annotations, segmentation, and retrieval infrastructure, councils cannot yet deploy conversational tools that explain risks in plain language or connect patterns across multiple datasets.

This matters because generative AI offers the potential to transform how councils interact with citizens. Imagine a resident being able to ask “What are my options for council tax support?” and receiving a grounded, accurate answer that considers their specific circumstances. That capability requires data infrastructure that simply doesn’t exist in most local authorities today.
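To make “chunked text” and “retrieval infrastructure” concrete, here is a minimal Python sketch of the retrieval step that sits behind a grounded answer. It assumes plain-text guidance pages, splits them into fixed-size word chunks that keep their source for citation, and ranks chunks against a resident’s question. A bag-of-words cosine similarity stands in for a real embedding model; the page names, chunk size, and scoring are hypothetical, and a production system would use embeddings and a vector store instead.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str  # where the text came from, kept so answers can cite it


def chunk_text(text: str, source: str, max_words: int = 40) -> list[Chunk]:
    """Split text into chunks of at most max_words words, each tagged with its source."""
    words = text.split()
    return [
        Chunk(" ".join(words[i:i + max_words]), source)
        for i in range(0, len(words), max_words)
    ]


def vectorise(text: str) -> Counter:
    """Bag-of-words term counts: a crude stand-in for an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    """Return the k chunks most similar to the query."""
    q = vectorise(query)
    return sorted(chunks, key=lambda c: cosine(q, vectorise(c.text)), reverse=True)[:k]


# Hypothetical guidance pages.
chunks = chunk_text(
    "Council tax support is available to residents on a low income. Apply online or by phone.",
    "council-tax-support.html",
)
chunks += chunk_text(
    "Bin collections take place weekly. Recycling is collected every two weeks.",
    "waste-collections.html",
)
best = retrieve("What council tax support can I get?", chunks, k=1)[0]
print(best.source)  # council-tax-support.html
```

The retrieved chunk, together with its source, is what a language model would be given as context; keeping the source attached is what allows the answer to be grounded and cited.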

Strategic Analysis: The Human Factor in Data Modernisation

The research highlights a crucial insight often missed in AI discussions: AI-ready data requires AI-ready decision-making. Every aspect of data readiness—format choices, metadata practices, infrastructure investments—reflects decisions made at some point in the dataset’s lifecycle.

| Stakeholder Group | Primary Concern | Required Action |
|---|---|---|
| Council Leadership | Service modernisation, cost efficiency | Champion data standards adoption in procurement and governance |
| IT and Data Teams | Technical implementation, maintenance burden | Build automated pipelines with version control and bias monitoring |
| Frontline Staff | Practical usability, time savings | Provide training on AI tools and feedback mechanisms for improvement |
| Citizens | Service quality, transparency | Demand explainable AI outcomes and accessible information |
| Suppliers | Contract requirements, data formats | Align to open standards and provide machine-readable metadata |

Implementation Note: The decision to create a data portal, adopt a standard, or document contextual information as metadata may seem routine—but these choices propagate into whether a council can deliver AI-enhanced services to residents.

Success Criteria for AI Data Readiness

Based on the research findings, organisations should evaluate their AI readiness against these criteria:

For Search Readiness:

- Datasets use canonical identifiers (e.g., UPRNs for properties)

- Metadata is attached directly to datasets, not buried in separate documentation

- Content is structured for semantic queries, not just keyword matching
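As an illustration of attaching metadata directly to a dataset and checking a canonical identifier, the Python sketch below builds a metadata document in the style of CSV on the Web (CSVW), a W3C recommendation, and validates UPRN values. CSVW is one possible choice, not one the research prescribes; the file name, the column list, and the rule that a UPRN is a positive integer of at most 12 digits are assumptions for illustration.

```python
import json
import re

# Assumption: a UPRN is a positive integer of up to 12 digits with no leading zero.
UPRN_PATTERN = re.compile(r"[1-9][0-9]{0,11}")


def is_valid_uprn(value: str) -> bool:
    """Return True if the value looks like a well-formed UPRN."""
    return UPRN_PATTERN.fullmatch(value) is not None


def csvw_metadata(csv_url: str, title: str, columns: list[tuple[str, str, str]]) -> dict:
    """Build a CSVW-style metadata document describing each column of a CSV file."""
    return {
        "@context": "http://www.w3.org/ns/csvw",
        "url": csv_url,
        "dc:title": title,
        "tableSchema": {
            "columns": [
                {"name": name, "titles": name, "datatype": datatype, "dc:description": description}
                for name, datatype, description in columns
            ]
        },
    }


metadata = csvw_metadata(
    "fire-incidents.csv",
    "Fire incidents (hypothetical example)",
    [
        ("uprn", "integer", "Unique Property Reference Number of the affected property"),
        ("incident_date", "date", "Date of the incident in ISO 8601 form (YYYY-MM-DD)"),
    ],
)
print(json.dumps(metadata, indent=2))
print(is_valid_uprn("100023336956"), is_valid_uprn("0123"))  # True False
```

Under CSVW’s default convention, saving this as `fire-incidents.csv-metadata.json` next to the CSV lets compatible tools find the definitions alongside the data rather than in separate documentation.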

For ML Readiness:

- Data is available in columnar formats suitable for model training

- Bias and imbalance are documented and visible

- Automated pipelines exist for data refresh and model retraining
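Documenting bias and imbalance can start with simple counts that travel with the dataset. The Python sketch below assumes a binary outcome label where 1 marks the positive case and a single grouping column; it summarises how the label and the groups are distributed and how the positive rate varies by group. The field names (`ward`, `in_arrears`) and the rows are hypothetical.

```python
from collections import Counter


def imbalance_report(rows: list[dict], label_key: str, group_key: str) -> dict:
    """Summarise label and group balance so it can be attached to dataset metadata."""
    total = len(rows)
    labels = Counter(row[label_key] for row in rows)
    groups = Counter(row[group_key] for row in rows)
    positive_rate_by_group = {}
    for group, count in groups.items():
        # Share of rows in this group with the positive label (1).
        positives = sum(1 for row in rows if row[group_key] == group and row[label_key] == 1)
        positive_rate_by_group[group] = round(positives / count, 3)
    return {
        "row_count": total,
        "label_share": {str(label): round(n / total, 3) for label, n in labels.items()},
        "group_share": {group: round(n / total, 3) for group, n in groups.items()},
        "positive_rate_by_group": positive_rate_by_group,
    }


# Hypothetical training rows: one record per tenancy.
rows = [
    {"ward": "North", "in_arrears": 1},
    {"ward": "North", "in_arrears": 0},
    {"ward": "North", "in_arrears": 0},
    {"ward": "South", "in_arrears": 1},
]
report = imbalance_report(rows, label_key="in_arrears", group_key="ward")
print(report)
```

A group with a very small share, or a large gap in `positive_rate_by_group`, is the kind of imbalance worth recording in the metadata before anyone trains a model on the data.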

For Generative AI Readiness:

- APIs support embedding-based retrieval

- Contextual metadata enables grounding of responses

- Policy-as-code governs data retention, privacy, and safety
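Policy-as-code means expressing rules such as retention periods and permitted uses in a form software can check automatically. The Python sketch below is a minimal illustration, not a recommended policy: the record categories, retention periods, and the flag controlling whether a record may be passed to a generative AI tool are all hypothetical. Unknown categories fail closed, so nothing reaches an AI tool without an explicit rule.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Policy:
    retain_days: int  # how long records in this category may be kept
    allow_in_ai_context: bool  # may records be passed to a generative AI tool?


# Hypothetical policies for illustration only; real periods come from retention schedules.
POLICIES = {
    "council_tax_enquiry": Policy(retain_days=365, allow_in_ai_context=True),
    "safeguarding_case": Policy(retain_days=365 * 7, allow_in_ai_context=False),
}


@dataclass(frozen=True)
class Decision:
    delete: bool
    ai_allowed: bool
    reason: str


def evaluate(category: str, created: date, today: date) -> Decision:
    """Apply the retention and AI-use policy for a record's category."""
    policy = POLICIES.get(category)
    if policy is None:
        # Fail closed: without a policy, keep the record for review but never expose it.
        return Decision(delete=False, ai_allowed=False, reason="no policy: needs review")
    expired = today - created > timedelta(days=policy.retain_days)
    return Decision(
        delete=expired,
        ai_allowed=policy.allow_in_ai_context and not expired,
        reason="retention period exceeded" if expired else "within retention period",
    )


print(evaluate("council_tax_enquiry", date(2024, 1, 10), date(2024, 6, 1)))
```

Because the rules are data plus a small function, they can be version-controlled, reviewed, and tested like any other code.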

Strategic Recommendations: A Practical Framework for Action

The research concludes with actionable guidance that applies beyond local government to any organisation pursuing AI adoption.

Priority Actions by Organisational Maturity

For Organisations Starting Their AI Journey:

- Audit existing data assets against the three readiness dimensions

- Prioritise search readiness as the foundation—it underpins transparency and enables other applications

- Convert key datasets from PDFs and spreadsheets to machine-readable formats

- Establish basic metadata standards for all new data collection

SME Advantage: Smaller organisations can move faster than large councils. The frameworks and standards identified in this research apply regardless of scale—and implementation complexity increases with organisational size.

For Organisations with Established Data Practices:

- Implement automated pipelines for data refresh and quality monitoring

- Document bias and representativeness in metadata attached to datasets

- Enable API access for programmatic queries

- Establish version control for reproducible analysis
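A lightweight way to support reproducible analysis is to fingerprint each dataset snapshot and record which version an analysis used. The Python sketch below is a hypothetical helper that keeps an in-memory log keyed by a SHA-256 hash of the file contents and adds a new version only when the content changes. It is illustrative only; in practice Git, or a dedicated data versioning tool, would manage this history.

```python
import hashlib


def record_version(log: list[dict], name: str, content: bytes, note: str) -> dict:
    """Record a dataset snapshot, adding a new version only if the content changed."""
    digest = hashlib.sha256(content).hexdigest()
    history = [entry for entry in log if entry["dataset"] == name]
    if history and history[-1]["sha256"] == digest:
        return history[-1]  # unchanged content: reuse the existing version
    entry = {"dataset": name, "version": len(history) + 1, "sha256": digest, "note": note}
    log.append(entry)
    return entry


# Hypothetical dataset and refreshes.
log: list[dict] = []
v1 = record_version(log, "rent-arrears.csv", b"uprn,arrears\n100023336956,120\n", "initial load")
v1_again = record_version(log, "rent-arrears.csv", b"uprn,arrears\n100023336956,120\n", "nightly refresh")
v2 = record_version(log, "rent-arrears.csv", b"uprn,arrears\n100023336956,95\n", "nightly refresh")
print(v1["version"], v1_again["version"], v2["version"])  # 1 1 2
```

An analysis that stores the `sha256` value alongside its results can later confirm it is being rerun on exactly the same data.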

For Organisations Pursuing Advanced AI:

- Invest in contextual metadata that enables generative AI grounding

- Build retrieval infrastructure (vector databases, embedding-ready APIs)

- Develop policy-as-code for AI governance

- Create feedback loops between AI outputs and data improvement

The Dual-Track Data Strategy

A critical insight from the Kent and Leeds case studies is the need for two parallel data strategies:

Strategic Insight: Operational data (sensitive, real-time, multi-agency) requires robust governance, pseudonymisation, and controlled access. Strategic data (aggregated, thematic, public) must prioritise accessibility, interoperability, and reproducibility.

This distinction matters because the AI-readiness requirements differ fundamentally between these data classes. Treating all data the same leads to either excessive restriction of useful public data or inadequate protection of sensitive operational data.
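One common technique for pseudonymising operational data is a keyed hash: the same identifier always maps to the same pseudonym, so records can still be linked across agencies, but the mapping cannot be reversed without the secret key. The sketch below uses HMAC-SHA256 from Python’s standard library; the identifier format and normalisation rule are assumptions, and the key would need to be held separately from the data. Pseudonymised data of this kind is still personal data under UK GDPR, so controlled access still applies.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a stable pseudonym using HMAC-SHA256."""
    # Assumption: identifiers compare case- and space-insensitively, so normalise first.
    normalised = identifier.replace(" ", "").upper()
    return hmac.new(secret_key, normalised.encode("utf-8"), hashlib.sha256).hexdigest()


key = b"replace-with-a-key-from-a-secrets-store"  # hypothetical; never hard-code real keys
education_id = pseudonymise("AB 12 34 56 C", key)
social_care_id = pseudonymise("ab123456c", key)
print(education_id == social_care_id)  # True: records link without sharing the raw ID
```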

The Hidden Challenges: Four Non-Obvious Barriers

1. The Standards Adoption Gap

Even when standards exist (ISO 8601 for dates, SNOMED CT for healthcare), adoption is inconsistent. Different departments within the same council may use incompatible conventions, making data integration costly and error-prone.

Mitigation: Embed standards requirements in procurement contracts and data governance policies. Make compliance a condition of new system implementations.
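To show what standards alignment looks like at the data level, the Python sketch below converts dates recorded under different departmental conventions into ISO 8601 (YYYY-MM-DD). The list of accepted formats is hypothetical, and its order encodes an assumption: slash- and dash-separated dates are read day-first, as is usual in the UK, so a US-style month-first value would be misread. Month abbreviations (`%b`) assume an English locale. Values that match no known format raise an error rather than being guessed.

```python
from datetime import datetime

# Hypothetical conventions found across departments. Order matters: slash and dash
# dates are read day-first (UK convention), so month-first values would be misread.
KNOWN_FORMATS = ["%d/%m/%Y", "%d-%m-%Y", "%Y-%m-%d", "%d %b %Y", "%Y%m%d"]


def to_iso8601(raw: str) -> str:
    """Convert a date string in any known departmental format to ISO 8601 (YYYY-MM-DD)."""
    value = raw.strip()
    for fmt in KNOWN_FORMATS:
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue  # try the next known format
    raise ValueError(f"Unrecognised date format: {raw!r}")


for raw in ["15/03/2024", "15-03-2024", "2024-03-15", "15 Mar 2024", "20240315"]:
    print(raw, "->", to_iso8601(raw))
```

Rejecting unrecognised values, rather than silently guessing, keeps integration errors visible at the point of conversion.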

2. The Third-Party Data Trap

Many councils rely on external suppliers for critical datasets. When those suppliers use proprietary formats or restrict API access, AI readiness is limited regardless of the council’s internal capabilities.

Mitigation: Negotiate open data formats and API access in vendor contracts. Require machine-readable metadata as a standard deliverable.

3. The Metadata Paradox

Creating metadata takes time and expertise that data teams often lack. Yet without metadata, the value of datasets for AI is severely constrained—creating a cycle where investment in metadata never gets prioritised.

Mitigation: Start with the most frequently used datasets. Automate metadata generation where possible. Build metadata creation into data collection workflows, not as an afterthought.
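Automating metadata generation can be as simple as profiling a CSV file to draft column types and missing-value counts, leaving only the definitions for a person to write. The Python sketch below does this with the standard library; the type inference rules are a simplified assumption, and the sample data is hypothetical.

```python
import csv
import io
from datetime import date


def infer_type(values: list[str]) -> str:
    """Guess a column type from its non-empty values (simplified rules)."""
    non_empty = [v for v in values if v.strip()]
    if not non_empty:
        return "string"
    # Try progressively looser parsers; the first that accepts every value wins.
    for type_name, parser in [("integer", int), ("number", float), ("date", date.fromisoformat)]:
        try:
            for v in non_empty:
                parser(v)
            return type_name
        except ValueError:
            continue
    return "string"


def draft_metadata(csv_text: str) -> dict:
    """Profile a CSV file and draft column metadata for a person to complete."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    columns = []
    for name in reader.fieldnames or []:
        values = [row.get(name) or "" for row in rows]
        columns.append({
            "name": name,
            "datatype": infer_type(values),
            "missing": sum(1 for v in values if not v.strip()),
            "description": "TODO: definition to be written by the data owner",
        })
    return {"row_count": len(rows), "columns": columns}


sample = "uprn,incident_date,cost\n100023336956,2024-03-15,120.5\n100023336957,2024-03-16,\n"
print(draft_metadata(sample))
```

Running a profile like this inside the collection workflow means every new dataset starts with draft metadata, and the remaining human effort is focused on the definitions that only a data owner can supply.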

4. The Pilot-to-Production Gap

Several councils demonstrated successful AI pilots that subsequently failed to scale or were decommissioned. The gap between proof-of-concept and sustainable production systems requires infrastructure investments that pilot budgets rarely cover.

Warning: ⚠️ Ealing Council’s budget forecasting model was decommissioned within two years despite early promise—high maintenance costs and perceived inaccuracies undermined trust. The lesson: pilot success does not guarantee production viability.

Mitigation: Include infrastructure and maintenance costs in AI business cases from the start. Require clear criteria for when pilots should be scaled versus retired.

Strategic Takeaway: A Toolkit, Not a Ladder

The research fundamentally reframes AI readiness from a single maturity ladder to a toolkit of options. Search readiness, ML readiness, and generative AI readiness represent distinct dimensions of capability that serve different purposes and deliver different types of value.

Three Success Factors for AI Data Readiness

- Match ambitions to infrastructure: Choose the readiness dimension that fits your current capability and strategic priorities. Attempting generative AI without search readiness foundations wastes resources.

- Invest in decisions, not just technology: AI-ready data requires AI-ready decision-making across governance, procurement, and operational practices.

- Recognise the dual-track reality: Operational and strategic data have fundamentally different requirements. Design your data strategy accordingly.

Your Next Steps Checklist

- Assess your current data assets against the three readiness dimensions

- Identify which AI applications align with your strategic priorities

- Audit metadata practices—are definitions attached to datasets or buried in documentation?

- Review vendor contracts for data format and API access provisions

- Establish governance frameworks that support AI-ready decision-making

- Build pilot-to-production pathways that include infrastructure investment

Source: Simperl, E., Majithia, N., Carey-Wilson, T., Kuldmaa, A., & Liivak, P. (2025). Insights from UK councils on standards, readiness and reform to modernise public data for AI. Nortal & Open Data Institute. Licensed under Creative Commons Attribution 4.0 International (CC BY 4.0).

Strategic analysis by Resultsense. We help organisations navigate AI adoption with practical strategies that deliver measurable outcomes. Our AI Strategy Blueprint service provides the clarity you need to move from AI ambition to implementation, whilst our AI Risk Management Service ensures safe, confident AI use across your team.