6 Proven Lessons from AI Projects That Broke Before They Scaled

TL;DR: Analysis of failed AI proof-of-concepts reveals misaligned goals, poor planning, and unrealistic expectations cause more failures than technology quality. Common pitfalls include vague objectives, prioritising data quantity over quality, overcomplicated models, ignoring deployment realities, neglecting maintenance, and underestimating stakeholder buy-in.

The road to production-level AI deployment is littered with failed proof-of-concepts and abandoned projects. In life sciences particularly, where AI supports the development of new treatments or the diagnosis of disease, there is little tolerance for iteration: even slightly inaccurate early assumptions can cause worrying drift downstream.

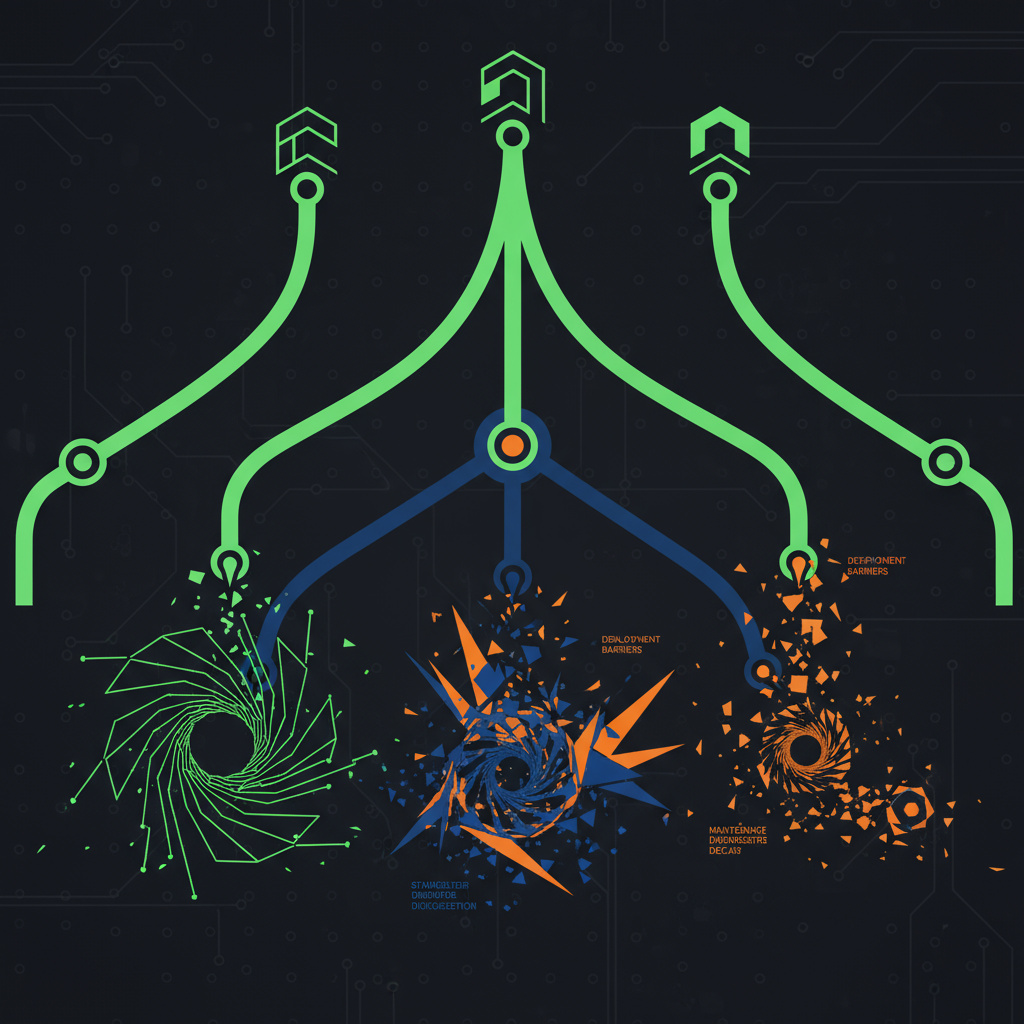

Analysing dozens of AI projects that either succeeded or failed reveals six common pitfalls. Interestingly, technology quality rarely causes failure; instead, misaligned goals, poor planning, or unrealistic expectations derail projects.

Lesson 1: Vague Vision Spells Disaster

Every AI project requires clear, measurable objectives. Without them, developers build solutions in search of problems. A pharmaceutical manufacturer’s clinical trial AI aimed to “optimise the trial process” but didn’t define whether this meant accelerating patient recruitment, reducing dropout rates, or lowering costs. The resulting model was technically sound but irrelevant to the most pressing operational needs.

Takeaway: Define specific, measurable objectives using SMART criteria (Specific, Measurable, Achievable, Relevant, Time-bound). Aim for “reduce equipment downtime by 15% within six months” rather than “make things better.”
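One way to make an objective like the downtime example checkable is to write it down as data. The sketch below is illustrative only: the `Objective` class, the metric name, the 40-hour baseline and the deadline are all hypothetical and do not come from any project described here.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Objective:
    """A measurable objective: reduce one metric by a set fraction before a deadline."""
    metric: str
    baseline: float          # value measured before the project starts
    target_reduction: float  # fraction, e.g. 0.15 for a 15% reduction
    deadline: date

    @property
    def target_value(self) -> float:
        return self.baseline * (1.0 - self.target_reduction)

    def is_met(self, observed: float, on: date) -> bool:
        """True only if the observed value reaches the target on or before the deadline."""
        return on <= self.deadline and observed <= self.target_value


# Hypothetical numbers: 40 hours of monthly downtime, to be cut by 15% by 30 June 2026.
downtime = Objective("monthly_downtime_hours", 40.0, 0.15, date(2026, 6, 30))
```

An objective that cannot be expressed in this form (a metric, a baseline, a target and a date) is probably still too vague to build against.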

Lesson 2: Data Quality Overtakes Quantity

Poor-quality data poisons AI systems. A retail client’s inventory prediction model used years of sales data riddled with inconsistencies: missing entries, duplicate records, outdated product codes. The model performed well in testing but failed in production because it learned from noisy, unreliable data.

Takeaway: Invest in data quality over volume. Use tools like Pandas for preprocessing and Great Expectations for validation to catch issues early. Conduct exploratory data analysis with visualisations to spot outliers.
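The three problems named in the retail example (missing entries, duplicate records, outdated product codes) can each be counted before any training starts. The sketch below uses plain Python as a dependency-free stand-in for the Pandas or Great Expectations checks mentioned above; the `audit_sales` function, the field names and the sample rows are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical catalogue of product codes that are still active.
ACTIVE_CODES = {"A100", "B200"}


def audit_sales(rows, active_codes):
    """Count duplicate rows, missing quantities and unknown product codes."""
    issues = Counter()
    seen = set()
    for row in rows:
        key = (row.get("date"), row.get("code"), row.get("qty"))
        if key in seen:
            issues["duplicate"] += 1
            continue  # do not double-count problems in a repeated row
        seen.add(key)
        if row.get("qty") is None:
            issues["missing_qty"] += 1
        if row.get("code") not in active_codes:
            issues["unknown_code"] += 1
    return dict(issues)


rows = [
    {"date": "2025-01-02", "code": "A100", "qty": 3},
    {"date": "2025-01-02", "code": "A100", "qty": 3},     # exact duplicate
    {"date": "2025-01-03", "code": "B200", "qty": None},  # missing quantity
    {"date": "2025-01-04", "code": "Z999", "qty": 1},     # retired product code
]
report = audit_sales(rows, ACTIVE_CODES)
```

Running an audit like this as a gate in the training pipeline, and failing the run when any count exceeds an agreed limit, is one way to catch issues early.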

Lesson 3: Overcomplicating Models Backfires

Technical complexity doesn’t guarantee better outcomes. A healthcare project initially deployed a sophisticated convolutional neural network for medical image anomaly detection. Although the network was state-of-the-art, its high computational cost meant weeks of training, and its “black box” nature made it hard for clinicians to trust. A simpler random forest model matched its predictive accuracy whilst being faster to train and easier to interpret, which is critical for clinical adoption.

Takeaway: Start simple. Use straightforward algorithms to establish baselines. Scale to complex models only when problems demand it. Prioritise explainability to build stakeholder trust.
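A baseline only helps if the complex model is actually compared against it. The following sketch shows one possible form of that comparison, using a majority-class predictor as the simplest baseline. The labels, the `worth_the_complexity` rule and its 0.02 margin are hypothetical; a real project would pick its own baseline and threshold.

```python
from collections import Counter


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def majority_baseline(train_labels, n):
    """Predict the most common training label for every one of n cases."""
    most_common = Counter(train_labels).most_common(1)[0][0]
    return [most_common] * n


def worth_the_complexity(candidate_acc, baseline_acc, min_gain=0.02):
    """Hypothetical rule: adopt the complex model only if it beats the baseline by min_gain."""
    return candidate_acc - baseline_acc >= min_gain


# Illustrative labels only.
train = ["normal"] * 8 + ["anomaly"] * 2
test = ["normal", "normal", "anomaly", "normal", "normal"]
baseline_acc = accuracy(majority_baseline(train, len(test)), test)
```

On imbalanced data like this, accuracy alone flatters the baseline, so a real comparison would usually add a metric such as recall on the rare class.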

Lesson 4: Ignoring Deployment Realities

Models excelling in Jupyter Notebooks can crash in production. An e-commerce recommendation engine couldn’t handle peak traffic because it wasn’t built with scalability in mind, choking under load and frustrating users. The oversight cost weeks of rework.

Takeaway: Plan for production from day one. Package models in Docker containers and deploy them with Kubernetes for scalability. Monitor performance to catch bottlenecks early. Test under realistic conditions.
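Testing under realistic conditions can start small, well before any cluster exists. The sketch below fires concurrent requests at a stand-in `predict` function and reports a latency percentile. Everything in it is an assumption for illustration: `predict` is a stub with a 1 ms sleep, and the request count and concurrency are arbitrary rather than measured traffic.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def predict(user_id):
    """Stand-in for a real model call; the 1 ms sleep mimics inference time."""
    time.sleep(0.001)
    return user_id % 10


def timed_call(user_id):
    """Return the wall-clock duration of one predict() call in seconds."""
    start = time.perf_counter()
    predict(user_id)
    return time.perf_counter() - start


def load_test(n_requests=200, concurrency=20):
    """Send n_requests to predict() from a thread pool; return sorted latencies."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return sorted(pool.map(timed_call, range(n_requests)))


def percentile(sorted_values, q):
    """Nearest-rank percentile of an ascending list, for q in (0, 100]."""
    rank = max(1, math.ceil(len(sorted_values) * q / 100))
    return sorted_values[rank - 1]


latencies = load_test()
p95 = percentile(latencies, 95)
```

Tracking a tail percentile such as p95 rather than the mean is a deliberate choice here, since peak-traffic failures tend to show up in the slowest requests first.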

Lesson 5: Neglecting Model Maintenance

AI models aren’t set-and-forget. A financial forecasting model performed well for months until market conditions shifted. Unmonitored data drift degraded predictions, and the lack of retraining pipelines meant manual fixes. The project lost credibility before developers could recover.

Takeaway: Build for longevity. Implement data drift monitoring, automate retraining pipelines, and track experiments. Incorporate active learning to prioritise labelling for uncertain predictions.
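Two of these ideas fit in a few lines each. The sketch below computes a Population Stability Index (PSI), one common drift measure, between a reference sample and a live sample, and picks the predictions closest to 0.5 as labelling candidates (uncertainty sampling, a simple form of active learning). The function names, bin count and sample data are assumptions for illustration, and PSI is only one of several ways to monitor drift.

```python
import math


def psi(expected, actual, n_bins=5, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant reference sample

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), n_bins - 1)
            counts[idx] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def most_uncertain(probabilities, k):
    """Indices of the k predicted probabilities closest to 0.5."""
    ranked = sorted(range(len(probabilities)), key=lambda i: abs(probabilities[i] - 0.5))
    return ranked[:k]


reference = [float(x) for x in range(100)]       # feature values at training time
shifted = [float(x) + 40.0 for x in range(100)]  # the same feature after a shift
drift_score = psi(reference, shifted)
to_label = most_uncertain([0.95, 0.52, 0.10, 0.45], k=2)
```

PSI values around 0.1 and 0.25 are often quoted as rule-of-thumb thresholds for moderate and significant drift; treat them as starting points to tune, not fixed limits. A score above the chosen threshold would be the trigger for the retraining pipeline.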

Lesson 6: Underestimating Stakeholder Buy-In

Technology doesn’t exist in a vacuum. A fraud detection model was technically flawless but failed because bank employees didn’t trust it. Without clear explanations or training, they ignored its alerts, rendering it useless.

Takeaway: Prioritise human-centric design. Use explainability tools to make decisions transparent. Engage stakeholders early with demos and feedback loops. Train users on interpreting and acting on AI outputs.
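To make "transparent decisions" concrete, the sketch below attaches reasons to an alert by ranking per-feature contributions to a linear score. It is a deliberately simple illustration, not the method used in the fraud project: the weights, feature names and `explain_alert` function are hypothetical, and non-linear models would need a dedicated explainability tool instead.

```python
# Hypothetical weights for a linear fraud score; a real model would supply its own.
WEIGHTS = {"amount_vs_typical": 1.8, "new_device": 1.2, "foreign_country": 0.9}


def explain_alert(features, weights, top_n=2):
    """Return the score and the top_n features that contributed most to it."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = sum(contributions.values())
    top = sorted(contributions, key=lambda n: abs(contributions[n]), reverse=True)[:top_n]
    return score, [(name, round(contributions[name], 2)) for name in top]


score, reasons = explain_alert(
    {"amount_vs_typical": 2.0, "new_device": 1.0, "foreign_country": 0.0}, WEIGHTS
)
```

Showing `reasons` next to each alert gives the person reviewing it something to verify, which is a more realistic route to trust than a bare score.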

Looking Forward

The roadmap to success combines clear SMART goals, data quality prioritisation, simple baseline algorithms, production-ready design, robust maintenance planning, and stakeholder engagement from the outset.

These lessons highlight that AI project success depends less on cutting-edge technology and more on disciplined planning, realistic expectations, and human factors. Trust remains as critical as accuracy, and maintainability matters as much as initial performance.

Source Attribution:

- Source: VentureBeat

- Original: https://venturebeat.com/ai/6-proven-lessons-from-the-ai-projects-that-broke-before-they-scaled

- Published: 10 November 2025