TL;DR: An HPE survey of 1,775 IT leaders shows that 22% of organizations have operationalized AI (up from 15% last year), whilst the majority remain stuck in experimentation. Craig Partridge, senior director worldwide of Digital Next Advisory at HPE, identifies three requirements for production AI: trust as an operating principle, data-centric execution, and IT leadership capable of scaling. HPE’s four-quadrant AI factory framework (Run, RAG, Riches, Regulate) provides a strategic map for different model- and data-ownership scenarios.

The gap between AI proofs of concept and production value is the defining challenge for enterprise AI maturity. Whilst training models demonstrates engineering capability, it is inference, the operational layer where models flag malfunctioning machines or optimize workflows, that delivers bottom-line transformation.

“The true value of AI lies in inference,” states Craig Partridge, senior director worldwide of Digital Next Advisory at HPE. “The phrase we use for this is ‘trusted AI inferencing at scale and in production.’ That’s where we think the biggest return on AI investments will come from.”

Christian Reichenbach, worldwide digital advisor at HPE, notes that whilst progress is measurable (22% of organizations have operationalized AI, versus 15% previously), the majority remain in the experimentation phase. Reaching production requires addressing three critical dimensions.

Trust as Prerequisite for High-Stakes AI

Trusted inference means users can rely on what an AI system outputs. That matters for generating marketing copy; it is critical for surgical-assistance robots or autonomous vehicles. Trust starts with data quality, hence Partridge’s maxim: “Bad data in equals bad inferencing out.”

Reichenbach cites the real-world cost of insufficient data quality: unreliable AI-generated content, including hallucinations, that clogs workflows and forces employees to spend significant time fact-checking. “When things go wrong, trust goes down, productivity gains are not reached, and the outcome we’re looking for is not achieved.”

Conversely, when trust is engineered into inference systems, it amplifies efficiency gains. Network operations teams using trusted inferencing engines, for example, gain “a 24/7 member of the team they didn’t have before,” delivering faster, more accurate, custom-tailored recommendations.

Data-Centric Shift and the AI Factory Framework

The focus has shifted from hiring data scientists and pursuing trillion-parameter models toward data engineering and architecture. “Over the past five years, what’s become more meaningful is breaking down data silos, accessing data streams, and quickly unlocking value,” says Reichenbach.

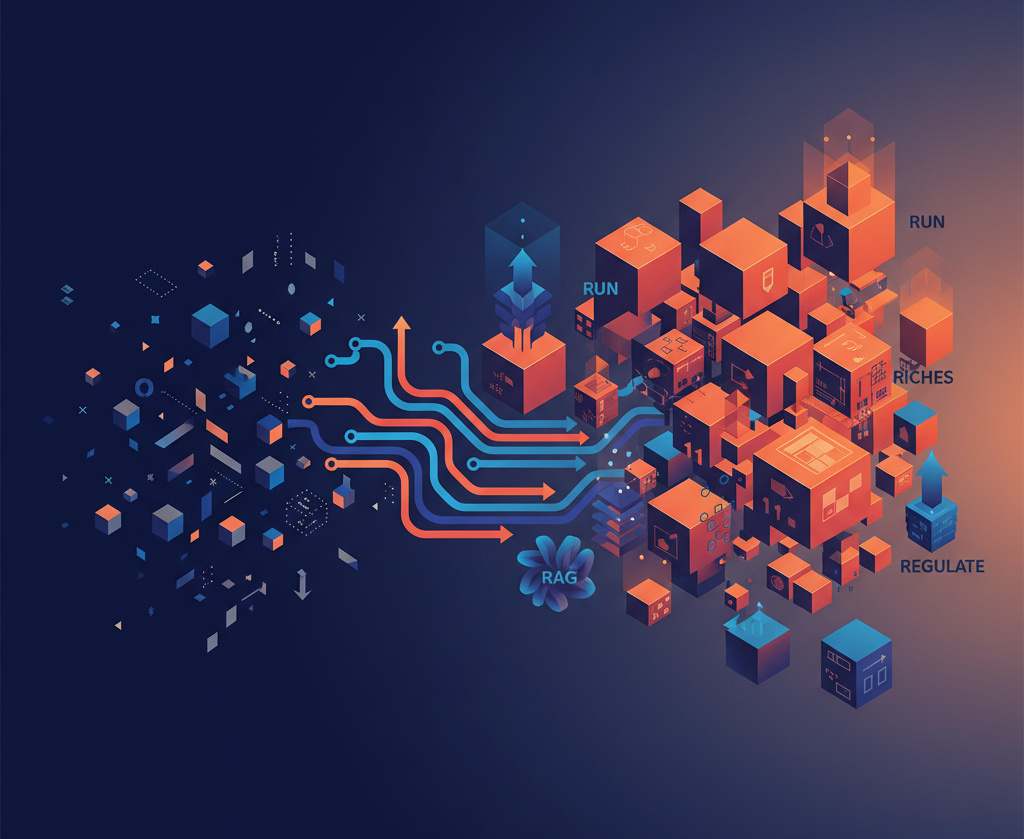

This evolution has accompanied the rise of the AI factory: an always-on production line where data moves through pipelines and feedback loops to generate continuous intelligence. Strategic direction hinges on two questions: How much of the intelligence (the model) is truly yours? How much of the input (the data) is uniquely yours?

HPE’s four-quadrant AI factory framework maps these dimensions:

Run: External pretrained models accessed via API. Organizations own neither the model nor the data. Implementation requires strong security, governance, and a center of excellence to make usage decisions.

RAG (Retrieval Augmented Generation): External pretrained models combined with proprietary data. Implementation focuses on connecting data streams to inferencing capabilities through full-stack AI platforms.

Riches: Custom models trained on enterprise-owned data for unique differentiation. Requires scalable, energy-efficient environments and high-performance systems.

Regulate: Custom models trained on external data. Demands a scalable setup plus enhanced legal and regulatory compliance for handling sensitive data the organization does not own.

Importantly, the quadrants are not mutually exclusive: most organizations, including HPE, operate across several quadrants at once.
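Because the four quadrants reduce to two ownership questions, the map can be sketched as a small lookup. The Python below is a hypothetical illustration, not an HPE tool: it simplifies “how much is yours” to a yes/no flag per dimension (an assumption; in practice ownership is a matter of degree), and the names `Initiative`, `classify`, `portfolio`, and `FOCUS` are invented for this example. The focus strings paraphrase the implementation notes above. Grouping several initiatives shows how one organization can sit in more than one quadrant at a time.

```python
from dataclasses import dataclass
from enum import Enum


class Quadrant(Enum):
    RUN = "Run"
    RAG = "RAG"
    RICHES = "Riches"
    REGULATE = "Regulate"


# Implementation focus per quadrant, paraphrased from the framework description.
FOCUS = {
    Quadrant.RUN: "security, governance, and a center of excellence for usage decisions",
    Quadrant.RAG: "connecting data streams to inference via a full-stack AI platform",
    Quadrant.RICHES: "scalable, energy-efficient, high-performance environments",
    Quadrant.REGULATE: "scalable setup plus legal and regulatory compliance",
}


@dataclass(frozen=True)
class Initiative:
    """One AI use case (hypothetical model); ownership is simplified to booleans."""
    name: str
    owns_model: bool  # True if the model is custom-trained by the organization
    owns_data: bool   # True if the input data is proprietary to the organization


def classify(initiative: Initiative) -> Quadrant:
    """Map model/data ownership onto the four quadrants."""
    if initiative.owns_model:
        # Custom model: own data -> Riches; external data -> Regulate.
        return Quadrant.RICHES if initiative.owns_data else Quadrant.REGULATE
    # External pretrained model: own data -> RAG; no own data -> Run.
    return Quadrant.RAG if initiative.owns_data else Quadrant.RUN


def portfolio(initiatives: list[Initiative]) -> dict[Quadrant, list[str]]:
    """Group initiative names by quadrant; one organization can span several."""
    groups: dict[Quadrant, list[str]] = {}
    for item in initiatives:
        groups.setdefault(classify(item), []).append(item.name)
    return groups


if __name__ == "__main__":
    # Illustrative initiatives, not drawn from the survey.
    examples = [
        Initiative("marketing copy via hosted model API", owns_model=False, owns_data=False),
        Initiative("support assistant over internal documents", owns_model=False, owns_data=True),
        Initiative("predictive maintenance on sensor data", owns_model=True, owns_data=True),
    ]
    for quadrant, names in portfolio(examples).items():
        print(f"{quadrant.value}: {', '.join(names)} (focus: {FOCUS[quadrant]})")
```

Keeping classification separate from grouping mirrors the point that the quadrant is a property of each initiative, not of the organization as a whole.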

IT Leadership Mandate for Scale

“At scale” speaks to enterprise AI’s primary tension: what works for a handful of use cases often breaks when applied organization-wide. “If you want to really see the benefits of AI, it needs to be something that everybody can engage in and that solves for many different use cases,” notes Partridge.

The challenge of scaling boutique pilots aligns directly with IT’s core competencies. History offers a cautionary tale from enterprise cloud migration a decade ago: many IT departments sat out early adoption whilst business units independently deployed cloud services, creating fragmented systems, redundant spending, and security gaps that took years to untangle.

“Shadow AI”, meaning pilots that proliferate without oversight or governance, threatens to repeat this dynamic. Rather than shut down experimentation, IT must bring structure through a data platform strategy that combines enterprise data with guardrails, a governance framework, and accessibility. Success requires standardizing infrastructure (such as private cloud AI platforms), protecting data integrity, and safeguarding brand trust, all whilst enabling speed and flexibility.

“It comes down to knowing where you play,” summarizes Reichenbach. “When to Run external models smarter, when to apply RAG to make them more informed, where to invest to unlock Riches from your own data and models, and when to Regulate what you don’t control. The winners will be those who bring clarity to all quadrants and align technology ambition with governance and value creation.”

Source: MIT Technology Review