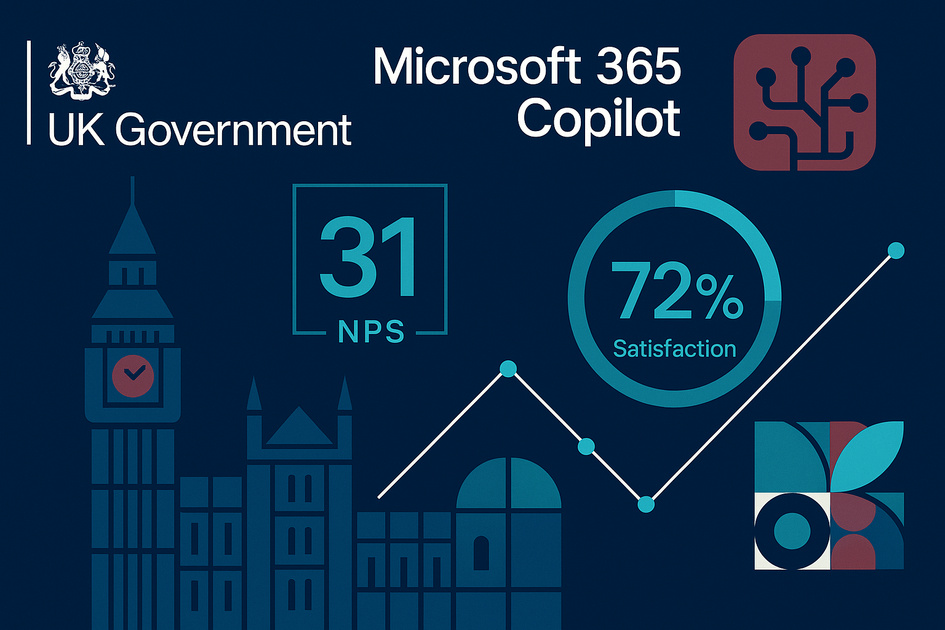

When the UK Department for Business and Trade concluded its evaluation of Microsoft 365 Copilot in March 2025, the results painted a picture far more nuanced than typical AI success stories. With a 72% satisfaction rate and a respectable Net Promoter Score of 31, the evaluation might seem like another positive AI case study. But dig deeper into the data and a more complex reality emerges, one that offers critical insights for organisations contemplating their own AI journeys.

Key Finding: The government’s rigorous evaluation of 1,000 licences over three months offers something rare in the AI implementation landscape: honest, methodologically sound analysis that doesn’t shy away from challenges and failures alongside successes.

Context: A Government-Scale AI Experiment

The Department for Business and Trade’s evaluation ran from October 2024 to March 2025, examining Microsoft 365 Copilot deployment across a representative sample of UK-based civil servants. Active pilot usage ran from October to December 2024, with evaluation and analysis extending through to March 2025. Unlike many corporate AI pilots that rely on cherry-picked metrics, this study combined several research methods: diary studies, controlled observations, statistical analysis, and interviews with both users and a control group.

The scope was deliberately broad—encompassing everything from administrative tasks to complex policy work—making the findings particularly relevant for organisations considering large-scale AI deployment across diverse roles and skill levels.

What Worked: The Unexpected Success Stories

Accessibility Breakthrough

Perhaps the most significant finding wasn’t about productivity gains but about democratising workplace capability. Neurodiverse users were more satisfied with Copilot than their colleagues, a difference that was statistically significant at the 90% confidence level, with one participant describing it as “levelling the playing field.”

User Testimony: “It’s not revolutionary, it’s not going to change how I do my work, but it does make certain things easier… it’s made me more confident being able to do reporting… it has actually empowered me.” — User with dyslexia

Key Impact Areas:

- Neurodiverse accessibility: Enhanced confidence and capability

- Language barriers: Improved communication for non-native English speakers

- Career confidence: Transformative impact beyond simple task completion

Self-Directed Learning Triumph

Critical Insight: Users who completed two or more hours of self-led training reported significantly higher satisfaction (99% confidence level) than those relying solely on formal departmental sessions.

This suggests that AI adoption success depends less on institutional training programmes and more on individuals discovering personally relevant use cases through experimentation.
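This article does not say which statistical test the evaluators used, so the following is only a sketch of one common way to check whether two groups' satisfaction rates differ at a 99% confidence level: a two-proportion z-test. The function name and the counts are hypothetical and are not taken from the report.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    p_a = success_a / n_a
    p_b = success_b / n_b
    # Pooled proportion under the null hypothesis of no difference.
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts (assumption, NOT report data):
# 85 of 100 self-trained users satisfied vs 65 of 100 formally trained users.
z, p = two_proportion_z_test(success_a=85, n_a=100, success_b=65, n_b=100)
significant_at_99 = p < 0.01  # 99% confidence corresponds to p < 0.01
print(f"z = {z:.2f}, p = {p:.4f}, significant at 99%: {significant_at_99}")
```

A significant result of this kind shows that the groups differ. It does not show that self-led training caused the higher satisfaction, since more motivated users may simply have trained more.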

Task-Specific Excellence

Productivity Gains by Task Type:

| Task Category | Time Savings | Effectiveness |
|---|---|---|
| Document drafting | 1.3 hours/task | ⭐⭐⭐⭐⭐ Excellent |
| Research summarisation | 0.8 hours/task | ⭐⭐⭐⭐⭐ Excellent |
| Presentations | Limited benefit | ⭐⭐ Poor |
| Complex scheduling | Limited benefit | ⭐⭐ Poor |

The tool excelled at the administrative burden that often drowns knowledge workers, freeing time for strategic thinking.
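To see what the per-task figures in the table above could mean for one person's week, here is a rough back-of-the-envelope sketch. The per-task savings come from the table. The weekly task counts are a hypothetical workload, and task types without a quoted figure are treated as saving nothing.

```python
# Per-task savings quoted in the table above (hours per task).
HOURS_SAVED_PER_TASK = {
    "document_drafting": 1.3,
    "research_summarisation": 0.8,
}


def weekly_hours_saved(tasks_per_week):
    """Sum per-task savings over a weekly task mix; unlisted task types count as zero."""
    return sum(
        HOURS_SAVED_PER_TASK.get(task, 0.0) * count
        for task, count in tasks_per_week.items()
    )


# Hypothetical weekly workload for one user (assumption, not report data).
example_week = {
    "document_drafting": 2,
    "research_summarisation": 3,
    "presentations": 1,  # "limited benefit" in the table, so no saving is assumed
}
total = weekly_hours_saved(example_week)
print(f"Estimated hours saved this week: {total:.1f}")
```

An estimate like this is only as good as the task mix fed into it, which is one reason to collect role-specific task counts before projecting organisation-wide savings.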

Critical Gaps: Where Good Intentions Met Reality

⚠️ Warning: Despite 72% satisfaction, the evaluation revealed dangerous gaps in implementation that could undermine long-term success.

Quality Assurance Vulnerabilities

The Quality Control Crisis:

- 22% of users encountered hallucinations

- Inconsistent validation practices across teams

- Variable review standards depending on output usage

- Higher risk in critical work products

Concerning Pattern: Users admitted they didn’t thoroughly review outputs when not sharing them with colleagues—creating a dangerous precedent for personal productivity tasks.

The Control Group Problem

Perhaps most troubling was the revelation that colleagues without Copilot access had minimal understanding of its capabilities, limitations, or security implications. This knowledge gap created organisational friction and limited the tool’s potential impact through reduced collaboration and support.

Environmental and Ethical Friction

An unexpected barrier emerged: environmental concerns about large language model energy consumption. Some users limited their Copilot usage due to sustainability worries, highlighting how organisational values can conflict with productivity tools in ways traditional change management rarely anticipates.

The Human Factor: Why Culture Trumps Technology

The evaluation revealed that team and management attitudes significantly influenced individual usage patterns. Users with supportive managers and colleagues engaged more deeply with the tool, whilst those facing scepticism or resistance held back—often to the detriment of their potential productivity gains.

This social dynamic suggests that successful AI implementation requires as much attention to cultural preparation as technical deployment. The technology worked; the human systems surrounding it often didn’t.

One user captured this tension: “I was probably more hesitant to use it than I should have been at certain points, worrying that the information might be sensitive”—illustrating how uncertainty about policies and cultural acceptance can undermine even willing adopters.

Strategic Recommendations for Implementation Success

💡 Implementation Framework: Eight evidence-based strategies that can dramatically improve your AI deployment success rates.

1. 🔍 Pre-Implementation Readiness Assessment

Before deploying AI tools, organisations must evaluate:

- Quality assurance culture maturity

- Policy clarity and governance readiness

- Management buy-in and cultural support

- Technical infrastructure and security frameworks

Key Insight: The government’s experience suggests these foundational elements matter more than the technology itself.

2. ♿ Accessibility-First Training Strategy

Priority User Groups for Maximum Impact:

- Neurodiverse individuals (highest satisfaction rates)

- Non-native English speakers (transformative communication benefits)

- Administrative role holders (clearest productivity gains)

Rather than generic training programmes, focus resources on users who will benefit most. Their success creates visible wins that drive broader adoption.

3. 🧪 Self-Discovery Learning Architecture

Replace traditional training with:

- Protected experimentation time (minimum 2 hours)

- Guided exploration frameworks

- Peer learning and sharing sessions

- Personal use case development workshops

Evidence: Users with two or more hours of self-directed learning reported higher satisfaction than those relying on formal training alone, a difference significant at the 99% confidence level.

4. 📢 Comprehensive Change Communication

Address the knowledge gap systematically:

- Educate non-users about AI capabilities and limitations

- Create clear governance and security policies

- Establish support channels and escalation paths

- Build organisational AI literacy across all levels

Risk Factor: Colleagues without access became unwitting barriers to adoption through misunderstanding and concern.

5. 🎯 Task-Specific Implementation

Start Where Success Is Most Likely:

✅ High-Impact Areas: Document drafting, research summarisation, email composition

❌ Avoid Initially: Presentations, complex scheduling, nuanced policy work

Deploy AI strategically, focusing initially on written tasks where benefits are clearest and measurable.

6. 🌱 Values-Aligned Deployment

Proactively Address Ethical Concerns:

- Environmental impact of large language models

- Data privacy and security implications

- Bias and fairness in AI outputs

- Transparency in AI-assisted work

Address these concerns head-on rather than hoping they’ll be overlooked. Transparency about trade-offs builds trust and reduces resistance.

7. ✅ Continuous Quality Assurance Framework

Essential Quality Controls:

- Standardised review processes for AI outputs

- Role-based validation requirements

- Hallucination detection and reporting systems

- Regular quality audits and improvement cycles

Critical Risk: The government’s experience showed dangerous variability in how users validated AI-generated content, a risk that grows as usage expands into production work.

8. 📊 Success Metrics Beyond Satisfaction

Track What Actually Matters:

- Hallucination detection rates

- Quality assurance consistency scores

- Task-specific adoption patterns

- True productivity gains (not just time savings)

- User capability enhancement metrics
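As a rough illustration of how the first two metrics might be tracked, the sketch below summarises a log of AI-generated outputs. The `OutputRecord` fields and the sample records are hypothetical. The evaluation does not prescribe a logging schema, so treat this as one possible starting point.

```python
from dataclasses import dataclass


@dataclass
class OutputRecord:
    """One AI-generated output, as logged by a hypothetical review process."""
    task_type: str
    reviewed: bool             # did a human check the output before use?
    hallucination_found: bool  # was a fabricated or incorrect claim flagged?


def summarise(records):
    """Return the share of outputs reviewed and the hallucination rate among them."""
    total = len(records)
    if total == 0:
        return {"review_rate": 0.0, "hallucination_rate": 0.0}
    reviewed = [r for r in records if r.reviewed]
    flagged = sum(r.hallucination_found for r in reviewed)
    return {
        "review_rate": len(reviewed) / total,
        # Hallucinations can only be detected in outputs that were actually reviewed.
        "hallucination_rate": flagged / len(reviewed) if reviewed else 0.0,
    }


# Hypothetical sample log (assumption, not report data).
records = [
    OutputRecord("document_drafting", reviewed=True, hallucination_found=False),
    OutputRecord("research_summarisation", reviewed=True, hallucination_found=True),
    OutputRecord("document_drafting", reviewed=False, hallucination_found=False),
    OutputRecord("email", reviewed=True, hallucination_found=False),
]
summary = summarise(records)
print(summary)
```

Reporting the review rate next to the hallucination rate matters: a low hallucination rate means little if most outputs were never checked.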

The Hidden Implementation Challenges

🚨 Reality Check: The evaluation revealed several implementation challenges that rarely appear in vendor case studies but proved critical in practice.

The Three Hidden Pitfalls

📈 The Novelty Effect

Many reported time savings came from tasks users wouldn’t normally complete—suggesting initial productivity metrics may be inflated by artificial activity rather than genuine efficiency gains.

Warning: Early success metrics might not reflect sustainable productivity improvements.
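One way to guard against the novelty effect described above is to record, for each reported saving, whether the user would have done the task anyway, then report the two totals separately. The sketch below assumes that flag is collected, for example in a diary entry. The data shape and the figures are hypothetical.

```python
def split_savings(entries):
    """Split reported hours saved into baseline work and newly created work.

    Each entry is a (hours_saved, would_do_anyway) pair.
    """
    baseline = sum(hours for hours, would_do_anyway in entries if would_do_anyway)
    novelty = sum(hours for hours, would_do_anyway in entries if not would_do_anyway)
    return baseline, novelty


# Hypothetical diary entries (assumption, not report data).
entries = [(1.5, True), (0.5, False), (1.0, True), (2.0, False)]
baseline_hours, novelty_hours = split_savings(entries)
print(f"Savings on existing work: {baseline_hours:.1f} h")
print(f"Reported savings on tasks that would not otherwise be done: {novelty_hours:.1f} h")
```

Only the first figure represents time genuinely freed from an existing workload. The second may still be valuable, but it is new output rather than an efficiency gain.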

⚖️ The Skills Paradox

High-context roles (Legal, Policy) found limited benefit, whilst administrative roles saw significant value. This suggests AI deployment strategies must be carefully matched to role requirements rather than applied universally.

Role-Based Success Patterns:

- Administrative: ⭐⭐⭐⭐⭐ High value

- Technical writing: ⭐⭐⭐⭐ Good value

- Legal/Policy: ⭐⭐ Limited value

- Creative/Strategic: ⭐⭐ Mixed results

🎓 The Training Misconception

Formal training programmes showed minimal correlation with satisfaction, challenging traditional change management approaches. Success correlated with personal experimentation time—a resource many organisations struggle to provide.

Key Learning: Formal training alone did little to raise satisfaction; self-directed experimentation did.

Looking Forward: The Real Measure of AI Success

🎯 Success Redefined: AI implementation success shouldn’t be measured solely by productivity metrics or satisfaction scores. Instead, it should be evaluated on whether it enhances human capability whilst preserving the quality and integrity of work outputs.

The Three Key Takeaways

1. Beyond the Headlines 📊

The most telling finding wasn’t the 72% satisfaction rate, but the nuanced reality behind it: AI tools can deliver significant value when thoughtfully implemented with attention to human factors, cultural readiness, and ongoing quality assurance.

2. People Over Technology 👥

The Critical Question: Focus less on the technology’s capabilities and more on your organisation’s readiness to integrate it thoughtfully. The AI works—the question is whether your people and processes are prepared to make it work effectively.

3. Evidence-Based Implementation 📋

The government’s honest evaluation provides a roadmap not just for what to implement, but how to think about implementation itself. In an era of AI hype and vendor promises, such evidence-based insight is invaluable.

For leaders contemplating AI deployment: use this evaluation as a guide to the genuine opportunities and challenges of artificial intelligence in the workplace.

Bottom Line: AI implementation is fundamentally a human challenge, not a technical one. Plan accordingly.

Source: UK Department for Business and Trade, “Microsoft 365 Copilot Evaluation Report”, August 2025.