The Strategic Inflection Point

The first week of October 2025 marks a fundamental shift in UK SME AI economics: a £3 million eBay-OpenAI programme opens zero-cost enterprise AI access to 10,000 small businesses, Microsoft bundles advanced Copilot into a $19.99 consumer tier with bring-your-own-AI capabilities, and 14 regional tech booster projects simultaneously activate with funding, mentorship, and investor networks.

Strategic Insight: The collision of sponsored access, platform convergence at £15–£20 per seat, and government-backed regional enablement creates the first genuine “try-before-you-scale” window for cash-constrained SMEs—but only if governance, skills, and measurable value frameworks exist before tools are deployed.

This convergence compresses the traditional pilot-to-production timeline from quarters to weeks, yet simultaneously exposes a critical gap: UK insurance and financial sector leaders explicitly warn that “AI is not a free ticket,” citing governance failures, cyber exposure, and value measurement deficits as primary reasons pilots stall or underperform. The opportunity isn’t tool access—it’s strategic readiness when access arrives.

The Economics of Enablement Have Fundamentally Shifted

The Real Story Behind the Headlines

Beneath the programme announcements lies a structural market transformation. eBay and OpenAI aren't simply distributing ChatGPT Enterprise licences; they're field-testing SME-scale administrative automation, content generation, and sales analytics playbooks with a 12-month access window designed to prove ROI before subscription economics kick in. Microsoft's retirement of Copilot Pro in favour of Microsoft 365 Premium ($19.99/month with BYOAI into workplace tenants) removes much of the historic friction of parallel licensing and split data governance.

Meanwhile, Scotland’s £1 million SME AI programme and England’s Regional Tech Booster network activate consultancy, grants, and capability-building outside London’s traditional innovation corridors. The unifying thread: adoption economics now favour rapid experimentation with clear exit ramps, not sunk-cost pilots.

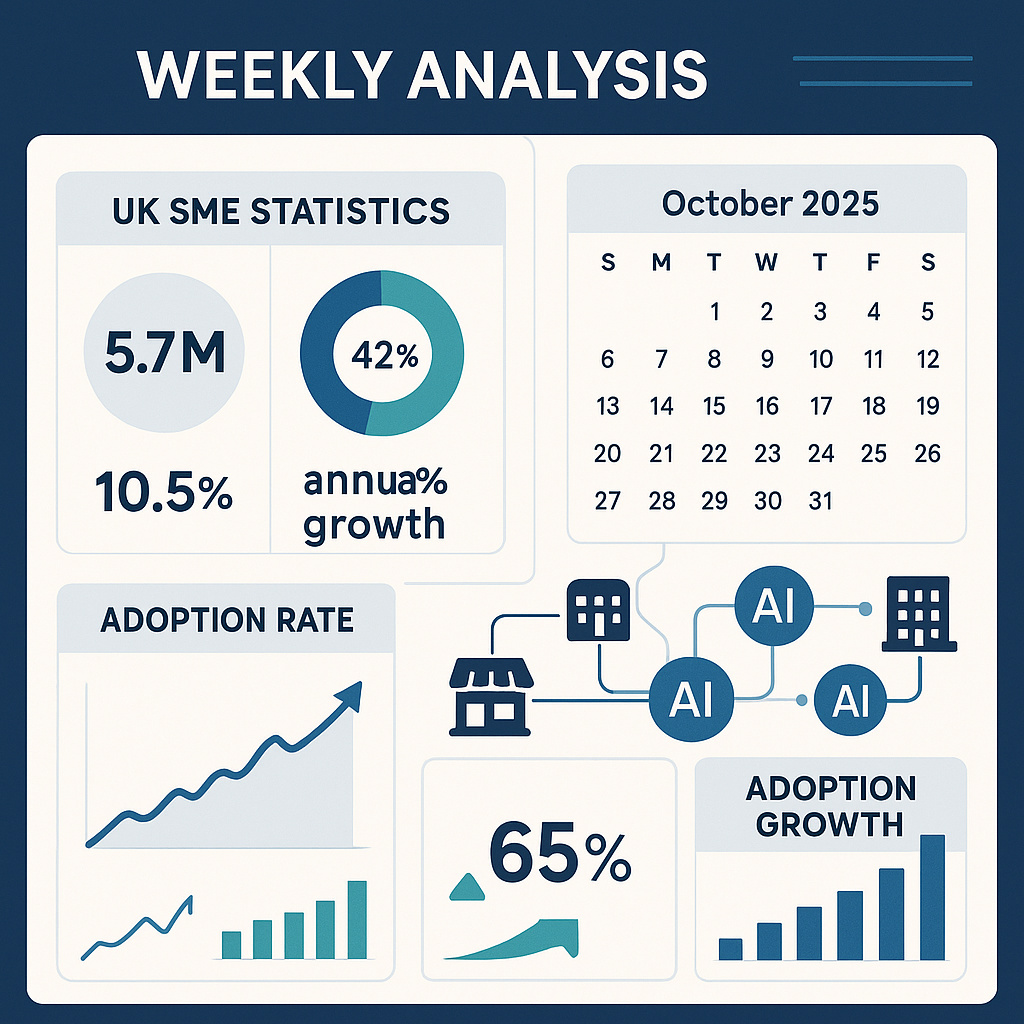

Critical Numbers: The Week’s Strategic Metrics

| Metric | Value | Strategic Implication |
|---|---|---|
| SME Programme Scale | 10,000 businesses (eBay/OpenAI) | Largest single-cohort enterprise AI deployment for UK SMEs; creates network effects and shared learning opportunities |
| Platform Price Convergence | £15–£20/user/month | Cross-vendor alignment enables like-for-like ROI comparison; reduces switching costs and increases procurement confidence |
| Regional Projects Activated | 14 Tech Booster initiatives | Signals policy shift from London-centric to distributed innovation; creates local implementation partnerships |
| Manufacturing Capability Uplift | 53 North West firms (Made Smarter) | Demonstrates structured peer learning and change leadership models transferable to other sectors |

What Strategic Readiness Actually Requires

What’s Really Happening in Implementation Reality

The tools are arriving faster than governance frameworks. UK financial services and insurance leaders specifically highlight explainability, defensibility, and measurable profitability as non-negotiable prerequisites—yet most SME pilots begin with feature exploration rather than outcome definition. The Made Smarter North West digital champions programme provides the counter-example: 53 firms progressed through facilitated peer learning, role-based training, and coached change leadership, not self-service platform trials.

Critical Insight: The organisations succeeding in October 2025 aren’t those with the most advanced models—they’re those with the clearest governance blueprints, role-specific training pathways, and quantified outcome metrics defined before tool deployment.

Success Factors Often Overlooked

- Pre-deployment data readiness audits: Knowing which data sets are AI-ready (structured, compliant, accessible) versus which require remediation before model deployment

- Role-based change leadership: Identifying digital champions within each function who can translate AI capabilities into workflow improvements, rather than relying on IT-driven, top-down mandates

- Vendor-agnostic integration planning: Accounting for UK feature limitations (e.g., OpenAI connectors not available in certain UK plans) and designing fallback workflows

- Governance-first architecture: Establishing explainability standards, human-in-the-loop checkpoints, and audit trails as deployment prerequisites, not post-implementation add-ons
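A pre-deployment data readiness audit can start as a plain checklist against the three criteria named above: structured, compliant, accessible. The sketch below is a minimal illustration only. The `DataSet` fields, the `audit` function and the rule that a data set counts as AI-ready only when all three criteria pass are assumptions for illustration, not a published audit standard.

```python
from dataclasses import dataclass


@dataclass
class DataSet:
    """Hypothetical record for one data set under audit."""
    name: str
    structured: bool   # consistent schema, e.g. tables rather than free-text dumps
    compliant: bool    # data protection review completed for this use
    accessible: bool   # the AI tool can reach it without manual export


def audit(datasets: list[DataSet]) -> dict[str, list[str]]:
    """Split data sets into AI-ready and remediation lists, naming the failed criteria."""
    ready: list[str] = []
    remediation: list[str] = []
    for ds in datasets:
        gaps = [
            criterion
            for criterion, ok in (
                ("structured", ds.structured),
                ("compliant", ds.compliant),
                ("accessible", ds.accessible),
            )
            if not ok
        ]
        if gaps:
            remediation.append(f"{ds.name}: fix {', '.join(gaps)}")
        else:
            ready.append(ds.name)
    return {"ai_ready": ready, "needs_remediation": remediation}


if __name__ == "__main__":
    report = audit([
        DataSet("product_catalogue", structured=True, compliant=True, accessible=True),
        DataSet("customer_emails", structured=False, compliant=False, accessible=True),
    ])
    print(report)
```

Keeping the failed criteria in the output matters more than the yes/no verdict: it turns the audit into a remediation backlog.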

The Implementation Reality: Platform Promises vs. Operational Constraints

Microsoft’s BYOAI into workplace tenants offers powerful flexibility—but requires existing Azure governance, data classification, and access control maturity most SMEs don’t possess. Google’s AI Pro trials compress evaluation windows but don’t inherently solve the skills gap in prompt engineering, output validation, or integration design. The eBay/OpenAI access window provides enterprise-grade tooling but assumes foundational capabilities in process mapping, data preparation, and change management that many micro-businesses lack.

⚠️ Risk Warning: Zero-cost access to advanced AI tools without parallel investment in governance frameworks, skills development, and outcome measurement infrastructure creates technical debt that surfaces as failed deployments, regulatory exposure, and organisational resistance when scaling beyond pilots.

The Human and Organisational Dimensions

Beyond the Technology: The Human Factor

The technology enablers this week—sponsored access, bundled platforms, regional funding—solve cost and availability barriers. They don’t inherently solve trust, competence, or organisational readiness deficits. UK sector leaders explicitly frame AI adoption as a governance and change leadership challenge, not a procurement decision. The Made Smarter digital champions model demonstrates this: capability uplift comes from peer networks, facilitated experimentation, and role-based coaching, not platform feature sets.

Stakeholder Impact Analysis

| Stakeholder Group | Primary Impact | Support Required | Success Metric |
|---|---|---|---|
| Finance/Operations Teams | Administrative automation, process optimisation opportunities via generative tools for documentation, reporting, analysis | Data readiness training, output validation frameworks, compliance guardrails | Time-to-complete reduction of 20–40% on repeatable tasks; error rate below baseline |
| Sales/Marketing Teams | Content generation, customer analytics, listing optimisation capabilities via accessible natural language interfaces | Prompt engineering training, brand voice alignment, ROI measurement dashboards | Conversion lift of 10–25%; content production cost reduction of 30–50% |
| Leadership/Board | Strategic decision on tool adoption, risk acceptance, investment allocation amidst governance warnings and opportunity signals | Governance blueprints, explainability standards, peer benchmarking on ROI and risk | Board-level KPI dashboard showing value capture vs. risk exposure quarterly |
| IT/Security Teams | Integration architecture, data governance, cyber risk management for BYOAI and enterprise tool deployments | Vendor-agnostic technical patterns, UK regulatory guidance, incident response playbooks | Zero data leakage incidents; integration uptime >99%; compliance audit success |

What Actually Drives Success: Redefining Metrics Beyond Technical KPIs

Traditional AI success metrics—model accuracy, inference speed, token efficiency—miss the organisational value drivers. October 2025’s successful implementations will be measured by:

- Time-to-trust: How quickly teams move from sceptical experimentation to confident operational deployment

- Governance maturity: Whether explainability, human oversight, and audit capabilities exist at deployment, not retrofitted post-incident

- Role-based competence: Percentage of users who can independently design, validate, and integrate AI outputs into their workflows

- Value realisation speed: Weeks from initial access to measurable revenue lift, cost reduction, or cycle time improvement
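Role-based competence and value realisation speed reduce to simple arithmetic once their inputs are recorded. The sketch below assumes that a champion or manager has assessed each user's skills and stored them as a set of labels. The labels `design`, `validate` and `integrate` mirror the definition above, and the function names are hypothetical. Time-to-trust and governance maturity need qualitative inputs and are not covered here.

```python
from datetime import date


def role_based_competence(users: dict[str, set[str]]) -> float:
    """Percentage of users assessed as able to design, validate AND integrate AI outputs."""
    required = {"design", "validate", "integrate"}
    if not users:
        return 0.0
    competent = sum(1 for skills in users.values() if required <= skills)
    return 100.0 * competent / len(users)


def value_realisation_weeks(access_start: date, first_measured_gain: date) -> float:
    """Weeks from initial access to the first measured revenue, cost or cycle-time gain."""
    return (first_measured_gain - access_start).days / 7


if __name__ == "__main__":
    team = {
        "alice": {"design", "validate", "integrate"},
        "bob": {"design", "validate"},
        "carol": {"design", "validate", "integrate"},
        "dan": set(),
    }
    print(f"Role-based competence: {role_based_competence(team):.0f}%")
    weeks = value_realisation_weeks(date(2025, 10, 6), date(2025, 11, 3))
    print(f"Value realisation: {weeks:.1f} weeks")
```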

🎯 Success Redefined: The winning metric isn’t “AI adoption rate”—it’s “governed AI value capture within 30 days of access, with documented explainability and zero regulatory exposure.”

From Strategy to Execution

💡 Implementation Framework for October 2025 Market Conditions:

Phase 1 (Weeks 1-2): Strategic Readiness Assessment

- Data readiness audit: identify AI-ready data sets vs. remediation requirements

- Governance blueprint: define explainability standards, human checkpoints, compliance gates

- Role mapping: identify digital champions and skill gaps per function

Phase 2 (Weeks 3-4): Controlled Pilot Deployment

- Deploy sponsored access or platform trials on 2-3 revenue-linked use cases

- Establish weekly outcome measurement (conversion, cycle time, error rate)

- Run parallel governance validation (explainability, audit trail, compliance)
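A human-in-the-loop checkpoint with an audit trail can run in parallel with the pilot from day one. The sketch below is a minimal illustration that assumes an append-only JSON Lines file as the audit store. `AuditEntry`, `review_output` and the log file name are hypothetical, and none of the platforms discussed here is being called; the reviewer's decision is simply passed in.

```python
import json
import os
import tempfile
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class AuditEntry:
    """One human review decision, stored as an audit-trail record."""
    use_case: str
    prompt_summary: str
    output: str
    reviewer: str
    approved: bool
    reason: str
    timestamp: str


def review_output(use_case: str, prompt_summary: str, output: str,
                  reviewer: str, approve: bool, reason: str,
                  log_path: str) -> Optional[str]:
    """Checkpoint: release the AI output only if a named reviewer approves; log every decision."""
    entry = AuditEntry(
        use_case=use_case,
        prompt_summary=prompt_summary,
        output=output,
        reviewer=reviewer,
        approved=approve,
        reason=reason,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    # Append-only JSON Lines log: one decision per line; existing lines are never rewritten.
    with open(log_path, "a", encoding="utf-8") as log:
        log.write(json.dumps(asdict(entry)) + "\n")
    return output if approve else None


if __name__ == "__main__":
    log_file = os.path.join(tempfile.mkdtemp(), "ai_audit_log.jsonl")
    released = review_output("listing_copy", "rewrite product title", "Solid oak desk, 120 cm",
                             reviewer="j.smith", approve=True, reason="accurate, on brand",
                             log_path=log_file)
    blocked = review_output("listing_copy", "rewrite product title", "Antique oak desk",
                            reviewer="j.smith", approve=False, reason="unsupported 'antique' claim",
                            log_path=log_file)
    print(released, blocked)
```

Rejected outputs are logged as well as approved ones. The rejection reasons are often the most useful evidence when explainability is reviewed later.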

Phase 3 (Weeks 5-8): Scale with Guardrails

- Expand to additional use cases only after Phase 2 outcomes meet thresholds

- Operationalise digital champion peer learning networks

- Establish board-level KPI dashboard for value vs. risk tracking
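The rule "expand only after Phase 2 outcomes meet thresholds" can be written as an explicit gate. In the sketch below, the thresholds come from the Finance/Operations row of the stakeholder table (at least 20% time reduction, error rate below baseline), plus a flag confirming that Phase 2 governance validation passed. `PilotResult` and `passes_gate` are hypothetical names, and each organisation would set its own thresholds.

```python
from dataclasses import dataclass


@dataclass
class PilotResult:
    """Measured outcome for one Phase 2 use case (hypothetical structure)."""
    use_case: str
    baseline_minutes: float          # average time-to-complete before the pilot
    pilot_minutes: float             # average time-to-complete during the pilot
    baseline_error_rate: float
    pilot_error_rate: float
    governance_checks_passed: bool   # explainability, audit trail, compliance


def passes_gate(r: PilotResult, min_time_saving: float = 0.20) -> bool:
    """Phase 3 gate: enough time saved, errors below baseline, governance validated."""
    time_saving = 1 - r.pilot_minutes / r.baseline_minutes
    return (
        time_saving >= min_time_saving
        and r.pilot_error_rate < r.baseline_error_rate
        and r.governance_checks_passed
    )


def ready_to_scale(results: list[PilotResult]) -> list[str]:
    """Names of use cases cleared to expand in Phase 3."""
    return [r.use_case for r in results if passes_gate(r)]


if __name__ == "__main__":
    results = [
        PilotResult("invoice_chasing", 60, 42, 0.05, 0.03, True),     # 30% faster
        PilotResult("listing_copy", 30, 27, 0.10, 0.08, True),        # only 10% faster
        PilotResult("report_drafting", 120, 60, 0.04, 0.02, False),   # governance gap
    ]
    print(ready_to_scale(results))
```

Governance is treated as a hard condition, not a weighted factor. A fast pilot without an audit trail does not progress.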

Priority Actions for Different Organisational Contexts

For Organisations Just Starting (No Prior AI Deployment)

- Action 1: Secure access to eBay/OpenAI programme or Microsoft 365 Premium trial to evaluate tools at zero marginal cost before subscription commitments

- Action 2: Map 2-3 administrative or content workflows where 20-40% time savings would materially impact capacity or revenue

- Action 3: Establish basic governance framework: define which data sets are permissible for AI use, who validates outputs, and what constitutes acceptable accuracy
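A basic governance framework of this kind fits in a few lines of configuration plus one check. The sketch below is illustrative only. `GovernancePolicy`, the data set names and the 95% default accuracy threshold are hypothetical placeholders, not recommended values.

```python
from dataclasses import dataclass


@dataclass
class GovernancePolicy:
    """Hypothetical minimal policy: permitted data, named validators, accuracy floor."""
    permitted_datasets: set[str]
    validation_owner: dict[str, str]   # use case -> person who validates outputs
    min_accuracy: float = 0.95         # placeholder: share of sampled outputs judged correct

    def check(self, use_case: str, datasets: set[str], sampled_accuracy: float) -> list[str]:
        """Return policy breaches; an empty list means the use case may proceed."""
        breaches: list[str] = []
        blocked = datasets - self.permitted_datasets
        if blocked:
            breaches.append(f"non-permitted data: {', '.join(sorted(blocked))}")
        if use_case not in self.validation_owner:
            breaches.append("no named output validation owner")
        if sampled_accuracy < self.min_accuracy:
            breaches.append(f"accuracy {sampled_accuracy:.0%} below {self.min_accuracy:.0%}")
        return breaches


if __name__ == "__main__":
    policy = GovernancePolicy(
        permitted_datasets={"product_catalogue", "public_website_copy"},
        validation_owner={"listing_descriptions": "Head of Sales"},
    )
    print(policy.check("listing_descriptions", {"product_catalogue"}, 0.97))
    print(policy.check("customer_replies", {"customer_emails"}, 0.90))
```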

For Organisations Already Underway (Active Pilots or Limited Deployment)

- Action 1: Audit existing pilots against governance criteria: explainability, human oversight, documented ROI—retrofit controls where gaps exist

- Action 2: Leverage Made Smarter or Regional Tech Booster networks to access peer learning and funded consultancy for capability uplift

- Action 3: Transition from feature exploration to outcome optimisation: set 30-day improvement targets for specific business metrics (conversion, cycle time, cost)

For Advanced Implementations (Multiple Use Cases, Cross-Functional Deployment)

- Action 1: Design vendor-agnostic integration patterns that account for UK feature limitations and enable platform flexibility as economics evolve

- Action 2: Build internal digital champion networks with formal training pathways, peer learning sessions, and change leadership coaching

- Action 3: Establish board-level AI governance committee with quarterly reporting on value capture, risk exposure, and capability maturity

The Challenges No One’s Discussing

Challenge 1: Governance Debt from Free Access Windows

Problem: Sponsored programmes and free trials incentivise rapid deployment without parallel investment in governance infrastructure. When access windows close and teams scale usage, the lack of explainability standards, audit trails, and compliance frameworks becomes a bottleneck or regulatory exposure.

Mitigation Strategy: Treat the access window as a governance pilot, not just a tool pilot. Establish explainability requirements, human validation checkpoints, and documentation standards during free access, so governance scales with usage when subscription economics begin.

Challenge 2: The Skills Gap Persists Despite Tool Accessibility

Problem: BYOAI and bundled platforms reduce cost friction but don’t inherently build prompt engineering competence, output validation skills, or integration design capabilities. SMEs risk deploying accessible tools with insufficient operator capability, leading to poor outcomes and organisational resistance.

Mitigation Strategy: Allocate 30-40% of pilot phase time to role-based training, not tool configuration. Use Made Smarter-style digital champion models to build peer learning networks where internal experts coach teams on practical use cases and validation techniques.

Challenge 3: Platform Lock-In Through Integration Investment

Problem: Whilst platform pricing converges, integration patterns diverge—especially with UK feature limitations. Deep investment in Microsoft 365 Premium’s BYOAI or Google’s AI Pro workflows creates switching costs and vendor dependency that erode the apparent flexibility of tool choice.

Mitigation Strategy: Design integration architectures with abstraction layers that separate business logic from vendor-specific APIs. Document UK feature gaps and maintain fallback workflows for capabilities restricted by regional availability.
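An abstraction layer with a documented fallback can be sketched as an interface that business logic depends on, with one adapter per vendor. Everything named below is hypothetical. `TextGenerator`, `VendorAAdapter`, `ManualFallback` and `FeatureUnavailable` are illustrative, and no real vendor SDK is called; a production adapter would wrap whichever client library the organisation actually uses.

```python
from typing import Protocol


class TextGenerator(Protocol):
    """Vendor-neutral interface that business logic depends on."""
    name: str

    def generate(self, prompt: str) -> str: ...


class FeatureUnavailable(Exception):
    """Raised by an adapter when a capability is not offered in this region or plan."""


class VendorAAdapter:
    """Hypothetical adapter; a real one would call a vendor SDK inside generate()."""
    name = "vendor_a"

    def __init__(self, available: bool = True) -> None:
        self.available = available

    def generate(self, prompt: str) -> str:
        if not self.available:
            raise FeatureUnavailable(f"{self.name}: capability not available on this UK plan")
        return f"[vendor_a] {prompt}"


class ManualFallback:
    """Fallback workflow: route the task to a person instead of a model."""
    name = "manual_queue"

    def generate(self, prompt: str) -> str:
        return f"QUEUED FOR HUMAN: {prompt}"


def draft_listing(product: str, providers: list[TextGenerator]) -> str:
    """Business logic: no vendor-specific code, tries providers in priority order."""
    prompt = f"Write a short product listing for: {product}"
    for provider in providers:
        try:
            return provider.generate(prompt)
        except FeatureUnavailable:
            continue  # documented regional gap: fall through to the next option
    raise RuntimeError("no provider or fallback available")


if __name__ == "__main__":
    print(draft_listing("oak desk", [VendorAAdapter(available=False), ManualFallback()]))
```

Switching vendors then means writing one new adapter and reordering the provider list, while `draft_listing` stays unchanged.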

Challenge 4: ROI Measurement That Ignores Opportunity Cost

Problem: Most pilots measure success as “productivity gain from AI” without accounting for the human time, change management effort, and governance investment required to achieve that gain. Positive ROI calculations often ignore these opportunity costs, leading to scaled deployments with hidden resource drains.

Mitigation Strategy: Implement full-cost accounting for AI initiatives: track not only tool subscription costs but also human hours for training, validation, integration, and governance. Define ROI thresholds that account for total organisational investment, not just software licensing.
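Full-cost accounting changes the ROI picture because human hours usually dwarf licence fees at SME scale. The sketch below uses ROI = (value − cost) / cost. All figures are made up for illustration, and the "loaded hourly rate" (salary plus overheads per staff hour) is an assumed input that each organisation would estimate for itself.

```python
from dataclasses import dataclass


@dataclass
class InitiativeCosts:
    """Costs over one measurement period (all example figures are hypothetical)."""
    licence_per_seat_month: float
    seats: int
    months: int
    training_hours: float
    validation_hours: float
    integration_hours: float
    governance_hours: float
    loaded_hourly_rate: float  # salary plus overheads per staff hour

    def software_cost(self) -> float:
        return self.licence_per_seat_month * self.seats * self.months

    def human_cost(self) -> float:
        hours = (self.training_hours + self.validation_hours
                 + self.integration_hours + self.governance_hours)
        return hours * self.loaded_hourly_rate

    def total_cost(self) -> float:
        return self.software_cost() + self.human_cost()


def roi(value_gained: float, cost: float) -> float:
    """Return on investment as a ratio: (value - cost) / cost."""
    return (value_gained - cost) / cost


if __name__ == "__main__":
    costs = InitiativeCosts(licence_per_seat_month=20, seats=5, months=3,
                            training_hours=40, validation_hours=30,
                            integration_hours=20, governance_hours=10,
                            loaded_hourly_rate=35)
    value = 4_000  # e.g. staff hours saved x rate, or margin on extra sales
    print(f"Licence-only ROI: {roi(value, costs.software_cost()):.0%}")
    print(f"Full-cost ROI:    {roi(value, costs.total_cost()):.0%}")
```

With these illustrative numbers, £300 of licences against £4,000 of value suggests an ROI of roughly 1,233%. Adding £3,500 of staff time brings it down to about 5%. This is the hidden resource drain described above.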

Strategic Imperatives for Q4 2025

The Core Value Proposition in Business Terms

The October 2025 market inflection doesn’t change the fundamental AI value drivers—process efficiency, decision quality, customer engagement—but it dramatically compresses the timeline and reduces the cost to prove those drivers. For UK SMEs, the strategic opportunity isn’t “adopt AI”—it’s “validate governed AI value capture in 4-6 weeks using funded access, then scale only what demonstrates ROI with documented explainability.”

Three Critical Success Factors

- Governance-First Deployment: Establish explainability standards, human checkpoints, and compliance gates before tool rollout, not as post-deployment retrofits

- Role-Based Capability Building: Invest in digital champion networks and peer learning that build practical skills in prompt engineering, output validation, and workflow integration

- Outcome-Driven Experimentation: Define measurable business metrics (conversion, cycle time, cost) and 30-day improvement thresholds before initiating pilots, ensuring tools serve outcomes rather than exploration

Reframing Success: From Adoption Metrics to Value Realisation

Traditional AI metrics focus on deployment breadth (users onboarded, features enabled, models in production). October 2025’s market conditions demand a fundamental reframe:

- Old metric: “AI adoption rate across teams” → New metric: “Percentage of use cases delivering ROI >2× within 60 days”

- Old metric: “Number of AI tools deployed” → New metric: “Governance maturity score: explainability, audit trail, compliance validation”

- Old metric: “Platform cost per user” → New metric: “Total cost of capability including training, validation, integration overhead”
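The first two replacement metrics can be computed from a simple per-use-case record. The sketch below rests on two labelled assumptions. First, "ROI >2× within 60 days" is read as measured value by day 60 exceeding twice the total cost by day 60, where total cost includes training, validation and integration overhead. Second, the governance maturity score is a hypothetical 0-3 count with one point each for explainability, audit trail and compliance validation, not an established scoring scheme.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    """One use case's record at its 60-day review (hypothetical fields)."""
    name: str
    value_by_day_60: float        # measured benefit in £
    total_cost_by_day_60: float   # licences plus training, validation, integration, governance
    has_explainability: bool
    has_audit_trail: bool
    has_compliance_validation: bool


def share_over_2x_within_60_days(cases: list[UseCase]) -> float:
    """Percentage of use cases whose 60-day value exceeds twice their 60-day total cost."""
    if not cases:
        return 0.0
    hits = sum(1 for c in cases if c.value_by_day_60 > 2 * c.total_cost_by_day_60)
    return 100.0 * hits / len(cases)


def governance_maturity(case: UseCase) -> int:
    """Score 0-3: one point each for explainability, audit trail, compliance validation."""
    return sum([case.has_explainability, case.has_audit_trail,
                case.has_compliance_validation])


if __name__ == "__main__":
    portfolio = [
        UseCase("invoice_chasing", 5_000, 2_000, True, True, True),
        UseCase("listing_copy", 3_000, 2_000, True, False, False),
    ]
    print(f"{share_over_2x_within_60_days(portfolio):.0f}% of use cases above 2x")
    for uc in portfolio:
        print(uc.name, governance_maturity(uc))
```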

Strategic Imperative: Success in Q4 2025 isn’t measured by how quickly you deploy AI—it’s measured by how systematically you validate governed value capture before scaling, ensuring every pound of investment drives documented business outcomes without regulatory or operational risk.

Your Next Steps: Practical Implementation Roadmap

Immediate Actions (This Week):

- Secure access to eBay/OpenAI programme, Microsoft 365 Premium trial, or Google AI Pro evaluation to begin zero-cost capability assessment

- Identify 2-3 revenue-linked or cost-critical workflows where AI could deliver 20-40% efficiency gains within 30 days

- Draft basic governance framework: define permissible data sets, output validation owners, and minimum accuracy/explainability standards

Strategic Priorities (This Quarter):

- Establish digital champion network with formal peer learning sessions, use case sharing, and role-based coaching on prompt engineering and integration

- Design vendor-agnostic integration patterns that account for UK feature limitations and enable platform flexibility as market economics evolve

- Implement full-cost ROI tracking including human time for training, validation, governance—not just software subscription costs

Long-Term Considerations (This Year):

- Build board-level AI governance committee with quarterly KPI reporting on value capture, risk exposure, capability maturity, and competitive positioning

- Develop internal training pathways that create self-sufficient AI competence across functions, reducing dependency on external consultancy for routine deployments

- Create documented playbooks for repeatable use cases (content generation, admin automation, analytics) that accelerate future deployments with proven governance

Sources:

- UK Government: Local tech ready for take-off as 14 projects supporting businesses and jobs unveiled - Regional Tech Booster programme announcement

- eBay UK Collaborates With OpenAI To Launch AI Scheme For Small Businesses - £3 million SME AI training programme

- AI Magazine: Why eBay gives 10,000 sellers ChatGPT Enterprise access - eBay/OpenAI partnership details

- Microsoft launches 365 Premium to rival ChatGPT Plus - Microsoft 365 Premium announcement

- TechRepublic: Microsoft 365 Premium News - Platform pricing and BYOAI features

- Insurance Times: AI isn’t a free ticket - Clear Group leader warns brokers - UK insurance sector AI governance warnings

- Inside Media: AI, supply chains and the new face of cyber risk - Cyber security considerations for AI adoption

- Made Smarter North West - Digital Champions programme outcomes

- Connectivity 4 IR: First wave of South East manufacturers embrace digital transformation - Made Smarter South East cohort

- UK Authority: AI Topics - Scotland’s £1 million SME AI programme

- TechUK - UK AI ethics and governance initiatives

- ChatGPT Release Notes - Regional feature availability differences

- PCMag UK: Google Bard - Google AI Pro pricing and trials

- Business Reporter: From spreadsheets to AI - the shift in professional services - Professional services AI adoption

- Advent IM: Beyond chatbots - how AI is quietly transforming UK policing and healthcare - Public sector AI implementation

This strategic analysis was developed by Resultsense, providing AI expertise by real people.