The AI evaluation challenge facing UK SMEs is easy to state but hard to solve. With 75% of AI implementations failing to deliver value and 36% of projects abandoned before completion, a systematic evaluation framework is often what separates transformational success from costly failure.[1][2]

UK SME AI adoption has grown from 25% in 2024 to 35% by September 2025, yet this acceleration also increases risk exposure. Rushed vendor selection typically costs UK SMEs between £15,000 and £75,000 in failed implementations, and hidden costs compound the financial impact.[3] Poor data quality alone drains 5.87% of annual revenue for affected organisations.[4]

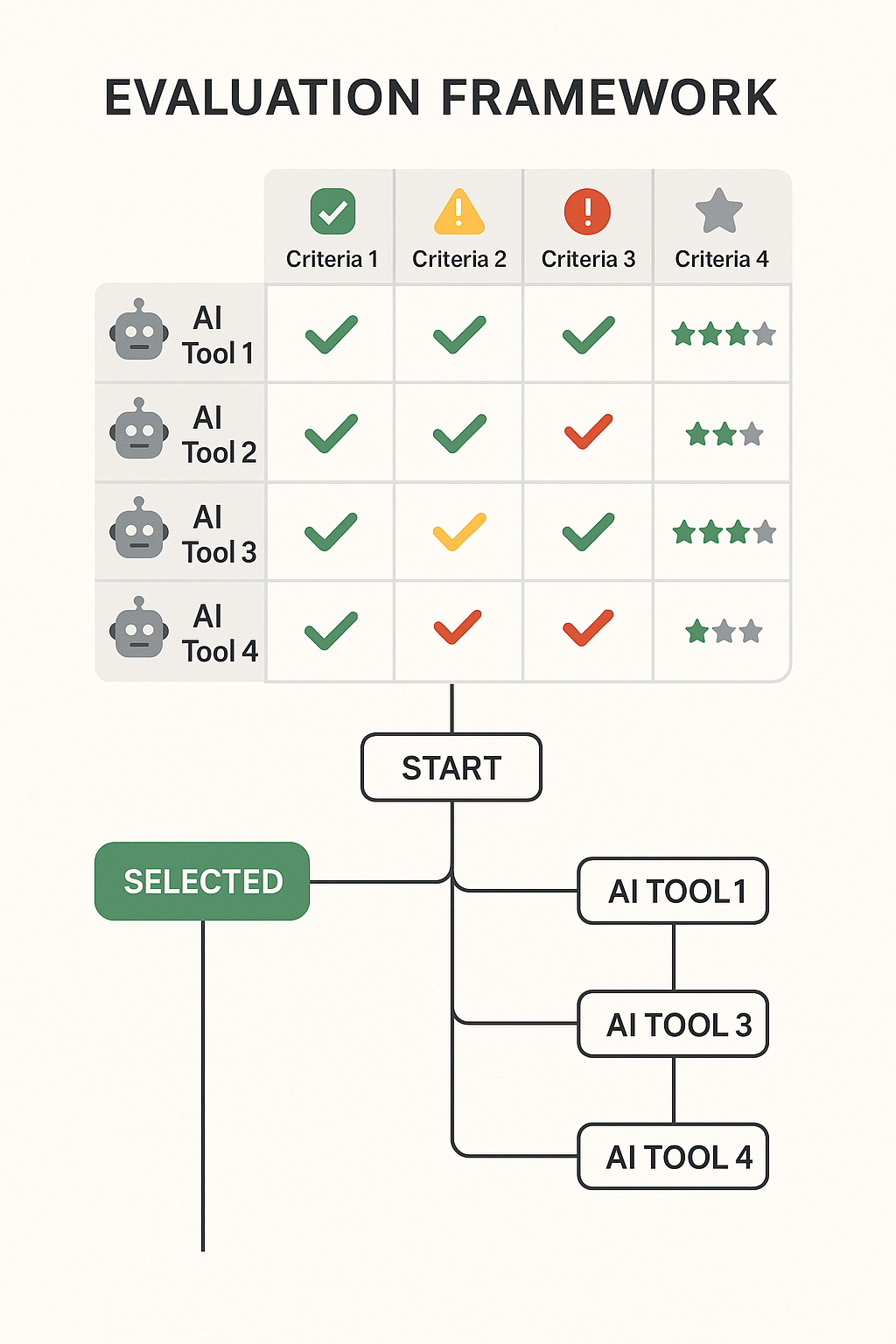

This strategic framework provides UK SME decision-makers with practical tools to evaluate, select, and implement AI solutions that align with business objectives whilst avoiding common pitfalls that derail initiatives.

The Strategic Challenge: Why Most AI Evaluations Fail

The Real Cost of Poor Selection

Failed AI implementations carry substantial financial and operational costs. Some research puts the failure rate even higher, estimating that 95% of AI projects stall at early stages, and that organisations abandon 46% of proof-of-concepts before they reach production.[5][6] For resource-constrained SMEs, failures on this scale can threaten business viability.

The financial burden extends beyond the initial investment. Data preparation alone is reported to consume 60-80% of project budgets, and integration work 40-60% of total costs.[7] These figures come from overlapping cost categories and should not be added together, but both point the same way: hidden expenses often double or triple initial vendor proposals, creating budget crises that force project abandonment.

Common Failure Patterns

Analysis of failed implementations reveals predictable patterns. Starting with technology rather than business problems appears in 85% of failed projects. Underestimating integration complexity adds technical challenges, and under-investing in change management adds organisational resistance.[8]

This evaluation gap underlies many of these failures: SMEs often lack frameworks to assess vendor capabilities, business alignment, and implementation readiness comprehensively. Without systematic evaluation, organisations select tools based on marketing claims rather than operational fit.

Framework Section 1: Business Readiness Assessment

Data Maturity Evaluation

Data maturity forms the foundation of successful AI implementation. Research suggests that businesses with mature data practices are three times more likely to achieve positive outcomes within the first year.[9] SMEs must assess data quality across three dimensions: accuracy, completeness, and accessibility.

The evaluation should examine data consistency across systems, standardised formats, and clear governance policies. Data accessibility requires particular attention: relevant business data must be consolidated and accessible to AI systems, in real time where the use case demands it. Many SMEs discover that data silos prevent effective AI deployment.
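To make the completeness and consistency checks concrete, the Python sketch below scores a small extract of records. The field names (`customer_id`, `email`, and so on) and both helper functions are hypothetical; treat this as an illustration of how the two measures can be calculated, not as a data-quality tool.

```python
# Hypothetical schema: replace these field names with the ones your systems use.
REQUIRED_FIELDS = ("customer_id", "email", "postcode", "last_order_date")


def completeness(records: list[dict], fields: tuple[str, ...] = REQUIRED_FIELDS) -> dict[str, float]:
    """Share of records in which each required field is present and non-empty."""
    if not records:
        return {field: 0.0 for field in fields}
    return {
        field: sum(1 for r in records if r.get(field) not in (None, "")) / len(records)
        for field in fields
    }


def consistency(system_a: dict[str, str], system_b: dict[str, str]) -> float:
    """Share of keys held in both systems whose values agree, ignoring case and whitespace."""
    shared = system_a.keys() & system_b.keys()
    if not shared:
        return 0.0
    agree = sum(
        1 for key in shared
        if system_a[key].strip().lower() == system_b[key].strip().lower()
    )
    return agree / len(shared)


# Illustrative CRM extract with gaps in email and postcode.
crm = [
    {"customer_id": "C1", "email": "a@example.co.uk", "postcode": "", "last_order_date": "2025-01-02"},
    {"customer_id": "C2", "email": None, "postcode": "M1 1AA", "last_order_date": "2025-02-03"},
]
print(completeness(crm))
# Email addresses for the same customers held in two different systems.
print(consistency({"C1": "a@example.co.uk", "C2": "b@example.com"},
                  {"C1": "A@Example.co.uk ", "C2": "old@example.com"}))
```

Here email and postcode are each only 50% complete, and the two systems agree on one of the two shared customers, which is the kind of gap worth finding before any vendor asks for your data.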

Privacy and compliance considerations demand rigorous assessment under UK GDPR. The framework must evaluate current data protection measures, consent management processes, and the organisation’s ability to maintain compliance when implementing AI systems that process personal data.[10]

Technical Infrastructure Assessment

Infrastructure readiness determines implementation feasibility. Cloud infrastructure evaluation should examine current hosting capabilities, scalability options, and security measures. SMEs must determine whether existing systems can support AI workloads or require costly upgrades.

Integration capabilities require thorough examination. Assessment should evaluate API availability, data flow mechanisms, and compatibility with existing business systems. Integration work typically accounts for 40-60% of total implementation costs, making this evaluation crucial for accurate budget planning.[7]

Security infrastructure must meet the enhanced requirements of AI systems, including robust cybersecurity measures, access controls, and monitoring capabilities that protect both the AI systems and the sensitive data they process.

Staff Capability and Change Management

Human capability assessment ensures the organisation has the skills needed for effective AI implementation. The Alan Turing Institute’s AI Skills for Business Competency Framework defines learner personas including AI Citizens (basic awareness), AI Workers (operational capability), and AI Professionals (advanced competency).[11]

Assessment should evaluate both technical capabilities (data literacy, understanding of AI concepts, system familiarity) and soft skills (change management, problem-solving, communication). Successful implementations typically require 2-4 hours of leadership time per week during the first 8-12 weeks.[7]

Change management planning addresses the human factors that determine adoption success. Research indicates that companies investing 70% of their AI budgets in people and processes, and only 30% in technology, achieve significantly better outcomes.[7] Individual team members typically need 4-16 weeks to become productive with AI tools, so structured training programmes are essential.

Framework Section 2: Strategic Alignment

Mapping AI to Business Objectives

Strategic evaluation begins with clearly defined business objectives, not available AI technologies. Successful SMEs start with specific challenges and evaluate AI’s potential contribution to solving them, preventing the common mistake of implementing solutions without clear business justification.[12]

Business objective mapping should focus on measurable outcomes. UK SMEs report successful implementations in customer service automation (returns within 4-8 months), content generation (improvements within 3-6 months), and process automation (returns within 6-12 months).[7]

The framework should prioritise objectives that align with proven AI capabilities. Customer relationship management, inventory optimisation, and financial forecasting are areas where AI demonstrates consistent value. More experimental applications call for a cautious approach given SME resource constraints.

ROI Calculation Methodology

Effective ROI calculation requires comprehensive cost and benefit analysis tailored to SME operations. Total Cost of Ownership (TCO) must include software licensing (30-50% of costs), integration and data work (40-60%), training and change management (10-20%), and ongoing operations (15-20%, recurring annually).[7] These ranges are indicative and overlap, so they will not sum neatly to 100% for any single project.
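One way to keep the TCO categories honest is to total one-off and recurring costs over a fixed horizon and then check each category's share against the ranges above. This is a minimal Python sketch: the figures are hypothetical, and treating licensing and operations as flat annual costs (no inflation or price rises) is an assumption about how a typical contract is structured.

```python
from dataclasses import dataclass


@dataclass
class CostLine:
    category: str
    one_off: float  # £ paid once, during implementation
    annual: float   # £ paid every year of the horizon (assumed flat, no inflation)


def total_cost_of_ownership(lines: list[CostLine], years: int) -> float:
    """One-off costs plus recurring costs over the chosen horizon."""
    return sum(line.one_off + line.annual * years for line in lines)


def category_shares(lines: list[CostLine], years: int) -> dict[str, float]:
    """Each category's share of TCO, for comparison with the indicative ranges."""
    tco = total_cost_of_ownership(lines, years)
    return {line.category: (line.one_off + line.annual * years) / tco for line in lines}


# Hypothetical three-year budget for a small deployment (illustrative, not a benchmark).
budget = [
    CostLine("software licensing", one_off=0, annual=5_000),
    CostLine("integration and data work", one_off=22_500, annual=0),
    CostLine("training and change management", one_off=5_000, annual=0),
    CostLine("ongoing operations", one_off=0, annual=2_500),
]

print(total_cost_of_ownership(budget, years=3))
for category, share in category_shares(budget, years=3).items():
    print(f"{category}: {share:.0%}")
```

With these inputs the three-year TCO is £50,000, split 30% / 45% / 10% / 15%, so licensing accounts for less than a third of the total.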

Benefit quantification should focus on measurable impacts. Time savings are the most tangible benefit, with successful implementations typically saving 20-50 staff hours per month. Revenue growth through improved customer engagement, cost reductions from automation, and error reduction provide additional quantifiable benefits.[13]

SMEs should plan for the “productivity J-curve”, in which productivity may initially decline as teams learn new workflows before efficiency gains are realised. This dip typically lasts 3-6 months and is followed by measurable improvements for organisations that persist through the learning period.[7]
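A simple way to turn time savings into a return figure is to value the hours at a loaded staff cost and compare that with annual running costs. The hourly rate and annual cost below are hypothetical assumptions, and the formula is the plain (benefit - cost) / cost ratio, not a discounted cash-flow model.

```python
def annual_time_saving_value(hours_per_month: float, loaded_hourly_cost: float) -> float:
    """£ value of staff time released over a year."""
    return hours_per_month * 12 * loaded_hourly_cost


def simple_roi(annual_benefit: float, annual_cost: float) -> float:
    """(benefit - cost) / cost as a fraction, so 0.4 means a 40% return."""
    if annual_cost <= 0:
        raise ValueError("annual_cost must be positive")
    return (annual_benefit - annual_cost) / annual_cost


# Hypothetical inputs: 35 hours a month saved (middle of the 20-50 range),
# a £30 loaded hourly staff cost, and £9,000 a year in running costs.
benefit = annual_time_saving_value(hours_per_month=35, loaded_hourly_cost=30)
roi = simple_roi(benefit, annual_cost=9_000)
print(f"Annual benefit £{benefit:,.0f}, ROI {roi:.0%}")
```

Re-running with 20 hours per month gives a return of -20% on the same cost, which shows how sensitive the business case is to where an implementation lands within the 20-50 hour range.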
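The J-curve matters because it pushes out the break-even point. The Python sketch below assumes a flat monthly loss during the learning dip followed by an immediate step up to the full monthly gain, which is a simplification of a real ramp-up; all figures are hypothetical.

```python
from typing import Optional


def monthly_net_value(month: int, full_gain: float, dip_cost: float, dip_months: int) -> float:
    """Net value in a 1-indexed month: a flat loss during the learning dip, then the full gain.

    Simplifying assumption: no gradual ramp-up between the dip and full productivity.
    """
    return -dip_cost if month <= dip_months else full_gain


def break_even_month(upfront_cost: float, full_gain: float, dip_cost: float,
                     dip_months: int, horizon: int = 36) -> Optional[int]:
    """First month in which cumulative net value reaches zero, or None within the horizon."""
    cumulative = -upfront_cost
    for month in range(1, horizon + 1):
        cumulative += monthly_net_value(month, full_gain, dip_cost, dip_months)
        if cumulative >= 0:
            return month
    return None


# Hypothetical figures: £10,000 upfront, £2,000/month gain, £1,000/month dip.
print(break_even_month(10_000, 2_000, 1_000, dip_months=4))  # with a 4-month dip
print(break_even_month(10_000, 2_000, 1_000, dip_months=0))  # ignoring the dip
```

With these inputs, a four-month dip moves break-even from month 5 to month 11, which is why budgets and stakeholder expectations should allow for the learning period.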

Timeline Expectations

Implementation timelines vary significantly with solution complexity and business readiness. Quick wins, typically delivered within 6-12 months, include customer service automation, document processing, and sales enablement tools. These applications provide early validation and build organisational confidence.[7]

Medium-term implementations spanning 9-18 months include predictive analytics systems, custom applications, and complex integration projects. SMEs should avoid unrealistic timeline expectations that contribute to project failure: vendors promising “full deployment in 30 days” for complex use cases typically deliver subpar results.

A phased approach tends to be most effective. Starting with pilot projects (£5,000-£20,000 investment) allows validation before committing to larger implementations, reducing risk whilst building internal capabilities and stakeholder confidence.[7]

Framework Section 3: Vendor Evaluation

Critical Assessment Criteria

SME vendor evaluation requires criteria tailored to the needs of smaller organisations. Unlike enterprise buyers, who often prioritise cutting-edge capabilities, SMEs should emphasise vendor stability, implementation simplicity, and ongoing support quality.

Financial stability assessment helps gauge vendor longevity. SMEs should evaluate funding history, customer growth rates, and revenue sustainability. Vendor failure leaves SMEs with substantial sunk costs and operational disruption.

Technical capability evaluation should focus on proven solutions rather than experimental features. SMEs should request case studies from similar-sized businesses in comparable sectors, performance metrics from existing implementations, and references from current customers. Vendors unable to provide this evidence may lack the maturity required for a successful partnership.

Red Flags in Vendor Claims

Several warning signs indicate potential implementation risks. Overpromising is the most common red flag, particularly claims of “solving all problems instantly” or “revolutionising operations overnight”. Realistic vendors acknowledge limitations and provide specific use cases with measurable outcomes.[14]

Lack of transparency around technology, data usage, or pricing structures indicates potential hidden costs or technical limitations. Vendors should provide clear explanations of how their AI works, what data it requires, and detailed pricing structures including ongoing costs.

A vendor unwilling to discuss failed or difficult implementations may be immature. Experienced vendors acknowledge challenges and give examples of how they address implementation difficulties. Unrealistic timeline promises are another significant red flag: complex implementations requiring data preparation, system integration, and user training cannot be completed in extremely short timeframes.[14]

Critical Questions for Vendors

Strategic questioning helps SMEs identify suitable partners whilst avoiding problematic providers. Questions on data requirements should establish specific volume, quality, and format needs: “What specific data do you require?” “How do you handle data quality issues?” “What data preparation work is required on our side?”

Questions on integration should identify technical and resource requirements: “Which systems do you integrate with natively?” “What custom integration work is required?” “Who performs integration and at what cost?” “How long does typical integration take for similar businesses?”

Questions on support and training establish ongoing partnership expectations: “What training is provided?” “What support is available post-implementation?” “What are typical response times?” “Do you have UK-based support staff?”

Questions on performance validate claims with specific metrics: “Can you provide specific ROI examples from similar clients?” “What performance metrics do you track?” “Can you provide references from similar-sized UK businesses?” “What happens if performance targets aren’t met?”

Proof of Concept Best Practices

Proof of concept development provides validation before full commitment. SMEs should structure POCs to test both technical functionality and business value within realistic operational constraints.

POC scope should focus on specific business problems rather than general capabilities. Successful POCs address clearly defined challenges with measurable success criteria, narrow enough to complete within 30-90 days whilst broad enough to demonstrate meaningful value.
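Success criteria are easiest to enforce when they are written down as baseline, target, and observed values before the POC starts. The Python sketch below is one hypothetical way to record them; the metric names and numbers are illustrative assumptions, and the rule that every criterion must pass is a design choice you may wish to relax.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    baseline: float  # pre-POC value, kept so the improvement can be reported
    target: float    # agreed before the POC starts
    observed: float  # measured during the POC
    higher_is_better: bool = True

    def met(self) -> bool:
        if self.higher_is_better:
            return self.observed >= self.target
        return self.observed <= self.target


def poc_passes(criteria: list[Criterion]) -> bool:
    """Design choice: the POC passes only if every pre-agreed criterion is met."""
    return all(c.met() for c in criteria)


# Hypothetical criteria for a customer-service automation POC.
criteria = [
    Criterion("tickets resolved without escalation (%)", baseline=40, target=55, observed=58),
    Criterion("average handling time (minutes)", baseline=12, target=9, observed=10,
              higher_is_better=False),
]

for c in criteria:
    print(f"{c.name}: baseline {c.baseline}, observed {c.observed}, target met: {c.met()}")
print("POC passes:", poc_passes(criteria))
```

Here handling time improved from 12 to 10 minutes but missed the 9-minute target, so the POC fails the strict rule even though both metrics moved in the right direction; that is the conversation to have with the vendor before signing.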

Data and system access requires careful planning. SMEs should provide representative data samples whilst maintaining security requirements. The POC environment should mirror production conditions as closely as possible to ensure realistic performance assessment.

Framework Section 4: Technical Due Diligence

Security and Data Privacy Requirements

UK SMEs implementing AI systems must navigate complex data protection requirements under UK GDPR, which applies the same principles as EU GDPR with UK-specific enforcement mechanisms.[10] Technical evaluation must assess whether AI systems can maintain compliance whilst delivering business value.

Data Protection Impact Assessment (DPIA) requirements mandate thorough evaluation for high-risk AI implementations. UK GDPR requires a DPIA wherever processing is likely to result in a high risk to individuals, and ICO guidance indicates that most AI deployments involving personal data will meet that threshold. The DPIA should examine data collection methods, processing purposes, retention periods, and rights protection measures.[15]

Technical safeguards assessment ensures AI systems implement appropriate data protection measures including encryption for data in transit and at rest, access controls limiting exposure, automated retention management, and audit logging for compliance monitoring.
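Automated retention management can be as simple as comparing each record's age with the retention period for its category. The Python sketch below assumes a hypothetical in-memory record format and retention schedule; a real system would read these from your records store and your DPIA-approved retention policy, and would write each deletion decision to the audit log.

```python
from datetime import date, timedelta

# Hypothetical retention schedule; real periods come from your retention policy and DPIA.
RETENTION = {
    "support_ticket": timedelta(days=730),
    "marketing_lead": timedelta(days=365),
}


def retention_review(records: list[dict], today: date) -> tuple[list[str], list[str]]:
    """Return (IDs past their retention period, IDs whose category has no policy)."""
    overdue, unclassified = [], []
    for record in records:
        period = RETENTION.get(record["category"])
        if period is None:
            # No policy for this category: flag for human review rather than keep indefinitely.
            unclassified.append(record["id"])
        elif today - record["created"] > period:
            overdue.append(record["id"])
    return overdue, unclassified


# Illustrative records (hypothetical IDs and categories).
records = [
    {"id": "T1", "category": "support_ticket", "created": date(2022, 1, 10)},
    {"id": "L1", "category": "marketing_lead", "created": date(2025, 3, 1)},
    {"id": "X1", "category": "call_recording", "created": date(2024, 5, 1)},
]
print(retention_review(records, today=date(2025, 6, 1)))
```

Returning unclassified records separately, rather than silently skipping them, is a deliberate choice: data with no retention policy is itself a compliance gap.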

Scalability Considerations

Scalability assessment ensures AI systems accommodate business growth without requiring complete reimplementation. Growing SMEs face particular challenges as data volumes, user counts, and processing requirements increase.

Processing capacity evaluation should examine AI systems’ ability to handle increasing data volumes and computational demands. Assessment must consider current baseline requirements and projected growth over 3-5 years. Systems requiring expensive infrastructure upgrades or complete replacement create unsustainable cost structures.

User scalability assessment examines licensing models and system architecture’s ability to accommodate growing teams. Many AI vendors use per-user pricing that becomes prohibitively expensive as organisations expand. Evaluation should project total costs across realistic growth scenarios.
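Projecting per-user licence costs under a few growth scenarios shows how quickly they can overtake a flat-rate alternative. In this Python sketch the growth rates, the £40 per user per month price, and the £6,000-a-year flat plan are all hypothetical assumptions.

```python
def project_users(start_users: int, annual_growth: float, years: int) -> list[int]:
    """Users in each year under compound growth, rounded to whole seats."""
    return [round(start_users * (1 + annual_growth) ** year) for year in range(years)]


def per_user_licence_cost(start_users: int, annual_growth: float, years: int,
                          price_per_user_month: float) -> float:
    """Total per-user licence spend over the horizon (£)."""
    return sum(users * price_per_user_month * 12
               for users in project_users(start_users, annual_growth, years))


# Hypothetical comparison: £40 per user per month versus a £6,000-a-year flat plan.
FLAT_ANNUAL_FEE = 6_000
YEARS = 5
for growth in (0.0, 0.2, 0.5):
    per_user = per_user_licence_cost(10, growth, YEARS, price_per_user_month=40)
    flat = FLAT_ANNUAL_FEE * YEARS
    print(f"growth {growth:.0%}: per-user £{per_user:,.0f} vs flat £{flat:,.0f}")
```

For 10 users with no growth, the per-user plan costs £24,000 over five years and beats the £30,000 flat plan; at 20% annual growth it rises to £35,520, even though it is cheaper in year one (£4,800). The right choice depends on which growth scenario is realistic.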

API and Integration Capabilities

API assessment determines AI systems’ ability to integrate with existing infrastructure and accommodate custom requirements. Robust API capabilities enable SMEs to create seamless workflows between AI systems and other applications.

API documentation quality provides insight into integration complexity and vendor support quality. Comprehensive documentation with clear examples, authentication procedures, and error handling guidance indicates mature vendor capabilities. Poor documentation suggests difficult integration processes and potential support challenges.

Real-time versus batch processing capabilities determine workflow integration options. SMEs requiring immediate AI processing need real-time API capabilities, whilst others may accommodate batch processing for efficiency. Evaluation should align API capabilities with specific business requirements.

Data Portability and Vendor Lock-in Risks

Data portability assessment ensures SMEs can migrate data and maintain business continuity if vendor relationships terminate. Vendor lock-in represents significant risk for resource-constrained SMEs who cannot easily absorb migration costs or business disruption.

Data export capabilities evaluation examines vendors’ ability to provide complete data exports in standard formats. Assessment should determine whether SMEs can access raw data, processed results, trained AI models, and system configurations. Vendors restricting data export create dependency relationships limiting future flexibility.

Standard format support enables easier migration to alternative systems. AI vendors using proprietary data formats create migration barriers increasing switching costs and reducing negotiating leverage. Evaluation should assess compatibility with industry-standard formats.

Framework Section 5: Cost-Benefit Analysis

Total Cost of Ownership Calculation

Comprehensive TCO calculation prevents the budget surprises that plague many SME implementations. Software licensing represents only 30-50% of total costs, and the remaining expenses are often underestimated or overlooked entirely.[7]

Direct costs include licensing, infrastructure, cloud services, and professional services. Integration is typically the largest category, accounting for 40-60% of total budgets and covering system integration development, data migration, API development, and workflow redesign.[7]

Training and change management costs encompass staff training, process documentation, change management consulting, and productivity loss during transition. Ongoing operational costs include maintenance, support subscriptions, cloud infrastructure scaling, and continuous improvement activities.[7]
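The 30-50% licensing share explains why hidden costs can double or triple a vendor proposal: if licensing is a fraction of TCO, the implied total is the licence cost divided by that fraction. A minimal Python sketch, using a hypothetical £12,000 licence quote:

```python
def implied_tco(licence_cost: float, licence_share: float) -> float:
    """Total cost of ownership implied when licensing is `licence_share` of the total."""
    if not 0 < licence_share <= 1:
        raise ValueError("licence_share must be between 0 and 1")
    return licence_cost / licence_share


# Hypothetical vendor quote of £12,000 for licensing.
quote = 12_000
for share in (0.5, 0.4, 0.3):
    total = implied_tco(quote, share)
    print(f"licensing at {share:.0%}: TCO about £{total:,.0f} ({total / quote:.1f}x the quote)")
```

At a 50% share the implied total is £24,000 (2x the quote); at 30% it is £40,000 (about 3.3x).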

Hidden Costs Often Overlooked

Data preparation and management is the most significant hidden category; some estimates put it at 60-80% of project budgets.[4] This includes data cleaning, standardisation, integration, and ongoing quality management. Because data work overlaps with integration, this figure and the integration estimate above should not be added together.

Compliance and regulatory costs add 20-40% to project budgets for organisations handling regulated data, including privacy impact assessments, security audits, compliance consulting, and ongoing monitoring systems.[16]

Infrastructure scaling costs emerge as AI usage grows. Cloud processing fees, storage costs, and bandwidth charges increase with system usage and can substantially exceed initial projections. Staff productivity loss during adoption creates indirect costs that affect business performance, with research indicating a 10-20% productivity decline during the first 3-6 months.[16]

Value Measurement Beyond Cost Savings

Strategic value measurement covers benefits beyond immediate cost reductions. Revenue growth opportunities include improved customer engagement, enhanced product offerings, new service capabilities, and market expansion possibilities. AI-powered personalisation has been reported to increase conversion rates by 20-25%.[17]

Competitive positioning improvements include faster decision-making capabilities, enhanced customer service quality, operational agility, and innovation capacity. Risk reduction benefits include improved compliance monitoring, enhanced security capabilities, reduced human error rates, and better business continuity planning.

Employee satisfaction improvements include reduced manual work burdens, enhanced decision-making support, improved work-life balance, and increased job satisfaction. Higher satisfaction reduces turnover costs and improves overall business performance.

Implementation Roadmap: 90-Day Evaluation Timeline

Phase 1 (Days 1-30): Requirements Definition

Weeks 1-2 focus on internal assessment, including business objective clarification, current process documentation, data inventory completion, and stakeholder requirement gathering. This foundation ensures AI evaluation aligns with specific business needs rather than generic technology capabilities.

Weeks 3-4 involve market research, including vendor identification, initial capability screening, budget parameter establishment, and preliminary vendor outreach. This phase creates a focused vendor shortlist based on SME-specific criteria and eliminates unsuitable options early.

Phase 2 (Days 31-60): Vendor Evaluation

Weeks 5-6 cover detailed vendor evaluation, including capability demonstrations, reference checks, security assessments, and integration requirement analysis. Vendors should provide specific case studies, performance metrics, and implementation timelines relevant to SME requirements.

Weeks 7-8 involve starting proof-of-concept work with shortlisted vendors, alongside detailed cost analysis, contract term review, and stakeholder feedback collection. POCs should address real business scenarios with measurable success criteria rather than generic functionality demonstrations.

Phase 3 (Days 61-90): Final Selection

Weeks 9-10 include final vendor comparison, ROI validation, risk assessment completion, and stakeholder alignment confirmation. This phase should produce a clear vendor ranking with a documented rationale for the selection decision.

Weeks 11-12 focus on contract negotiation, implementation planning, resource allocation, and change management preparation. This phase establishes the foundation for successful AI deployment whilst ensuring adequate preparation for organisational change.
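The vendor ranking can be documented as a weighted scorecard. In this Python sketch the criteria come from this framework (vendor stability, implementation simplicity, support quality, evidence from similar SMEs, data portability), but the weights, the 1-5 scoring scale, and the vendor scores are hypothetical.

```python
# Criteria drawn from this framework; the weights are hypothetical and should sum to 1.
WEIGHTS = {
    "vendor stability": 0.25,
    "implementation simplicity": 0.20,
    "support quality": 0.20,
    "evidence from similar SMEs": 0.20,
    "data portability": 0.15,
}
assert abs(sum(WEIGHTS.values()) - 1.0) < 1e-9, "weights must sum to 1"


def weighted_score(scores: dict[str, float]) -> float:
    """Weighted average of 1-5 scores; refuses to score a vendor with missing criteria."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"unscored criteria: {sorted(missing)}")
    return sum(weight * scores[criterion] for criterion, weight in WEIGHTS.items())


def rank(vendors: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Vendors ordered from highest to lowest weighted score."""
    return sorted(((name, weighted_score(s)) for name, s in vendors.items()),
                  key=lambda pair: pair[1], reverse=True)


# Hypothetical 1-5 scores gathered during demonstrations, reference checks and POCs.
vendors = {
    "Vendor A": {"vendor stability": 4, "implementation simplicity": 3, "support quality": 5,
                 "evidence from similar SMEs": 4, "data portability": 2},
    "Vendor B": {"vendor stability": 3, "implementation simplicity": 4, "support quality": 3,
                 "evidence from similar SMEs": 3, "data portability": 5},
}
for name, score in rank(vendors):
    print(f"{name}: {score:.2f}")
```

Here Vendor A scores 3.70 and Vendor B 3.50; keeping the per-criterion scores alongside the totals provides the documented rationale the selection decision needs.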
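To turn the 90-day plan into calendar dates, the phase boundaries can be offset from a chosen start date. This is a small Python sketch; the start date is an arbitrary example, and counting the start date itself as day 1 is an assumption.

```python
from datetime import date, timedelta

# Phase boundaries (day numbers) from the 90-day evaluation timeline.
PHASES = [
    ("Phase 1: Requirements Definition", 1, 30),
    ("Phase 2: Vendor Evaluation", 31, 60),
    ("Phase 3: Final Selection", 61, 90),
]


def schedule(start: date) -> list[tuple[str, date, date]]:
    """Calendar start and end date of each phase, counting `start` as day 1."""
    return [
        (name, start + timedelta(days=first - 1), start + timedelta(days=last - 1))
        for name, first, last in PHASES
    ]


# Example start date (arbitrary).
for name, begins, ends in schedule(date(2025, 1, 6)):
    print(f"{name}: {begins:%d %b %Y} to {ends:%d %b %Y}")
```

Starting on 6 January 2025, Phase 1 runs to 4 February, Phase 2 starts on 5 February, and Phase 3 ends on 5 April.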

Actionable Takeaways for UK SMEs

Immediate Actions (This Week)

- Assess current AI readiness across data maturity, technical infrastructure, and staff capabilities using this framework’s assessment criteria

- Identify 3-5 potential use cases focusing on repetitive, high-volume tasks with measurable outcomes and clear business value

- Establish an evaluation team including representatives from operations, IT, finance, and affected business units

Strategic Priorities (This Quarter)

- Conduct a data maturity audit examining data quality, accessibility, and compliance capabilities before vendor evaluation begins

- Develop an AI governance framework addressing acceptable use, data protection requirements, and human oversight protocols

- Create a vendor evaluation scorecard based on this framework’s criteria, weighted by business-specific priorities

- Follow the 90-day evaluation timeline, systematically assessing shortlisted vendors through demonstrations, references, and proof-of-concepts

- Establish baseline measurements for priority use cases to enable accurate ROI tracking and validation of implementation success

Long-Term Considerations (This Year)

- Build internal AI capability through training programmes or external expertise partnerships, reducing vendor dependency

- Implement a phased approach, starting with pilot projects that validate value before committing to larger implementations

- Establish regular governance reviews that adapt to evolving regulatory requirements and emerging business needs

- Create knowledge-sharing mechanisms that document lessons learned and successful practices for organisational learning

- Develop continuous improvement processes that regularly optimise AI implementations based on performance metrics and user feedback

Strategic Conclusion

The systematic evaluation framework presented here gives UK SMEs the tools needed to navigate AI adoption successfully whilst avoiding the pitfalls that cause most implementations to fail. Success depends not on selecting the most advanced AI technology, but on choosing solutions that align with business objectives, organisational capabilities, and strategic goals.

Effective AI evaluation rests on thorough preparation, systematic vendor assessment, and realistic implementation planning. SMEs that invest time in comprehensive evaluation, maintain focus on proven applications, and prepare adequately for organisational change position themselves for sustainable competitive advantage through AI adoption.

As AI technology continues to evolve rapidly, the frameworks and principles outlined here provide a stable foundation for ongoing success. SMEs that apply these systematic approaches to AI evaluation and selection will be well positioned to capitalise on AI opportunities whilst avoiding the costly mistakes that plague hasty or inadequately planned implementations.

The future belongs to organisations that harness AI effectively whilst maintaining the human judgement, customer relationships, and operational excellence that define successful SMEs. This framework provides a roadmap for achieving that balance through informed decision-making and strategic AI adoption.

This strategic framework was developed by Resultsense, which provides AI expertise delivered by real people. For tailored AI evaluation guidance and implementation support, book a consultation.

Footnotes

1. https://www.arcsystems.co.uk/news/why-95-of-ai-projects-fail-and-what-you-can-do-about-it/

2. https://www.cfodive.com/news/AI-project-fail-data-SPGlobal/742784/

3. https://www.vistapartners.co.uk/news/business-news/archive/article/2025/September/more-smes-unlocking-ai

4. https://quaylogic.com/the-cost-of-bad-data-why-data-quality-matters-for-the-uks-ai-future-and-how-to-fix-it/

5. https://www.arcsystems.co.uk/news/why-95-of-ai-projects-fail-and-what-you-can-do-about-it/

6. https://www.cfodive.com/news/AI-project-fail-data-SPGlobal/742784/

7. https://www.gigcmo.com/blog/the-real-cost-of-ai-implementation-for-smes-gigcmo

8. https://rjj-software.co.uk/blog/why-smes-fail-at-ai-implementation-and-how-to-succeed/

9. https://www.ncs-london.com/blog/ai-readiness-assessment-guide/

10. https://laceyssolicitors.co.uk/does-gdpr-apply-to-ai-a-guide-for-uk-business-owners/

11. https://www.turing.ac.uk/sites/default/files/2023-11/final_bridgeai_framework.pdf

12. https://rjj-software.co.uk/blog/why-smes-fail-at-ai-implementation-and-how-to-succeed/

13. https://www.ncs-london.com/blog/how-to-measure-roi-from-ai-solutions-metrics-every-smb-should-track/

14. https://www.magentic.in/blogs/5-red-flags-when-picking-an-ai-vendor

15. https://dataprotectionpeople.com/resource-centre/how-to-ensure-gdpr-compliance-when-using-ai/

16. https://tardigradetechnology.com/blog/ai-cost-benefit-analysis-small-business/

17. https://www.activdev.com/en/artificial-intelligence-for-smes-case-studies-examples/