From Experimentation to Advantage: Fixing Legal AI’s Culture Gap

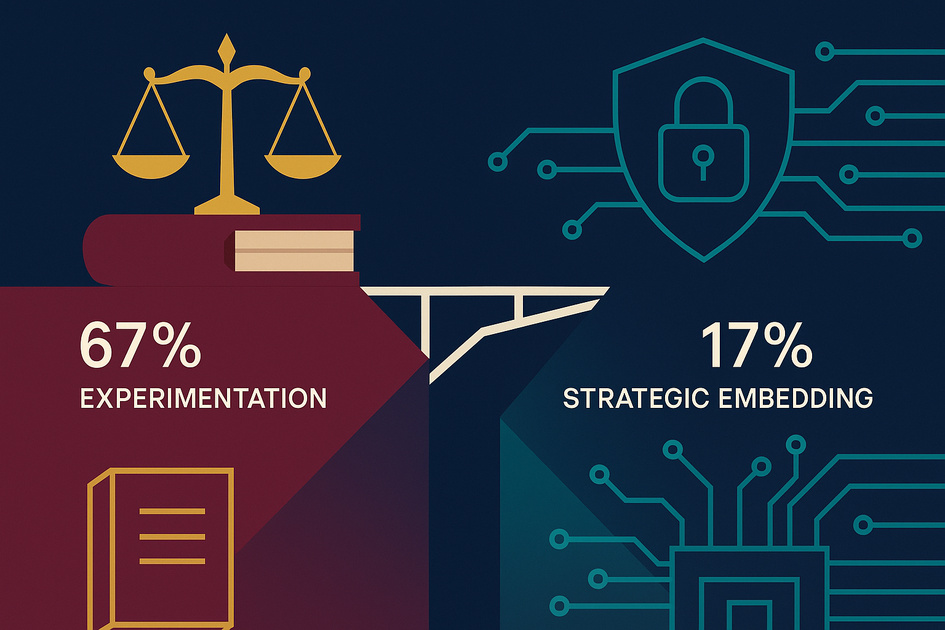

🎯 The 67% Paradox: Why Legal AI Usage Outpaces Strategic Integration

Most UK legal professionals now use generative AI at work (61% in the survey discussed below), yet only 17% report that AI is fully embedded in firm strategy and operations.

This paradox—high usage but low institutional confidence—signals a strategic inflection point where governance, culture, and billing models must catch up to usage to unlock sustainable value.

Key Finding: The binding constraint on legal AI value creation is not access to tools but organisational culture, trust architecture, and monetisation models.

This analysis decodes the numbers behind the headlines and translates them into an execution playbook that balances speed, safety, and measurable commercial impact—grounded in practitioner data, not vendor gloss.

📊 The Culture-Technology Lag: Why Legal AI Adoption Stalls

A new survey of 700+ UK legal professionals finds that generative AI use rose from 46% in January 2025 to 61% by late summer, while the share with “no plans” to adopt fell from 15% to 6%, which indicates mainstreaming rather than fringe experimentation.

Yet the institutionalisation gap is stark: only 17% say AI is fully embedded, and roughly two‑thirds characterise their organisation’s AI culture as slow or non-existent, pointing to governance and enablement shortfalls rather than tooling deficits.

Nearly half of practitioners still rely on general-purpose AI rather than legal-specific tools, which amplifies perceived risk and undermines trust in outputs. This is a solvable design and enablement problem rather than an inevitable cost of adoption.

The Real Story Behind the Headlines

The headline usage figure hides differences in usage quality, monetisation readiness, and trust that determine whether AI creates margin or merely creates risk.

The most common reality is “experimenting but progress is slow,” which stalls compounding returns and encourages shadow AI habits, widening the gap between early performance gains and firm-wide operating model change.

Critical Numbers That Matter:

| Metric | Reported Value | Strategic Implication |
|---|---|---|
| Active GenAI users | 61% of UK legal professionals | Value hinges on moving from tool exposure to measurable, policy-compliant workflows |
| Strategy embedding | 17% say AI is fully embedded | Without ownership, incentives, and standards, benefits remain local and fragile |
| Tool choice split | 51% legal AI vs 49% general AI | Trust and quality rise with curated legal sources; governance should steer choices |

🔍 Deep Dive: Converting Adoption into Confidence

Adoption at scale without confidence is a liability; the data shows confidence rises when outputs are grounded in vetted legal sources and enterprise guardrails, not when usage grows indiscriminately.

Critical Insight: Tool selection is a trust decision first and a features decision second; legal‑specific models and content pipelines measurably increase user confidence and reduce remediation costs.

What’s Really Happening

Two usage curves are diverging: legal-specific AI with curated sources is building trust and measurable returns, while general-purpose AI cultivates speed but also hallucination, leakage, and rework risk that erodes net value.

The centre of gravity remains “experiment, slowly,” which caps learning rates and blocks the feedback loops needed to harden policies, prompts, and playbooks for consistent matter outcomes.

The result is shadow AI and uneven quality—predictable when incentives favour speed but governance is lagging—leading to brittle wins rather than a scalable operating advantage.

Success Factors Often Overlooked:

- Trust architecture by design: Curated sources, evaluation sets, and review protocols drive confidence (88% report higher trust using legal AI), which directly reduces downstream remediation costs.

- Billing model readiness: With 47% expecting AI to transform billing, pricing, scoping, and value articulation need redesign to capture, not cannibalise, efficiency gains.

- Enablement time and norms: “Experimenting but slow” reflects insufficient protected time and role-based prompts/playbooks, not lack of interest or tools.

The Implementation Reality

Despite rising usage, only a small subset report heavy day-to-day use, indicating fragmented workflows and unclear criteria for when AI is “safe to use” versus “nice to try.”

Firms over-index on procurement and under-index on operationalisation—prompt libraries, matter taxonomies, and review standards—so value remains idiosyncratic rather than systemic.

⚠️ Warning: Without explicit controls, general AI usage magnifies two top-cited risks—fabrication/inaccuracy and confidentiality leakage—undermining client trust and regulatory posture.

💡 Strategic Analysis: Monetisation, Talent, and Operating Model

The commercial signal is clear: over half of users channel time saved into billable work, and nearly as many into wellbeing, but pricing, staffing, and client communication must evolve to avoid a “faster-for-less” trap.

Beyond the Technology: The Human Factor

Associates prioritise billable hours over wellbeing while partners and GCs anticipate billing model change—an alignment challenge requiring narrative, metrics, and incentives that value accuracy and client outcomes, not just speed.

A tangible retention risk emerges: 18% would consider leaving if firms underinvest in AI (rising to 26% in large firms), making enablement and visible progress a talent brand issue, not just a tech roadmap.

Confidence differentials reflect enablement quality: legal-specific tools, training, and review protocols raise trust and reduce rework, validating a “guardrails-first” rollout strategy.

Stakeholder Impact Assessment:

| Stakeholder Group | Primary Impact | Required Support | Success Metrics |
|---|---|---|---|
| Executive Leadership | Margin shape shifts as efficiency meets pricing pressure | Billing model redesign; risk appetite and policy clarity | Matter gross margin; AI-attributed revenue; risk incidents |
| Middle Management | Throughput up but review burdens shift to supervisors | Playbooks, review standards, and workload rebalance | Rework rate; review cycle time; utilisation stability |
| Front-line Teams | Speed gains; uneven trust without curated sources | Role-based prompts, evaluation sets, coaching time | First-pass accuracy; time-to-draft; escalation rate |
| Customers/Users | Expectations move to “faster and surer” outcomes | Transparent scope, QA checkpoints, price architecture | Net promoter; variance to estimate; quality issues |

What Actually Drives Success

Trustable inputs and reviewable outputs beat raw speed; firms that standardise on legal-specific models and measurable review protocols convert productivity into lower risk and higher margin.

Monetisation depends on pricing architecture, not just time saved; firms anticipating billing transformation move faster from pilot gains to portfolio impact.

🎯 Success Redefined: Measure “safe automation share” per matter—percentage of work executed with verified prompts, curated sources, and documented review—alongside margin and client outcome, not just hours saved.
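
One way to make “safe automation share” concrete is sketched below. The `Task` record and its field names are hypothetical, and weighting by hours is an assumption; the definition above only says “percentage of work”, so a firm could equally weight by task count or fee value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    """One unit of work on a matter (hypothetical record shape)."""
    hours: float
    verified_prompt: bool    # executed with an approved prompt or playbook
    curated_sources: bool    # grounded in vetted legal content
    review_documented: bool  # a human review was recorded


def safe_automation_share(tasks: list[Task]) -> float:
    """Fraction of matter hours that meet all three 'safe' criteria (0.0 to 1.0)."""
    total_hours = sum(t.hours for t in tasks)
    if total_hours == 0:
        return 0.0  # no recorded work, so nothing to attribute
    safe_hours = sum(
        t.hours
        for t in tasks
        if t.verified_prompt and t.curated_sources and t.review_documented
    )
    return safe_hours / total_hours


# Illustrative matter: only the first task satisfies every criterion.
matter = [
    Task(hours=4.0, verified_prompt=True, curated_sources=True, review_documented=True),
    Task(hours=2.0, verified_prompt=True, curated_sources=False, review_documented=True),
    Task(hours=4.0, verified_prompt=False, curated_sources=False, review_documented=False),
]
print(f"Safe automation share: {safe_automation_share(matter):.0%}")  # 40%
```

Requiring all three criteria at once is deliberately strict: work done with an approved prompt but unvetted sources does not count as safe.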

🚀 Bridging the Gap: Your Cultural Transformation Playbook

Based on this analysis, here is a practical implementation framework for firms moving from AI experimentation to embedded, governed use:

💡 Implementation Framework

Phase 1: Foundation (Months 1-3)

- Establish trust architecture: curate legal sources, define review standards, and set red lines (hard limits) for handling confidential data.

- Build role-based prompt libraries and evaluation sets for top five matter types by revenue.

- Redesign billing guardrails (fixed, subscription, outcome-based) for AI-influenced work scopes.

Phase 2: Pilot & Learn (Months 4-6)

- Run controlled pilots on high-volume, low-risk workflows with paired human-in-the-loop review.

- Instrument metrics: first-pass accuracy, rework, safe automation share, matter margin variance.

- Launch a visible enablement programme: office hours, playbooks, and manager coaching.
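
The pilot metrics named in this phase can be instrumented with very little tooling. The sketch below is a minimal illustration, assuming a hypothetical `DraftReview` record captured at each human-in-the-loop review; the field names and the exact metric definitions are assumptions, not taken from the survey.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DraftReview:
    """Outcome of one human review of an AI-assisted draft (hypothetical shape)."""
    accepted_first_pass: bool  # reviewer approved without substantive changes
    draft_hours: float         # time spent producing the draft
    rework_hours: float        # time spent correcting it after review


def first_pass_accuracy(reviews: list[DraftReview]) -> float:
    """Share of drafts approved at first review."""
    if not reviews:
        return 0.0
    return sum(r.accepted_first_pass for r in reviews) / len(reviews)


def rework_rate(reviews: list[DraftReview]) -> float:
    """Rework hours as a fraction of drafting hours."""
    drafting = sum(r.draft_hours for r in reviews)
    if drafting == 0:
        return 0.0
    return sum(r.rework_hours for r in reviews) / drafting


def margin_variance(planned_margin: float, actual_margin: float) -> float:
    """Actual minus planned matter margin; negative means margin fell short."""
    return actual_margin - planned_margin


# Illustrative pilot data: four reviewed drafts, one of which needed rework.
pilot = [
    DraftReview(accepted_first_pass=True, draft_hours=1.0, rework_hours=0.0),
    DraftReview(accepted_first_pass=True, draft_hours=2.0, rework_hours=0.0),
    DraftReview(accepted_first_pass=False, draft_hours=2.0, rework_hours=1.5),
    DraftReview(accepted_first_pass=True, draft_hours=1.0, rework_hours=0.0),
]
print(f"First-pass accuracy: {first_pass_accuracy(pilot):.0%}")
print(f"Rework rate: {rework_rate(pilot):.0%}")
print(f"Margin variance: {margin_variance(0.35, 0.31):+.2f}")
```

Capturing these records at the review step, rather than reconstructing them later from time entries, keeps the metrics tied to the same human-in-the-loop checkpoint the pilot already requires.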

Phase 3: Scale & Optimise (Months 7-12)

- Scale by matter family; enforce usage standards and deprecate unsafe general AI patterns.

- Introduce adaptive pricing tied to AI-enabled scope decomposition and outcome metrics.

- Institutionalise continuous evaluation: quarterly prompt/playbook refresh and audit.

Priority Actions for Different Contexts

For Organisations Just Starting:

- Select a legal-specific AI and lock a curated content pipeline before expanding access.

- Define what “approved use” means per workflow and build minimal review checklists.

- Avoid uncontrolled general AI exposure that creates leakage and hallucination risks.

For Organisations Already Underway:

- Consolidate to standard prompts/playbooks and track safe automation share per matter.

- Pilot new billing constructs on AI-impacted scopes to preserve margin.

- Reassess risk posture: refresh confidentiality and client consent guidance.

For Advanced Implementations:

- Optimise evaluation with gold sets and regression tests for prompts and models.

- Add retrieval-augmented generation with firm knowledge and vetted precedents.

- Pivot from time to value metrics—cycle time, outcome variance, and client impact.
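
As a rough illustration of gold sets and prompt regression tests, the sketch below checks that a model's output still contains phrases a reviewer has marked as required. Everything here is hypothetical: `stub_generate` stands in for whatever approved tool a firm uses, and substring matching is a deliberately crude scoring rule that a real evaluation would likely replace with reviewer-graded rubrics.

```python
from typing import Callable

# A gold case pairs an input with phrases the output must contain (assumed format).
GoldCase = tuple[str, list[str]]


def run_regression(
    generate: Callable[[str], str],
    gold_set: list[GoldCase],
    min_pass_rate: float = 1.0,
) -> tuple[bool, float, list[tuple[str, list[str]]]]:
    """Run every gold case and return (passed, pass_rate, failures)."""
    if not gold_set:
        raise ValueError("gold_set must contain at least one case")
    failures = []
    for text, required_phrases in gold_set:
        output = generate(text).lower()
        missing = [p for p in required_phrases if p.lower() not in output]
        if missing:
            failures.append((text, missing))
    pass_rate = 1 - len(failures) / len(gold_set)
    return pass_rate >= min_pass_rate, pass_rate, failures


def stub_generate(text: str) -> str:
    """Stand-in for a real model call; swap in the firm's approved tool."""
    return f"Summary: {text} Governing law: England and Wales."


gold_set: list[GoldCase] = [
    ("Clause 12 limits liability to fees paid.", ["liability", "governing law"]),
    ("Either party may terminate on 30 days' notice.", ["terminate", "indemnity"]),
]

passed, rate, failures = run_regression(stub_generate, gold_set)
print(f"passed={passed} pass_rate={rate:.0%}")
for text, missing in failures:
    print(f"FAILED: {text!r} is missing {missing}")
```

Re-running the same gold set whenever a prompt, playbook, or model version changes is what turns it into a regression test: a drop in pass rate flags the change before it reaches live matters.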

⚠️ Hidden Challenges Nobody Talks About

Challenge 1: Usage Without Monetisation

Efficiency without pricing redesign compresses margin as clients expect faster-for-less by default.

Mitigation Strategy: Tie AI-enabled scope to fixed/outcome pricing with transparent QA checkpoints and value narratives.
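
A worked example shows why pricing matters here. The figures below are purely illustrative assumptions (a £300 hourly rate, £150 hourly cost, and a task that drops from 10 hours to 6 with AI), not data from the survey.

```python
def matter_profit(revenue: float, hours: float, cost_per_hour: float) -> float:
    """Profit on a matter: revenue minus the cost of the hours worked."""
    return revenue - hours * cost_per_hour


# Illustrative assumptions only.
HOURLY_RATE = 300.0   # fee charged per hour (GBP)
COST_PER_HOUR = 150.0  # fully loaded cost per hour (GBP)
HOURS_BEFORE = 10.0    # effort without AI
HOURS_AFTER = 6.0      # effort with AI
FIXED_FEE = 3000.0     # fee agreed up front for the same scope

hourly_before = matter_profit(HOURLY_RATE * HOURS_BEFORE, HOURS_BEFORE, COST_PER_HOUR)
hourly_after = matter_profit(HOURLY_RATE * HOURS_AFTER, HOURS_AFTER, COST_PER_HOUR)
fixed_after = matter_profit(FIXED_FEE, HOURS_AFTER, COST_PER_HOUR)

print(f"Hourly billing, before AI: £{hourly_before:,.0f}")
print(f"Hourly billing, with AI:   £{hourly_after:,.0f}")
print(f"Fixed fee, with AI:        £{fixed_after:,.0f}")
```

Under these assumptions, hourly billing passes the whole efficiency gain to the client and profit per matter falls from £1,500 to £900, whereas the fixed fee lifts it to £2,100. In practice the outcome would sit between these extremes, depending on how much of the saving is shared through price.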

Challenge 2: The “Experiment, Slowly” Trap

Slow experimentation erodes learning rates and encourages shadow AI, creating uneven quality and governance debt.

Mitigation Strategy: Time-box pilots, enforce standard playbooks, and publish visible success metrics firm-wide.

Challenge 3: Technical and Integration Debt

Fragmented tools without curation, testing, and review pipelines inflate rework and incident risk over time.

Mitigation Strategy: Centralise trust architecture—curated sources, evaluation sets, and model routing policies.
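
A model routing policy can be as simple as a small, auditable decision function. The sketch below is one hypothetical policy, not a recommended standard: the sensitivity levels, the tool categories, and the rule that confidential material needs recorded client consent are all assumptions a firm would set for itself.

```python
from enum import Enum


class Sensitivity(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    CLIENT_CONFIDENTIAL = "client confidential"


class Tool(Enum):
    GENERAL_AI = "general-purpose AI"
    LEGAL_AI = "legal-specific AI"
    HUMAN_ONLY = "no AI (human only)"


def route(
    sensitivity: Sensitivity,
    needs_legal_authority: bool,
    client_consent_recorded: bool = False,
) -> Tool:
    """Pick the permitted tool for a task under this hypothetical policy."""
    if sensitivity is Sensitivity.CLIENT_CONFIDENTIAL:
        # Confidential material only goes to the approved legal tool, and only with consent.
        return Tool.LEGAL_AI if client_consent_recorded else Tool.HUMAN_ONLY
    if needs_legal_authority:
        # Anything citing law should be grounded in curated legal sources.
        return Tool.LEGAL_AI
    # Non-confidential, non-legal tasks may use general-purpose AI.
    return Tool.GENERAL_AI


print(route(Sensitivity.PUBLIC, needs_legal_authority=False).value)
print(route(Sensitivity.INTERNAL, needs_legal_authority=True).value)
print(route(Sensitivity.CLIENT_CONFIDENTIAL, needs_legal_authority=True).value)
```

Keeping the policy in one function means a change in risk appetite is a single reviewed edit rather than a memo that each team interprets differently.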

Challenge 4: Scaling Beyond Early Adopters

Initial champions mask the enablement load required for broad adoption, stalling firm-wide performance gains.

Mitigation Strategy: Fund enablement as a product: role-based training, manager coaching, and continuous evaluation.

🎯 From Usage to Value: Making Legal AI Strategic

The UK legal market has crossed the adoption chasm, but advantage accrues to firms that encode trust, monetise speed, and scale enablement—turning experimentation into an operating model change.

Three Critical Success Factors

- Human-centred enablement: Protected time, coaching, and role-based playbooks convert interest into confident, consistent use.

- Process and governance: Curated sources, evaluation sets, and review standards reduce rework and risk at scale.

- Measurement and monetisation: Track safe automation share and align pricing to capture—not donate—efficiency gains.

Reframing Success

Success is not “AI used” but “AI trusted and monetised,” evidenced by improved matter margins, lower variance to estimates, and stronger client outcomes.

The decisive shift is from hour-based productivity to outcome-based value, where speed is governed by quality and priced for impact, not discounted by default.

Bottom Line: The winners will treat AI as an operating system—trust by design, billing by design, enablement by design—turning cultural drag into durable commercial edge.

Your Next Steps

Immediate Actions (This Week):

- Inventory AI-influenced workflows and flag uncontrolled general AI usage.

- Set interim review standards and data-handling guidance for all pilots.

- Identify one high-volume, low-risk workflow for a 30‑day controlled pilot.

Strategic Priorities (This Quarter):

- Stand up a trust architecture: curated sources, evaluation sets, and approval gates.

- Redesign pricing and scoping for AI-enabled work; brief partners and GCs.

- Launch enablement: role-based prompt libraries, coaching, and office hours.

Long-term Considerations (This Year):

- Institutionalise continuous evaluation and prompt regression testing.

- Expand retrieval with firm knowledge and vetted precedents.

- Adopt “safe automation share” and margin variance as board-level metrics.

Source

LexisNexis, “The AI culture clash”, September 2025.

This strategic analysis was developed by Resultsense, providing AI expertise by real people. We help organisations navigate the complexity of AI implementation with practical, human-centred strategies that deliver real business value.