🎯 The Death of “Prompt and Pray”: Why AI Needs a Specification Revolution

One open-source toolkit collapses AI delivery chaos into four repeatable phases and three high-value use cases.

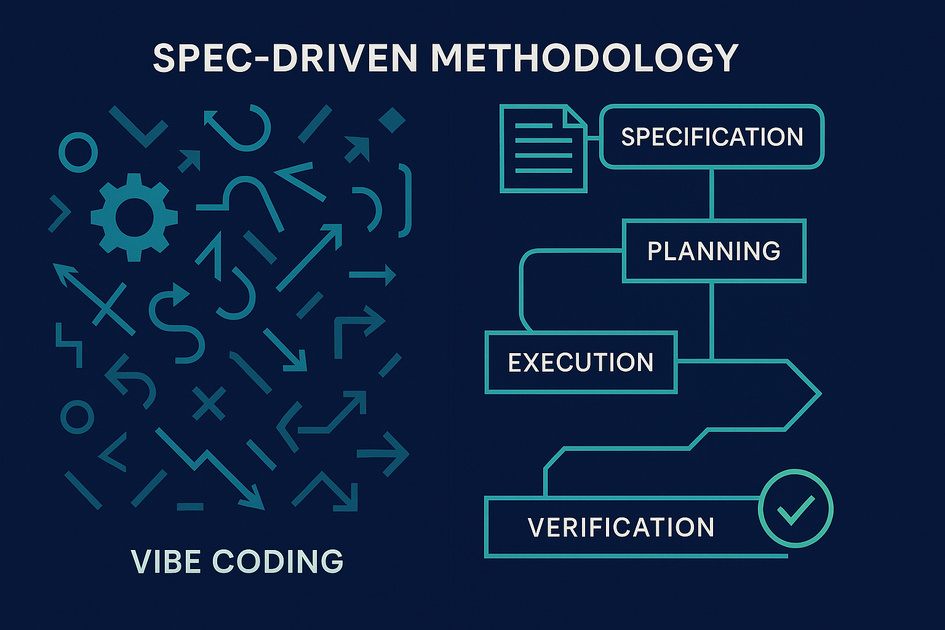

It replaces hopeful “prompt-and-pray” development with a disciplined flow that converts intent into implementable tasks for coding agents such as GitHub Copilot, Claude Code, and Gemini CLI.

Key Finding: Putting a living specification at the centre shifts AI from pattern completion to predictable execution, enabling teams to steer outcomes instead of debugging assumptions.

This analysis distils what matters strategically about spec-driven development, translating the approach into an operating model leaders can scale across portfolios without slowing delivery.

📊 Why Language Models Need Living Specifications

Spec-driven development responds to a simple constraint: language models are superb at completing patterns but cannot read minds. Ambiguous prompts therefore produce plausible code that often misses intent or violates constraints.

By moving the spec, plan, and task breakdown into first-class, executable artefacts, AI agents receive clarity on what to build, how to build it, and in what sequence, making reviews faster and rework rarer.

The approach is lightweight but opinionated: validate each phase before advancing, embed policies and architecture in the plan, and express work as small, testable tasks that agents can implement and verify.

The Real Story Behind the Headlines

This is not just a new tool; it is a governance model for AI-assisted delivery that codifies decision hygiene without adding bureaucracy.

The real innovation is separating the stable “what” from the flexible “how,” so teams can change course by updating the spec and regenerating the plan rather than rewriting swathes of code.

Crucially, organisational constraints—security, compliance, design systems—move from tribal knowledge to executable inputs, so AI aligns with the enterprise from day one.

Critical Numbers That Matter:

| Metric | Reported Value | Strategic Implication |
|---|---|---|
| Phases in the flow | 4 | Provides a minimal governance spine without heavy process overhead |
| Primary scenarios | 3 | Works for greenfield, feature work, and legacy modernisation, giving broad portfolio coverage |
| Named agent integrations | 3+ (Copilot, Claude Code, Gemini CLI) | Avoids lock-in; teams can standardise the method, not the vendor |

🔍 Deep Dive: From Prompts to Products

When specs become the single source of truth, AI stops improvising and starts delivering increments that map cleanly to business intent, technical standards, and acceptance criteria.

This makes planning reversible, implementation reviewable, and risk visible—before code lands—because each phase has explicit checkpoints that surface gaps early.

Critical Insight: The discipline is not more documentation—it is turning intent and constraints into executable artefacts that AI can act on, revise, and verify.

What’s Really Happening

The four-phase flow—Specify, Plan, Tasks, Implement—formalises a loop where humans steer and verify while agents generate artefacts and code, reducing cognitive load and coordination cost.

Specifications capture user journeys and outcomes, plans encode architecture and constraints, tasks decompose work into testable units, and implementation becomes a bounded review activity.

Because the “what” and “how” are distinct, teams can re-plan without binning the entire build, enabling safer iteration and faster convergence on fit-for-purpose solutions.
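The gated flow described above can be sketched as a small state machine. This is an illustrative model only, not the toolkit's implementation: the `Phase` and `Workflow` names and their methods are assumptions made for this example. It shows two properties the text relies on: a phase cannot be left until it has been validated, and re-planning keeps the approved spec while discarding downstream approvals.

```python
from dataclasses import dataclass, field
from enum import Enum


class Phase(Enum):
    SPECIFY = 1
    PLAN = 2
    TASKS = 3
    IMPLEMENT = 4


@dataclass
class Workflow:
    """Hypothetical tracker for one piece of work moving through the four phases."""
    phase: Phase = Phase.SPECIFY
    approved: set = field(default_factory=set)

    def approve(self) -> None:
        # A human reviewer signs off the artefact for the current phase.
        self.approved.add(self.phase)

    def advance(self) -> Phase:
        # Refuse to move on until the current phase has been validated.
        if self.phase not in self.approved:
            raise RuntimeError(f"{self.phase.name} has not been validated")
        if self.phase is not Phase.IMPLEMENT:
            self.phase = Phase(self.phase.value + 1)
        return self.phase

    def replan(self) -> None:
        # Change the "how" while keeping the approved "what".
        if Phase.SPECIFY not in self.approved:
            raise RuntimeError("cannot re-plan before the spec is validated")
        self.approved &= {Phase.SPECIFY}
        self.phase = Phase.PLAN
```

Calling `advance()` on a fresh `Workflow` raises an error because the spec has not been approved yet; after `approve()` it moves to `Phase.PLAN`. Calling `replan()` later returns the work to the Plan phase with only the spec approval retained.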

Success Factors Often Overlooked:

- Decision hygiene in planning: Quality plans require explicit standards, constraints, and non-functionals—the agent can only respect what is articulated.

- Context engineering for existing systems: Feature work in complex estates demands careful framing so new code feels native, not bolted on.

- Cross-functional ownership of specs: Security, design, and compliance requirements belong in the spec from day one, not in post hoc reviews.

The Implementation Reality

In practice, teams succeed when they treat each phase gate as a lightweight design review: confirm intent, confirm approach, confirm unit of work, then execute.

The payoff is focused diffs and smaller, verifiable changes, replacing thousand-line dumps with incremental improvements that map to clear tasks.

⚠️ Warning: The biggest risk is “spec rot”—if teams fail to update specs and plans as learning emerges, the process collapses back into vibe coding with bigger artefacts.

💡 Strategic Analysis: Portfolio-Ready AI Delivery

This method scales because it aligns with how organisations govern risk: articulate intent, set constraints, validate increments, and retain options to pivot without sunk-cost trauma.

It also normalises the handoff between product, platform, and delivery teams by making the spec and plan the boundary objects everyone can inspect, test, and evolve.

Beyond the Technology: The Human Factor

Spec-driven delivery reassigns accountability: humans curate intent and constraints, while agents generate and implement, which requires trust in the process as much as trust in the model.

Middle managers become orchestrators of clarity—ensuring standards, dependencies, and sequence are visible—rather than gatekeepers of ad hoc approvals.

Front-line teams experience fewer context switches because tasks are pre-validated and sized for review, improving flow and reducing cognitive fragmentation.

Stakeholder Impact Assessment:

| Stakeholder Group | Primary Impact | Required Support | Success Metrics |
|---|---|---|---|
| Executive Leadership | Increased predictability via phased checkpoints tied to intent and constraints | Portfolio-level templates for specs and plans; governance alignment | Throughput of validated increments; change lead time reduction |
| Middle Management | Clearer orchestration of standards, dependencies, and sequencing | Playbooks for phase reviews; context engineering guidance | Fewer rework cycles; conformance to architecture and policy |
| Front-line Teams | Smaller, testable units with focused reviews and reduced rework | Task sizing norms; acceptance criteria patterns; agent usage tips | PR size/variance; defect escape rate per task |
| Customers/Users | Faster convergence on usable features aligned to stated outcomes | Continuous validation rituals from “Specify” through “Tasks” | Time-to-first-value; satisfaction on outcome metrics |

What Actually Drives Success

The measure of maturity is not “more code faster,” but the fidelity between intent, plan, and implemented increments across cycles, which this approach makes observable.

Teams that excel use the phase gates as learning compasses, updating specs and plans quickly so the model’s next pass gets smarter, not just busier.

🎯 Success Redefined: Success is the rate of validated intent-to-increment conversion, not prompt-to-code velocity, because only validated increments reduce future risk.

🚀 Your Implementation Roadmap: From Pilot to Portfolio

Based on our analysis, here’s a practical implementation framework for organisations considering similar initiatives:

💡 Implementation Framework

Phase 1: Foundation (Months 1-3)

- Create organisation-wide templates for Specify, Plan, and Tasks, embedding security, compliance, and design rules up front.

- Establish lightweight phase reviews with clear acceptance criteria and decision logs to prevent spec rot.

- Pilot with one cross-functional squad and one agent tool, focusing on small features in an existing codebase.

Phase 2: Pilot & Learn (Months 4-6)

- Run two parallel pilots, one greenfield and one feature work in an existing system, to exercise distinct risk profiles.

- Instrument intent-to-increment metrics: plan conformance, PR size, rework rate, and time-to-first-value.

- Formalise context engineering practices for legacy and integrations to ensure “native-feel” changes.
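As a rough illustration of the instrumentation step, the sketch below computes three of the metrics named above from per-task records. The `TaskRecord` fields and the `summarise` function are hypothetical; in practice the inputs would come from whatever PR and review tooling a team already uses, and the definitions of "rework" and "conformance" would need to be agreed locally.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskRecord:
    """Hypothetical record of one completed task."""
    task_id: str
    lines_changed: int       # size of the PR that implemented the task
    reworked: bool           # True if the PR had to be redone after review
    conforms_to_plan: bool   # reviewer's judgement at the phase gate


def summarise(records):
    """Return simple intent-to-increment metrics for a list of TaskRecord."""
    if not records:
        raise ValueError("no task records to summarise")
    n = len(records)
    return {
        "median_pr_size": median(r.lines_changed for r in records),
        "rework_rate": sum(r.reworked for r in records) / n,
        "plan_conformance": sum(r.conforms_to_plan for r in records) / n,
    }
```

The median is used for PR size rather than the mean so that one unusually large change does not mask an otherwise healthy pattern of small increments; that choice is a design assumption, not something the source prescribes.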

Phase 3: Scale & Optimise (Months 7-12)

- Roll out templates and reviews across tribes; enable multiple agent tools to avoid lock-in to any vendor.

- Introduce continuous spec and plan diffing to drive change safety and architectural integrity.

- Codify a quarterly governance cadence that updates policies and patterns based on pilot learnings.

Priority Actions for Different Contexts

For Organisations Just Starting:

- Standardise a minimal spec template that captures user journeys and outcomes, not tech stacks.

- Define architecture and constraint checklists for the Plan phase, including security and compliance.

- Avoid multi-feature pilots; start with a single feature in an existing system to exercise context integration.

For Organisations Already Underway:

- Add explicit phase gates with acceptance criteria and decision logs to stabilise quality.

- Introduce task-sizing norms so agents generate small, verifiable work items with clear tests.

- Reassess where specs and plans live to ensure they are living artefacts—stop burying them in wikis.

For Advanced Implementations:

- Automate spec/plan drift detection and PR conformance checks to guard against silent divergence.

- Explore portfolio-level spec patterns for recurring domains and shared services.

- Combine spec-driven practices with advanced context engineering to accelerate complex refactors.
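One simple way to approach drift detection, offered here as a sketch rather than a description of any existing tool, is to record a fingerprint of the spec each time a plan or task list is generated from it, then flag artefacts whose recorded fingerprint no longer matches the current spec. The function names and the `derived` mapping are assumptions for this example.

```python
import hashlib


def fingerprint(text: str) -> str:
    # Normalise line endings and trailing whitespace so cosmetic edits
    # are not reported as drift.
    normalised = "\n".join(line.rstrip() for line in text.strip().splitlines())
    return hashlib.sha256(normalised.encode("utf-8")).hexdigest()


def stale_artefacts(spec_text: str, derived: dict) -> list:
    """Return names of artefacts generated from an older version of the spec.

    `derived` maps an artefact name (for example "plan") to the fingerprint
    of the spec it was generated from.
    """
    current = fingerprint(spec_text)
    return sorted(name for name, seen in derived.items() if seen != current)
```

A content hash only detects that the spec changed, not whether the change matters to a given plan, so a flagged artefact is a prompt for human review rather than an automatic failure.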

⚠️ Hidden Challenges Nobody Talks About

Challenge 1: The “looks right” trap

AI-generated outputs can appear coherent while quietly violating non-functionals or enterprise patterns if constraints are not explicit in the Plan.

Mitigation Strategy: Treat the Plan as the boundary for quality—encode performance, security, and platform standards the agent must respect.
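To make the idea of explicit constraints concrete, the sketch below encodes a few plan-level rules as data and checks a proposed change against them. Everything here is hypothetical: the `PlanConstraints` and `ProposedChange` types, the specific rules (an approved dependency list, a size limit, a test requirement), and the `violations` function are stand-ins for whatever standards an organisation actually sets.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlanConstraints:
    """Hypothetical constraints a Plan might make explicit."""
    allowed_dependencies: frozenset
    max_lines_per_task: int
    require_tests: bool = True


@dataclass
class ProposedChange:
    """Hypothetical summary of a change produced for one task."""
    task_id: str
    new_dependencies: set
    lines_changed: int
    has_tests: bool


def violations(change: ProposedChange, plan: PlanConstraints) -> list:
    """List the ways a change breaks the plan; an empty list means it conforms."""
    problems = []
    unapproved = sorted(change.new_dependencies - plan.allowed_dependencies)
    if unapproved:
        problems.append(f"unapproved dependencies: {', '.join(unapproved)}")
    if change.lines_changed > plan.max_lines_per_task:
        problems.append(
            f"{change.lines_changed} lines changed exceeds limit of "
            f"{plan.max_lines_per_task}"
        )
    if plan.require_tests and not change.has_tests:
        problems.append("no tests included")
    return problems
```

A check like this catches only what has been written down, which is the point of the mitigation: output that "looks right" but adds an unapproved dependency is flagged because the rule is explicit, not because a reviewer happened to notice.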

Challenge 2: Governance theatre

Heavy process without real verification creates the illusion of control while specs and plans go stale.

Mitigation Strategy: Keep gates lightweight but binding; require evidence of validation before advancing phases.

Challenge 3: Integration and legacy drag

Without context engineering, feature work can feel bolted on and accumulate technical debt.

Mitigation Strategy: Use Tasks to enforce isolation and “native feel,” and update specs with discovered integration rules.

Challenge 4: Scaling inconsistency

Different teams interpret templates differently, eroding comparability and predictability.

Mitigation Strategy: Publish exemplars, run calibration reviews, and instrument shared metrics across squads.

🎯 Make the Spec the Truth: Your Next Steps

Spec-driven development converts AI from a hopeful assistant into a reliable delivery engine by centring intent, constraints, and verification in a simple, repeatable flow.

It balances autonomy with control: agents generate and implement, while humans steer and validate through minimal but meaningful checkpoints.

Three Critical Success Factors

- Shared ownership of intent: Keep specs living and cross-functional so constraints and outcomes are first-class, not afterthoughts.

- Executable governance: Encode standards in the Plan and Tasks so conformance is built into the work agents generate, not enforced after the fact.

- Validated increments: Measure intent-to-increment throughput, not just prompt-to-code speed, to reduce future risk.

Reframing Success

Success is not shipping more code faster; it is shrinking the distance between declared intent and deployed behaviour across iterations.

The four-phase, checkpointed flow gives leaders control without throttling flow because changes propagate via artefacts, not heroics.

Enterprises that operationalise this method gain a portfolio-level advantage: predictable delivery, faster pivots, and fewer costly rewrites.

Bottom Line: Make the spec the truth, the plan the constraint, and the task the unit of value, then let the agent implement while humans validate each increment.

Your Next Steps

Immediate Actions (This Week):

- Stand up a minimal spec and plan template aligned to security, compliance, and design standards.

- Pick one in-flight feature and run it through Specify → Plan → Tasks before further coding.

- Instrument baseline metrics: PR size, rework rate, time-to-first-value.

Strategic Priorities (This Quarter):

- Establish phase gate reviews and decision logs across two pilots with different risk profiles.

- Publish exemplars and playbooks for context engineering in existing systems.

- Introduce conformance checks comparing PRs against Plans and Tasks.

Long-term Considerations (This Year):

- Automate drift detection between specs, plans, and code; scale metrics across squads.

- Build portfolio-level spec patterns for common services and domains.

- Institutionalise “intent-to-increment” as a north-star metric.

Source: GitHub Blog: Spec-driven development with AI: Get started with a new open source toolkit

This strategic analysis was developed by Resultsense, providing AI expertise by real people. We help organisations navigate the complexity of AI implementation with practical, human-centred strategies that deliver real business value.